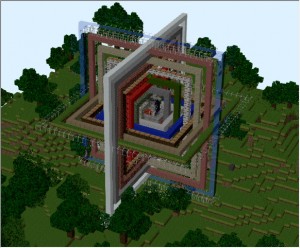

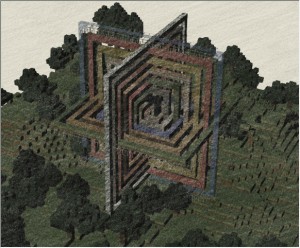

My crazy-person project for the month is done. It’s a little program called Mineways, which is a bridge between Minecraft and Shapeways, the 3D printing service. You can grab a chunk of a Minecraft world for rendering or 3D printing. See the Mineways Flickr group for some results.

Monthly Archives: December 2011

2011 Color and Imaging Conference Roundup

For convenience (using the CIC 2011 tag works but shows posts in reverse order), this post combines all the links to my CIC 2011 posts. Note that video for many of the presentations is available online, and many of the papers are also available on the various authors’ home pages.

Next year, CIC will be in Los Angeles, between November 12 and November 16. If you are local, I warmly recommend attending at least some of the courses, especially the two-day “fundamentals” course.

Two recommended (albeit somewhat expensive) resources for people interested in further study of color topics:

- The DVD of the two-day “Fundamentals of Color Science and Imaging” course, presented by Dr. Hunt.

- The book “Digital Color Management: Encoding Solutions” (make sure to get the 2nd edition) has great coverage of the image reproduction problem and its solutions. I see this problem as “the other half of rendering” – what do you do after you’ve generated those physically-based scene radiance values?

2011 Color and Imaging Conference, Part VI: Special Session

This last post on CIC 2011 covers a special session that took place the day after the conference. Although not strictly part of the conference (it required a separate registration fee), it covered closely related topics.

The special session “Revisiting Color Spaces” was jointly organized by the Inter-Society Color Council (ISCC), the Society for Imaging Science and Technology (IS&T), and the Society for Information Display (SID) to mark the 15th anniversary of the publication of the sRGB standard. It included a series of separate talks, all related to color spaces:

sRGB – Work in Progress

This presentation was given by Ricardo Motta, a Distinguished Engineer at NVIDIA. Mr. Motta developed the first colorimetrically calibrated CRT display for his Master’s thesis at RIT, helped develop much of HP’s color imaging tech as their first color scientist, and was one of the original authors of the sRGB spec. Now he has responsibility for NVIDIA’s mobile imaging technology and roadmap.

The presentation started with some history on the development of sRGB. It actually started with an attempt by HP and Adobe in 1989 to get the industry to standardize on CIELAB as a device-independent color space. Their first attempts at achieving industry consensus didn’t go well: Gary Starkweather at Apple insisted that full spectral representations (highly impractical at the time) were the right direction, and initial agreement by Microsoft to standardize on CIELAB was scotched when Nathan Myhrvold insisted on 32-bit XYZ (also infeasible) instead. After these setbacks, the people at HP and Adobe who were working on this started realizing that RGB can actually work pretty well as a device-independent color space. They wrote drivers for the Mac first, and ported them when Windows got color capability. PC monitors and televisions at the time all used the same CRT designs (the PC market was as yet too small to justify custom designs), so Adobe characterized the typical CRT in their RGB drivers – first as an internal HP standard (“HP RGB”), and later in collaboration with Kodak and Microsoft as part of the FlashPix standard (“NIF RGB”). In 1996, HP presented NIF RGB to Microsoft as a proposed standard, ending with the sRGB standard proposal exactly 15 years ago.

Why does sRGB work? RGB tristimulus values by themselves are not enough to describe color appearance. The effect of viewing conditions and white balance on the appearance of self-luminous displays is not fully understood. Colorimetry mostly focuses on surface colors, not self-luminous aperture colors. Also, the limited gamut of displays makes low-CCT white balances impractical.

By standardizing the assumed viewing conditions and equipment (display with near 2.2 gamma, Rec.709 primaries, D65 white point at 80 nits (cd/m²), 200 lux D50 ambient, 1% flare), sRGB ensures that the RGB data fully implies appearance with little processing needed. Also, daylight-balanced displays tend to remain constant in appearance over a wide range of viewing conditions (D65 is consistently perceived as neutral in the absence of other adapting illumination) so the results are robust in practice.
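To make the “near 2.2 gamma” part concrete, here is a minimal sketch of the sRGB encoding curve. The constants are the ones from the published sRGB standard (IEC 61966-2-1), not something shown in the talk, and the function names are just illustrative.

```python
def srgb_encode(linear: float) -> float:
    """Map a linear-light value in [0, 1] to a nonlinear sRGB signal in [0, 1]."""
    if linear <= 0.0031308:
        # Short linear segment near black.
        return 12.92 * linear
    # Power segment; combined with the linear toe, the overall shape is close to gamma 2.2.
    return 1.055 * linear ** (1 / 2.4) - 0.055


def srgb_decode(signal: float) -> float:
    """Inverse of srgb_encode: nonlinear sRGB signal back to linear light."""
    if signal <= 0.04045:
        return signal / 12.92
    return ((signal + 0.055) / 1.055) ** 2.4


def pure_gamma_encode(linear: float, gamma: float = 2.2) -> float:
    """Plain power-law encoding, for comparison with the piecewise sRGB curve."""
    return linear ** (1 / gamma)
```

Comparing `srgb_encode(0.5)` with `pure_gamma_encode(0.5)` shows how close the piecewise curve is to a plain 2.2 power law at mid-tones; the two differ mostly near black, where the linear segment takes over.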

In 1996 the strength of sRGB was that these viewing conditions and equipment were common and widely used. 15 years later, this strength has become a limiting factor in some scenarios.

If self-luminous display colors are not very close to the correct scene surface colors, there is a perceptual “snap” as the image suddenly appears as a glowing rectangle instead of a 3D scene (this is similar to the “uncanny valley” problem). Current standard display primaries fail to match large classes of surface colors due to their limited gamut; newer developments (AMOLED, LED backlights) enable a much wider color gamut.

In addition, displays have been getting much brighter – every decade, LED brightness has consistently increased by at least 20X. Newest LCD tablets achieve 500 nits with over 1000:1 contrast ratio (CR), exactly matching reflected colors in most conditions. Daylight equivalence requires 6,400 nits; by the end of this decade, portable displays should be able to show actual surface colors under all lighting situations.
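As a rough sanity check on that timeline, the arithmetic can be sketched as follows. It assumes (my assumption, not a claim from the talk) that display luminance keeps growing at the same constant exponential rate quoted for LEDs.

```python
import math


def years_to_reach(current_nits: float, target_nits: float, gain_per_decade: float) -> float:
    """Years needed to grow from current to target luminance at a constant exponential rate."""
    decades = math.log(target_nits / current_nits) / math.log(gain_per_decade)
    return 10.0 * decades


# Figures quoted in the talk: 500 nits today, 6,400 nits for daylight equivalence, 20X per decade.
years = years_to_reach(500.0, 6400.0, 20.0)
```

Under that assumption the gap closes in somewhat less than one decade, which is consistent with the “end of this decade” estimate.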

The sRGB approach is no longer valid in the mobile space – with highly variable viewing conditions and displays that can directly match reflective colors, we need to move from a “tristimulus + viewing conditions” encoding to an “object properties” encoding (still tristimulus-based).

OSA-UCS System: Color-signal Processing from Psychophysical to Psychometric Color

This presentation was given by Prof. Claudio Oleari from the Department of Physics at the University of Parma.

Psychophysical color specification is based on color matching under arbitrary viewing conditions. Psychometric color specification is based on quantifying perceived color differences and realizing uniform scales of perceived colors under controlled conditions (comparison of color samples on a uniform achromatic background under a chosen illuminant). Under these conditions, the only appearance phenomena are the instantaneous color constancy and the lightness contrast.

Between 1947 and 1974 the Optical Society of America (OSA) had a committee working on a uniform psychometric color scale; their goal was a lattice of colors in Euclidean color space where equal distances between points correspond to equal visual differences. However, they eventually concluded that this is not possible – the human color system does not work this way. The resulting system (OSA-UCS) had only approximately uniform color scales and many scientists considered this to be a failure. However, OSA-UCS has a very strong property which is not shared by any other color space – it is spanned by a net of perceived geodesic lines. These are scales of colors which define the shortest perceptual path between colors, ordered with the difference between each pair of colors equal to one just noticeable difference (jnd).

Prof. Oleari has published an algorithm linking the cone activations (psychophysical color) to the OSA-UCS coordinates (“Color Opponencies in the System of the Uniform Color Scales of the Optical Society of America”, 2004).

Another of Prof. Oleari’s papers (“Euclidean Color-Difference Formula for Small-Medium Color Differences in Log Compressed OSA-UCS Space”, 2009) defines a Euclidean color-difference formula based on a logarithmically compressed version of OSA-UCS (like other such formulas, it is only applicable to small color differences since a globally uniform space does not exist). This formula has only two parameters, but performs as well as the CIEDE2000 formula which has many more. Generalizing the formula to arbitrary illuminants and observers provides a matrix which is useful for color conversion of digital camera images between illuminants. Prof. Oleari claims that this matrix provides results that are clearly better for this purpose than other chromatic adaptation transforms (“Electronic Image Color Conversion between Different Illuminants by Perfect Color-Constancy Actuation in a Color-Vision Model Based on the OSA-UCS System”, 2010).

Design and Optimization of the ProPhoto RGB Color Encodings

This presentation was given by Dr. Geoff Wolfe, Senior Research Manager at Canon Information Systems Research Australia. However, the work it describes was done while he was at Kodak Research Laboratories, in collaboration with Kevin Spaulding and Edward Giorgianni.

ProPhotoRGB was created at a time (late 1990s – early 2000s) when the photographic world was in massive upheaval. In 2000 film sales were around 1 billion rolls/year; this decreased to 20 million by 2010 with an ongoing 20% volume reduction year on year. Digital cameras were just starting to become decent: in 1998 most consumer cameras had sensors under 1 megapixel, and in 1999 most had 2 megapixel sensors, and resolution continued to increase rapidly. Another interesting trend was digital processing for film; in 1990 the PhotoCD system scanned film to 24 megapixel images which were processed digitally and then printed out to analog film. ProPhoto RGB was intended to be used in a system which took this one step further: optically scanning negatives and then processing as well as printing digitally (today of course imaging is digital from start to finish).

During the mid to late 90s there was an increasing awareness that images could exist in different “image states”, characterized by different viewing environments, dynamic ranges, colorimetric aims and intended uses. The simplest example is to classify images as either scene-referred (unrendered) or output-referred (rendered picture or other reproduction). On one hand, scenes are very different than pictures – scenes have 14 stops or so of dynamic range vs. 6-8 stops in a picture, pictures are viewed in an adaptive viewing environment with a certain white point, luminance, flare, and surround – all of which affect color appearance. On the other hand, a scene and its picture are obviously closely related: the picture should convey the scene appearance. Memory and preference also play a part: people often assess an image against their memory of the scene appearance, which tends to be different (for example, more saturated) than the original scene. Even if they have never seen the original, people tend to prefer slightly oversaturated images.

There are several issues regarding the rendering of the scene into the display image. The first is the dynamic range problem – which 6-8 stops from the scene’s 14 should we keep? The adaptive viewing environment also poses some issues. An “accurate” reproduction of the scene colorimetry looks flat and dull compared to a “pleasing” rendition with adjustments to account for the viewing environment’s effect on perception.

ProPhoto RGB was designed as a related family of encodings allowing both original scenes and rendered pictures to be encoded. The encodings should facilitate rendering from scenes to picture with: common primaries for both scene and picture encoding, suitable working spaces for digital image processing, direct and simple relationships to CIE colorimetry and the ICC profile color space (PCS), and fast and simple transformations to commonly used output color encodings such as sRGB or Adobe RGB.

Since the desire was to have the same primaries for both scene and picture image states, choosing the right primaries was critical. The primaries needed to enable a gamut wide enough to cover all real world surface colors, and all output devices. On the other hand, making the gamut too wide could cause quantization errors (given a fixed bit depth and encoding curve, quantization gets worse with increasing gamut size). The primaries needed to yield the desired white point (D50) when present in equal amounts, and avoid objectionable hue distortions under tonescale operations (more on that below). However, the primaries did not need to be physically realizable; they could be outside the spectral locus.
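The “equal amounts give the white point” constraint is what pins down the RGB-to-XYZ matrix once the primaries are chosen. Below is a minimal sketch of that standard derivation. The chromaticities are the published ROMM RGB values, which I am bringing in myself (the talk summary above does not list them), and the helper names are hypothetical.

```python
def xy_to_xyz(x, y):
    """Chromaticity (x, y) to XYZ with luminance Y normalized to 1."""
    return (x / y, 1.0, (1.0 - x - y) / y)


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a 3x3 linear system a * s = b with Cramer's rule."""
    d = det3(a)
    solution = []
    for j in range(3):
        replaced = [[b[i] if k == j else a[i][k] for k in range(3)] for i in range(3)]
        solution.append(det3(replaced) / d)
    return solution


def rgb_to_xyz_matrix(primaries, white):
    """Build the matrix that maps RGB = (1, 1, 1) exactly onto the white point."""
    columns = [xy_to_xyz(x, y) for x, y in primaries]
    unscaled = [[columns[j][i] for j in range(3)] for i in range(3)]
    # Per-primary scale factors chosen so the three columns sum to the white point.
    scales = solve3(unscaled, xy_to_xyz(*white))
    return [[unscaled[i][j] * scales[j] for j in range(3)] for i in range(3)]


# Assumed values: ROMM RGB primaries (red, green, blue) and the D50 white point.
ROMM_PRIMARIES = [(0.7347, 0.2653), (0.1596, 0.8404), (0.0366, 0.0001)]
D50_WHITE = (0.3457, 0.3585)

romm_matrix = rgb_to_xyz_matrix(ROMM_PRIMARIES, D50_WHITE)
```

Each row of `romm_matrix` sums to the corresponding XYZ component of D50, which is the “equal amounts” condition expressed as a matrix property. Note that nothing in the derivation requires the primaries to lie inside the spectral locus.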

Regarding tonescale operations: a common image processing operation is to put each channel through a nonlinear curve, for example an S-shaped contrast enhancement curve. Such operations are fast, convenient and generally well-behaved; they also are guaranteed to not go out of the color space’s gamut. However, in the general case, tonescale operations are not hue-preserving, and can result in noticeable hue shifts in natural “highlight to shadow” gradients. These hue shifts are particularly objectionable in skin tones, especially if they shift towards green.
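The hue shift is easy to reproduce. The sketch below applies a simple S-shaped curve to each channel of a light and a dark version of the same color. The smoothstep curve and the sample colors are my own illustrative choices, and HSV hue is only a crude stand-in for perceived hue.

```python
import colorsys


def s_curve(value):
    """Smoothstep: an S-shaped contrast curve that maps [0, 1] onto [0, 1]."""
    return value * value * (3.0 - 2.0 * value)


def apply_tonescale(rgb):
    """Apply the same nonlinear curve to each channel independently."""
    return tuple(s_curve(channel) for channel in rgb)


def hue_degrees(rgb):
    """HSV hue angle in degrees (a rough proxy for perceived hue)."""
    hue, _, _ = colorsys.rgb_to_hsv(*rgb)
    return hue * 360.0


# Two points on a "highlight to shadow" gradient with identical HSV hue.
highlight = (0.8, 0.5, 0.4)
shadow = (0.4, 0.25, 0.2)

hue_shift_highlight = hue_degrees(apply_tonescale(highlight)) - hue_degrees(highlight)
hue_shift_shadow = hue_degrees(apply_tonescale(shadow)) - hue_degrees(shadow)
```

The two shifts come out with opposite signs, so a gradient that started with a single hue ends up with a visible hue variation from highlight to shadow. The output channels stay inside [0, 1], which is the “never leaves the gamut” property mentioned above.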

All these constraints were fed into Matlab and an optimization process was performed to find the final primaries. The hue rotations could not be eliminated, but they were reduced overall and minimized for especially sensitive areas such as skin tones. The final set of ProPhoto primaries was much better in this regard than the sRGB/Rec.709 or Adobe RGB (1998) primaries. Two of the resulting primaries were imaginary (outside the spectral locus), with the third (red) right on the spectral locus.

Besides the primaries and D50 white point, a nonlinear encoding (1/1.8 power with a linear toe segment) was added to create the ROMM (Reference Output Medium Metric) RGB color space, intended for display-referred data. A corresponding RGB space for scene-referred data was also defined: RIMM (Reference Input Medium Metric). RIMM had the same primaries as ROMM but a different encoding (same as Rec.709 but scaled to handle scene values up to 2.0, where 1.0 represents a perfect white diffuse reflector in the scene). An extended dynamic range version of RIMM (ERIMM) was defined as well. ERIMM has a logarithmic encoding curve with a linear toe segment, and can handle scene values up to 316.2 (relative to a white diffuse reflector at 1.0). All spaces can be encoded at 8, 12 or 16 bits per channel, but for ERIMM at least 12 bits are recommended.
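For reference, the ROMM encoding curve can be sketched in a few lines. The breakpoint and toe slope below are taken from the published ROMM RGB definition rather than from the talk, and the function names are hypothetical.

```python
# Breakpoint between the linear toe and the power segment.
# Equal to 16 ** (1.8 / (1 - 1.8)), i.e. 2 ** -9.
ROMM_BREAK = 1.0 / 512.0


def romm_encode(linear):
    """Encode a linear ROMM RGB channel value in [0, 1] with the 1/1.8 power curve."""
    clamped = min(max(linear, 0.0), 1.0)
    if clamped < ROMM_BREAK:
        # Linear toe segment with slope 16 near black.
        return 16.0 * clamped
    return clamped ** (1.0 / 1.8)


def romm_quantize(linear, bits):
    """Encode and quantize to an integer code value at 8, 12 or 16 bits per channel."""
    max_code = (1 << bits) - 1
    return round(max_code * romm_encode(linear))
```

The slope of 16 and the breakpoint are chosen so the two segments meet without a jump: `16 * ROMM_BREAK` and `ROMM_BREAK ** (1 / 1.8)` both equal 1/32.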

The original intended usage for this family of color spaces was as follows. First, the negative is scanned and a representation of the original scene values is created in RIMM or ERIMM space. This is known as “unbuilding” the film response – a complex process that needs to account for capture system flare, the distribution of exposure in the different color layers, crosstalk between layers and the film response curve. Digital rendering of the image puts it through a tone scale and goes to the ROMM output space, and is finally turned into a printed picture or displayed image.

Digital cameras tend to have much simpler unbuilding processes – it is straightforward to get scene linear values from the camera RAW sensor values. For this reason, Dr. Wolfe thinks that camera RAW can be an effective replacement for scene-referred encodings such as RIMM/ERIMM, which he claims are now effectively redundant. On the other hand, he found that ROMM / ProPhoto RGB is still used by many photography professionals (and advanced amateurs) for its ability to capture highly saturated objects (such as iridescent bird feathers) and ease of tweaking in Photoshop.

During the Q&A period, several people in the audience challenged Dr. Wolfe’s statement that scene-referred encodings are no longer needed. The Academy of Motion Picture Arts and Sciences (AMPAS) uses a scene-referred encoding in their Image Interchange Format (IIF) because their images come from a variety of sources, including different film stocks as well as various digital cameras. Even for still cameras, a scene-referred type of encoding is needed at least as the internal reference space (e.g. ICC PCS) even if the consumer never sees it.

Adobe RGB: Happy Accidents

This presentation was given by Chris Cox, a Senior Computer Scientist at Adobe Systems who has been working on Photoshop since 1996. It covered the history of the “Adobe RGB (1998)” color space.

In 1997-1998, Adobe was looking into creating ICC profiles that their customers could use with Photoshop’s new color management features. Not many applications had ICC color management at this point, so operating systems didn’t ship with ICC profiles yet.

Thomas Knoll (the original creator – with his brother John – of Photoshop) was looking for relevant standards and ideas to build ICC profiles around; one of the specifications he found documentation for was the SMPTE 240M standard, which was the precursor to Rec.709. SMPTE 240M looked interesting – its gamut was wider than sRGB’s but not huge, and tagging existing content with it didn’t result in horrid colors. The official standards weren’t available online, and Adobe couldn’t wait to have a paper copy mailed since Photoshop 5 was about to ship, so they got the information from a somewhat official-looking website.

Adobe got highly positive feedback from their customers about the “SMPTE 240M” profile. Users loved the wide gamut and found that color adjustments looked really good in that space and that conversions to and from CMYK worked really well. A lot of books, tutorials and seminars recommended using this profile.

A while after Photoshop 5 shipped, people familiar with the SMPTE 240M spec contacted Adobe and told them that they got it wrong. It turns out that the website they used copied the values from an appendix to the spec which contained idealized primaries, not the actual standard ones. The real SMPTE-240M is a lot closer to sRGB (which Photoshop users didn’t like as a working space). Even worse, Thomas Knoll made a typo copying the red primary chromaticity values so the primaries Photoshop 5 shipped with weren’t even the correct ones from the appendix.

What to do? The profile was wrong in at least two different ways, but the customers REALLY liked it! Adobe tried to improve on the profile in various ways, and built test code to evaluate CMYK conversion quality (which was something the customers especially liked about the “SMPTE 240M” profile) in the new “fixed” profiles.

But no matter what they tried (correcting the red primary, changing the white point from D65 to the theoretically more prepress-friendly D50, widening the primaries, moving the green to cover more gamut, etc.), every change made CMYK conversion worse than the “incorrect” profile.

In the end, Adobe decided to keep the profile but change the name. They picked “Adobe RGB” so they wouldn’t have to do a trademark search or get legal approval. The date was added to the profile name since they were sure they would be bringing out a better version soon, and the “Adobe RGB (1998)” profile was shipped in a Photoshop 5 dot release. Adobe kept experimenting, but was never able to improve on the profile. After a while they stopped trying.

Some time later, Kodak visited them to talk about ProPhoto RGB and how it was designed to minimize hue shifts under nonlinear tonescale operations (see previous talk). Adobe realized they had lucked into a color space that just happened to have good behavior in that regard, explaining the good CMYK conversions (which typically suffer from the same issue). Kodak assumed that Adobe had designed their color space like that on purpose.

Recent Work on Archival Color Spaces

This session was presented by Dr. Robert Buckley, formerly a Distinguished Engineer at Xerox, now a scientist at the University of Rochester.

It describes work done in collaboration between the CIE Technical Committee TC8-09 (of which Dr. Buckley is chair) and the Still Image Working Group of the Federal Agencies Digitization Initiative (FADGI).

TC8-09 did a recent study where they sent a set of test pieces to participating institutions to digitize with their usual procedures. The test pieces included four original color prints and three standard targets: X-Rite Digital ColorChecker SG, Image Engineering Universal Test Target (UTT) and the Library of Congress Digital Image Conformance Evaluation (DICE) Object Target. Special sleeves were made for the prints with holes to identify specific regions of interest (ROIs) for measurement. The technical committee members measured CIELAB values for the print ROIs and the standard target patches for later comparison with the results produced by the participating institutions.

Each institution used their usual scanning equipment and procedures; some used digital cameras, others used scanners; they used various profiles (manufacturer or custom) and some post-processed the resulting images.

The best agreement between the institutions’ captures and the measured values was in the cases where digital cameras were used with custom profiles. In general the agreement was better for the targets than for the originals, which isn’t surprising since calibration uses similar targets. The committee concluded that better results would be obtained if the capture devices were calibrated to targets that contained colors more representative of the content being captured (not true for the standard targets).

Besides evaluating the various capture protocols, TC8-09 also wanted to establish which color space is best to use for image archiving. The gamut should of course include all the colors in the archived documents, but it should not be larger than necessary to avoid quantization artifacts. Specifically, if 8 bits per channel are used (which is common) then the gamut shouldn’t be much wider than sRGB. In practice, most of the material (with a few exceptions, such as a color plate in a book on gems) fit easily in the sRGB gamut.

Modern Display Technologies: Is sRGB Still Relevant?

This session was presented by Tom Lianza, “Corporate Free Electron” at X-Rite and Chair of the International Color Consortium (ICC).

One of sRGB’s main strengths is the fact that the primary chromaticities are the same as Rec.709 (and the two tone reproduction curves, while not identical, do have similarities). These similarities have led to the easy mixing of motion and still images in many different environments. The Rec.709 primaries were based on CRT primaries – at the time it was not clear whether they could be realized in flat-panel displays, but the standard pushed the manufacturers to make sure they did.

One of the goals of any color space is to reproduce the Pointer gamut of real-world surface colors. Unfortunately, there are cyans in this gamut that will be a problem for pretty much any physically realizable RGB system.

An output referred color space will always require some specification of ambient conditions. This is needed for effective perceptual encoding.

A missing element in many color spaces is a hard definition of black (Adobe RGB is one of the few that does have an encoding specification of black). The lack of this definition leads to inter-operability issues, and to non-uniform rendering in practice. ICC is now moving black point compensation into ISO to be considered as a standard, which would allow more vendors to use it (Adobe currently have an algorithm which their products use).

All commonly used display technologies (including the iPhone screen, which has a really small gamut) encompass the Bartleson memory colors (“Memory Colors of Familiar Objects”, 1960). This explains why people find them all acceptable, although they vary greatly in gamut size and none of them cover the Pointer gamut completely.

Viewing conditions for sRGB are well defined but the assumptions of low-luminance displays viewed in low ambient lighting do not reflect how people view images today.

Cameras are not (and should not be) colorimeters. They do not use sRGB as a precise encoding curve (most cameras reproduce images with a relative gamma of 1.2-1.3 vs. the sRGB encoding curve, to account for low viewing luminance). Instead, cameras are designed to produce good images when viewed on an sRGB display – having a common target guides the different manufacturers to similar solutions. As an example, Mr. Lianza showed a scene with highly out-of-gamut colors, photographed with automatic white balancing on cameras from different vendors. There is no standard for handling out-of-gamut colors, but nevertheless all the cameras produced very similar images. This is because the critical visual evaluations of these cameras’ algorithms were all done on the same (sRGB) displays.

Browsers have various issues with color management. ICC has a test page which can be used to see if a browser handles ICC version 4 profiles properly. Chrome does not have color management and shows the entire page poorly. Firefox shows the ICC version 2 profile test correctly, but not the ICC version 4 test. Safari has good color management and shows all images well, but not when printing.

Conclusions: sRGB is robust and can be used to reproduce a wide range of real-world and memory colors. Existence of the specification coupled with physically realizable displays makes the application of the spec quite uniform in the industries that use it. The lack of black point specification and the low luminance assumption have caused manufacturers to apply compensation to the images which may not work well at higher luminances encountered in mobile environments. It may be possible to tweak the spec for higher luminance situations, but any wholesale changes will have a very bad effect on the market place due to the huge amount of legacy content. The challenge to sRGB in the 21st century comes from disruptive display technologies and the implementations that allow for simultaneous display of sRGB and wide gamut images on the same media at high luminance and high ambient conditions.

Question from the audience: most mobile products don’t have color management, and this is a core issue now. Answer: ICC is splitting into three groups. ICC version 4 is staying stable to address current applications, the “ICC Labs” open-source project is intended for advanced applications, and there will be a separate project to establish a solution for the web and mobile (there is a current discussion regarding adding a new working group for mobile hardware).

Device-Independent Imaging System for High-Fidelity Colors

This session was presented by Dr. Akiko Yoshida from SHARP. It describes the same system that SHARP presented at SIGGRAPH 2011 (there was a talk about the system, and the system itself was shown in Emerging Technologies).

The system comprises a wide-gamut camera (which colorimetrically captures the entire human visual range of colors) and a 5-primary display with a gamut that includes 99% of Pointer’s real-world surface colors.

The camera they developed has sensor sensitivities that satisfy the Luther-Ives condition: the sensitivity curves are a linear combination of cone fundamentals (or equivalently, of the appropriate color-matching functions). This is the first digital camera to satisfy this condition. It is fully colorimetric, measuring the Macbeth ColorChecker chart with an accuracy of about 0.27 ΔE.

Today’s display systems cannot display many colors found in daily life, as can be seen by comparing their gamuts to the Pointer surface color gamut (“The Gamut of Real Surface Colors”, 1980). Although the Pointer gamut is relatively small compared to the gamut of human vision, it cannot be efficiently covered with three RGB primaries. SHARP set a goal to reproduce real-surface colors faithfully and efficiently with a five-primary system (“QuintPixel”) including RGB plus yellow and cyan. QuintPixel actually has six subpixels for each pixel – the red subpixel appears twice. This was necessary to get adequate coverage of reds. This display can efficiently reproduce 99.9% of Pointer’s gamut.

Why not just extend the three primaries? Mitsubishi has rear-projection laser TVs with really wide RGB gamuts. The reason SHARP didn’t take this approach is efficiency – the gamut is much larger than it needs to be. Another advantage of adding primaries is color reproduction redundancy, which can be exploited to have brighter reproduction at the same power consumption, lower power consumption with the same brightness, or improved viewing angle. The larger number of sub-pixels can also be used to greatly increase resolution (similarly to Microsoft’s “ClearType” technology). These advantages can be realized without losing the wide gamut.

The camera sends 10-bit XYZ signals at 30Hz to the display via the CameraLink protocol. The display does temporal up-conversion from 30 to 60 Hz as well as interpreting the XYZ signal.

Q&A Session:

Question: Is the colorimetric camera available for purchase? Answer: yes, for 1M yen (about $13,000).

Question: 10 bits are not enough for XYZ, are they planning to address this? Answer: yes, they do plan to increase the bit-depth.

Question: what is the display resolution? Answer: They use a 4K panel and combine two pixels into one, cutting the resolution in half.

Is There Really Such a Thing As Color Space? Foundation of Uni-Dimensional Appearance Spaces

This talk was presented by Prof. Mark D. Fairchild, from the Munsell Color Science Laboratory in the Rochester Institute of Technology.

Color is an attribute of visual sensation – not physical values. Color scientists seldom question the 3D nature of color space, but Prof. Fairchild thinks that it is more correct to think about color as a series of one-dimensional appearance spaces or scales, and not to try to link them together.

Color vision is only part of the visual sense, which is itself just one of five senses. Only in color vision is a multidimensional space commonly used to describe perception. All the other senses are described with multiple independent dimensions as appropriate, not with multi-dimensional Euclidean differences.

For example, taste has at least five separate scales: sweet, bitter, sour, salty, and umami. But there is no definition of “delta-Taste” which collapses taste differences into a single number. Smell has about 1000 different receptor types, and some have tried to reduce the dimensionality to about six such as flowery, foul, fruity, spicy, burnt, and resinous. Hearing is spectral – our ears can perceive the spectral power distribution of the sound. Touch might well be too complex to summarize in a single sentence.

Why should color vision be different? Perhaps researchers have been misled by certain properties of color vision such as low-dimensional color matching and simple perceptual relationships such as color opponency. The 3×3 linear transformations between color matching spaces really reinforce the feeling of a three-dimensional color space, but they have nothing to do with perception. Color scientists have spent a lot of effort looking for the “holy grail” of a global 3D color appearance space with Euclidean differences, to no avail.

Perhaps this is misguided and efforts should focus on a set of 1D scales instead. There have been examples of such scales in color science. The Munsell system has separate hue, value and chroma dimensions. Similarly, Guth’s ATD model of visual perception was typically described in terms of independent dimensions. Color appearance models such as CIECAM02 were developed with independent predictors of the perceptual dimensions of brightness, lightness, colorfulness, saturation, chroma, and hue. This was compromised by requests for rectangular color space dimensions which appeared as CIECAM97s evolved to CIECAM02. The NCS system treats hue separately from whiteness-blackness and chromaticness, though it does plot the latter two as a two dimensional space for each hue.

This insight leads to the hypothesis that perhaps color space is best expressed as a set of 1D appearance spaces (scales), rather than a 3D space, and that difference metrics can be effective on these separate scales (but not on combinations of them). The three fundamental appearance attributes for related colors are lightness, saturation, and hue. Combined with information on absolute luminance, colorfulness and brightness can be derived from these and are important and useful appearance attributes. Lastly, chroma can be derived from saturation and lightness if desired as an alternative relative colorfulness metric.

Prof. Fairchild has derived a set of color appearance dimensions following these principles. The first step is to apply a chromatic adaptation model to compute corresponding colors for reference viewing conditions (D65 white point, 315 cd/m2 peak luminance, 1000 lux ambient lighting). Then the IPT model is used to compute a hue angle (h) and then a hue composition (H) can be computed based on NCS. For the defined hue, saturation (S) is computed using the classical formula for excitation purity applied in the u’v’ chromaticity diagram. For that chromaticity, G0 is defined as the reference for lightness (L) computations that follow a “power plus offset” (sigmoid) function. Brightness (B) is Lightness (L) scaled by the Stevens and Stevens terminal brightness factor. Colorfulness (C) is Saturation (S) scaled by Brightness (B), and Chroma (Ch) is Saturation (S) times Lightness (L).

Prof. Fairchild plans to present his detailed formulation soon, and do testing and refinement afterwards.

HDR and UCS: Do HDR Techniques Require a New UCS Space?

This session was presented by Prof. Alessandro Rizzi from the Department of Information Science and Communication at the University of Milan. There was some overlap between this session and the “HDR Imaging in Cameras, Displays and Human Vision” course which Prof. Rizzi presented earlier in the week.

Colorimetry ends in the retinal cone outer segments; color appearance is at the other end of the human visual system. Appearance incorporates all the spatial processing of all the color responsive neurons. Thus color vision can be analyzed in two ways: bottom-up starting from the color matching response of retinal receptors accounting for pre-retinal absorption and glare (going through color matching tests, e.g. the CIE 1931 observer) or top-down starting from the color appearance generated by the entire human visual system (asking observers to describe the apparent distances between hues, chromas and lightnesses, e.g. the Munsell color space).

Recent work (“A Quantitative Model for Transforming Reflectance Spectra Into the Munsell Color Space Using Cone Sensitivity Functions and Opponent Process Weights”, 2003) has linked the two, solving for the 3-D color space transform that places LMS cone responses in the color-space positions measured for the Munsell Book of Color. The process includes a correction for veiling glare inside the eye, which causes the image on the retina to be different than the original scene intensities entering the cornea. The cone response is proportional to the logarithm of the retinal intensities, which (because of glare) is proportional to the cube root of scene intensities. This glare also limits the dynamic range of the retinal image. The link between cone responses and Munsell colors also involves a strong color-opponent process (creating signals differentiating opponent colors such as red-green or yellow-blue).

CIE L*a*b* also has a cube root response and opponent channel mechanism. L*a*b* handles the lightness component of HDR scenes with a two-component compression curve – the first component is a cube-root function in both lightness and chroma for high and medium light levels, and the second is a linear function for low light levels (the two components connect seamlessly). The sRGB and Rec.709 transfer functions are similarly constructed. CIE L*a*b* normalizes each of X, Y and Z to its maximum value over the image before further processing; this is equivalent to the way human vision effectively normalizes L, M and S cone responses (it processes differentials/ratios and not absolute values, as in Retinex theory). After normalization, the compression curve scales the large range of possible radiances into a limited range of appearances – 99% of possible lightnesses correspond to the top 1000:1 range of scene radiances – all remaining radiances (darker than 1/1000 of the white point) correspond to the bottom 1% of possible perceived lightness values. sRGB has similar behavior.
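The two-component lightness curve is easy to check numerically. The following is a minimal sketch of the standard CIE L* formula (the function name is my own choice), taking luminance relative to the white point. It illustrates the claim above: a radiance 1/1000 of white lands at roughly L* = 0.9, in the bottom 1% of the lightness scale.

```python
def cielab_lightness(y_rel):
    """CIE L* for a luminance y_rel given relative to the white point (0..1)."""
    delta = 6.0 / 29.0
    if y_rel > delta ** 3:
        # Cube-root segment for medium and high light levels.
        f = y_rel ** (1.0 / 3.0)
    else:
        # Linear segment for low light levels; it meets the cube root at delta**3.
        f = y_rel / (3.0 * delta ** 2) + 4.0 / 29.0
    return 116.0 * f - 16.0


white = cielab_lightness(1.0)      # 100
dark = cielab_lightness(0.001)     # about 0.9
```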

Given these considerations, Prof. Rizzi does not believe that new uniform color spaces (UCSs) are needed for HDR imaging; existing spaces can handle the range that the human eye can perceive in a single scene (note that this analysis does not relate to intermediate images, such as HDR IBL – UCSs are only used to describe the perceived colors in the final viewed image).

Digital HDR Color Separations

This session was presented by John McCann, an independent color and imaging consultant since 1996. Previously he led Polaroid’s Vision Research Laboratory for over 30 years, working on topics including Retinex theory, color constancy, very large-format photography, and perceptually-guided color reproduction. John is a co-author of the recently published book “The Art and Science of HDR Imaging”.

Many applications (HDR exposure bracketing, various computer vision and spatial image processing algorithms) need linear light scene values. The JPEGs produced by cameras are very far from linear light; they are images created with the intention of creating a preferred rendering of the scene, which looks pleasing and is not colorimetrically accurate. Regular color print & negative film were designed with a similar intent and produce similar results.

Although the sRGB standard specifies an encoding from scene values, and camera manufacturers follow some aspects of the sRGB standard in producing JPEGs, the processing differs in important ways from the sRGB encoding spec. The algorithms that perform the demosaic, color balance, color enhancement, tone scale, and post-LUT for display and printing create discrepancies between the sRGB output in practice and an idealized conversion of scene radiances to sRGB space.

Together with Vassilios Vonikakis (Democritus University of Thrace, Greece), John McCann did an experiment to measure these discrepancies. Images of a Macbeth ColorChecker chart were taken under varying exposures using three methods: digitally scanned traditional color separation photographs, standard JPEG images from a commercial camera, and “RAW* separations” from the same camera. Traditional color separation photographs use R, G and B filters and panchromatic black and white film to create separate single-channel R, G and B images that are combined into a single color image. “RAW* separations” is the authors’ name for linear RGB values that were generated from partially processed RAW camera data (read with LibRaw’s “unprocessed” function). This data does not even include demosaic – it is a black and white image with the mosaic pattern (e.g., Bayer) in it. The authors did their own, carefully calibrated processing on these images to create normalized, linear RGB data.

The photographic separations were most correct – the chromaticity of the Macbeth chart squares remained very stable across all the exposure values. The JPEG image had the largest chroma errors – the chromaticities of the colored Macbeth squares varied greatly with exposure – this is part of the “preferred rendering” performed by these cameras to make the resulting image look good. The RAW* separations were similar to film (slightly less stable chromaticities, but close).

The conclusion is that for any algorithm that needs linear scene data, it is important to use RAW data where most of the processing has been turned off and do carefully calibrated processing.

2011 Color and Imaging Conference, Part V: Papers

Papers are the “main event” at CIC. Unlike the papers at computer science conferences (which are indistinguishable from journal papers), CIC papers appear to be focused more towards “work in progress” and “inspiring ideas”. This stands in contrast to the work published in color and imaging journals such as the Journal of Imaging Science and Technology or the Journal of Electronic Imaging. This distinction is actually the norm in most fields – computer science is atypical in that respect.

Note that since CIC is single-track, I was able to see (and describe in this post) all the papers, including some that aren’t as relevant to readers of this blog.

Root-Polynomial Colour Correction

Images from digital cameras need to be color-corrected, since they typically have sensors which cannot be easily mapped to device independent color-matching functions.

The simplest mapping is a linear transform (matrix), which can be obtained by taking photos of known color targets. However this assumes that the camera spectral sensitivities are linear combinations of the device-independent ones, which is not the case.

Polynomial color correction is another option which can reduce the error of the linear mapping by extending it with additional polynomials of increasing degree. However, polynomial color correction is not scale-independent – there is a chromaticity shift when intensity changes (e.g. based on lighting). This shift can be quite dramatic in some cases.

This paper proposes a new method: root-polynomial color correction. It is very straightforward: simply take the nth root of each nth order term in the extended polynomial vector. Besides restoring scale-independence, the vector also becomes smaller since some of the terms now become the same (e.g. sqrt(r*r) = r).
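A minimal sketch of the idea for degree 2, assuming the second-order terms are the squares and pairwise products of r, g and b (function names are mine, not the paper's). Taking the square root of each second-order term collapses sqrt(r*r) to r, so only three cross terms are added to the linear model, and scaling the input by k scales the whole vector by k.

```python
import numpy as np

def poly2(rgb):
    """Ordinary degree-2 polynomial expansion: 9 terms, not scale-independent."""
    r, g, b = rgb
    return np.array([r, g, b, r * r, g * g, b * b, r * g, g * b, r * b])

def root_poly2(rgb):
    """Degree-2 root-polynomial expansion: 6 terms, each of degree 1 in the input."""
    r, g, b = rgb
    return np.array([r, g, b, np.sqrt(r * g), np.sqrt(g * b), np.sqrt(r * b)])


rgb = np.array([0.2, 0.5, 0.3])
k = 2.0  # e.g. the same surface under twice the light level

# Root-polynomial terms scale linearly with exposure; plain polynomial terms do not.
print(np.allclose(root_poly2(k * rgb), k * root_poly2(rgb)))
print(np.allclose(poly2(k * rgb), k * poly2(rgb)))
```

Either expanded vector would then be mapped to device-independent values with a matrix fitted by least squares on photos of known color targets, exactly as in the linear case.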

Experiments showed that with fixed illumination, root-polynomial color correction performed similarly to higher-order polynomial correction. It performed much better if the illumination level changes, even slightly. A large improvement is achieved by adding only three terms to the linear model, so this technique provides very good bang for buck.

Tone Reproduction and Color Appearance Modeling: Two Sides of the Same Coin?

This invited paper was written and presented by Erik Reinhard (University of Bristol), who has done some very influential work on tone mapping for computer graphics and has also co-authored some good books on HDR and color imaging.

Tone mapping or tone reproduction typically refers to luminance compression (often sigmoidal), intended to map high-dynamic range images onto low-dynamic range displays. This can be spatially varying or global over the image. However, tone mapping typically does not take account of color issues – most tone mapping operators work on the luminance channel and the final color is reconstructed via various ad-hoc methods – the two most popular ones are by Schlick (“Quantization Techniques for Visualization of High Dynamic Range Pictures”, 1994) and Mantiuk (“Color correction for Tone Mapping”, 2009). They do not take account of the various luminance-induced appearance phenomena that have been identified over the years: the Hunt effect (perceived colorfulness increases with luminance), the Stevens effect (perceived contrast increases with luminance), the Helmholtz-Kohlrausch effect (perceived brightness increases with saturation for certain hues), and the Bezold-Brücke effect (perceived hue shifts based on luminance).
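To make the "compress luminance, then rebuild color" structure concrete, here is a minimal sketch. It assumes linear RGB with Rec. 709 luminance weights, uses the simple curve L/(1+L) as a stand-in for whichever operator is chosen, and rebuilds color by preserving each channel's ratio to luminance, with an exponent s as a saturation control (s = 1 leaves the ratios unchanged). The function name and parameters are illustrative and not taken from the cited papers.

```python
import numpy as np

# Rec. 709 luminance weights for linear RGB.
LUMA_WEIGHTS = np.array([0.2126, 0.7152, 0.0722])

def tone_map_luminance_only(rgb_hdr, s=1.0):
    """Compress luminance globally, then reconstruct color from channel ratios."""
    rgb_hdr = np.asarray(rgb_hdr, dtype=float)
    lum_in = (rgb_hdr * LUMA_WEIGHTS).sum(axis=-1, keepdims=True)
    lum_out = lum_in / (1.0 + lum_in)            # stand-in compression curve
    ratio = rgb_hdr / np.maximum(lum_in, 1e-9)   # per-channel ratio to luminance
    return ratio ** s * lum_out


pixels = np.array([[4.0, 4.0, 4.0],    # bright grey
                   [8.0, 2.0, 1.0]])   # bright reddish pixel
ldr = tone_map_luminance_only(pixels)
```

Nothing in this pipeline knows about the Hunt or Stevens effects; the color step is pure bookkeeping, which is the gap the paper points at.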

Color appearance models attempt to predict the perception of color under different illumination conditions. They include chromatic adaptation, non-linear range compression (often sigmoidal), and other features used to compute appearance correlates. They are designed to take account of effects such as the ones mentioned in the previous paragraph, but most of them do not handle high dynamic range images (there are some exceptions, such as iCAM and the model presented in the 2009 SIGGRAPH paper “Modeling Human Color Perception Under Extended Luminance Levels”).

Tone mapping and color appearance models appear to have important functional similarities, and their aims partially overlap. The paper was written to show opportunities to construct a combined tone reproduction and color appearance model that can serve as a basis for predictive color management under a wide range of illumination conditions.

Tone mapping operators tend to range-compress luminance and ignore color. Color appearance models tend to identically range-compress individual color channels (typically in a sharpened cone space) and do separate chromatic adaptation. A recent color appearance model by Erik and others (“A Neurophysiology-Inspired Steady-State Color Appearance Model”, 2009) combines chromatic adaptation and range compression into the same step (basically doing different range compression on each channel), which Erik sees as a step towards unifying the two approaches.

Another recent step towards unifying the two can be seen in HDR extensions to color spaces (“hdr-CIELAB and hdr-IPT: Simple Methods for Describing the Color of High-Dynamic Range and Wide-Color-Gamut Images”, 2010) which replace the compressive power function with sigmoid curves. A similar approach was taken for HDR color appearance modeling (“Modeling Human Color Perception Under Extended Luminance Levels”, 2009). Image appearance models such as iCAM and iCAM06 incorporate HDR in a different way, taking account of spatial adaptation.

Some of the most successful tone mapping operators are based on neurophysiology, but put the resulting “perceived” values into a frame buffer. This is theoretically wrong, but looks good in practice. Color appearance models instead run the model in reverse from the perception correlates to display intensities (with the display properties and viewing conditions). This is theoretically more correct, but in practice tends to yield poor tone mapping since the two sigmoid curves (one run forward, one in reverse) tend to cancel out, undoing a lot of the range compression. An ad-hoc way to combine the strengths of both approaches (the color management of color appearance models and the range compression of tone mapping operators) is to run a color appearance model on an HDR image, then reset the luminance to retain only chromatic adjustments and compress luminance via a tone mapping operator. However, it is hoped that the recent work mentioned above (combining chromatic adaptation & range compression, sigmoidally compressed HDR color spaces, and HDR color appearance models such as iCAM) can be built upon to form a more principled unification of tone mapping and color appearance modeling.

Real-Time Multispectral Rendering with Complex Illumination

Somewhat unusually for this conference, this paper was about a computer graphics real-time rendering system. The relevance comes from the fact that it was a multispectral real-time rendering pipeline.

RGB rendering is used almost exclusively in industry applications; however, it is an approximation. Although three numbers are enough to describe the final rendered color, they are not enough in principle to compute light-material interactions, which can be affected by metameric errors.

The authors wanted their pipeline to support complex real world illumination (image-based lighting – IBL), while still allowing for interactive (real-time) rendering. They used Filtered Importance Sampling (see “Real-time Shading with Filtered Importance Sampling”, EGSR 2008) to produce realistic (Ward) BRDF interactions with IBL.

The implementation was in OpenGL, using 6 spectral channels so they could use pairs of RGB textures for reflectance and illumination, two RGB render targets, etc. After rendering each frame, the 6-channel data was transformed first to XYZ and then to the display space, optionally using a chromatic adaptation transform.

The reflectance data was taken from spectral reflectance databases and the spectral IBL was captured by removing the IR filter from a Canon 60D camera and taking bracketed-exposure images of a stainless steel sphere with two different spectral filters.

The underlying mathematical approach was to use a set of six spectral basis functions and multiply their coefficients for light-material interactions, as in the work of Drew and Finlayson (“Multispectral Processing Without Spectra”, 2003). However, the authors found a new set of optimized basis functions (primaries), optimized to minimize error for a set of illuminants and reflectances.
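As a rough sketch of what this means in practice (my own illustration, not the authors' shader code): light and reflectance are each stored as six basis coefficients, a light-material interaction is a per-channel product, and the result is projected to XYZ with a 3×6 matrix at the end of the frame. The matrix values below are placeholders; real ones would come from integrating each basis function against the color-matching functions.

```python
import numpy as np

def reflect(light6, reflectance6):
    """Light-material interaction as a per-channel product of six coefficients."""
    return np.asarray(light6, dtype=float) * np.asarray(reflectance6, dtype=float)

def to_xyz(coeffs6, basis_to_xyz):
    """Project six-channel data to XYZ with a 3x6 matrix."""
    return np.asarray(basis_to_xyz, dtype=float) @ np.asarray(coeffs6, dtype=float)


# Placeholder (hypothetical) matrix; not derived from any real basis.
PLACEHOLDER_BASIS_TO_XYZ = np.full((3, 6), 1.0 / 6.0)

light = np.array([1.0, 0.9, 0.8, 0.7, 0.6, 0.5])   # made-up illuminant coefficients
paint = np.array([0.1, 0.2, 0.4, 0.6, 0.3, 0.1])   # made-up reflectance coefficients
xyz = to_xyz(reflect(light, paint), PLACEHOLDER_BASIS_TO_XYZ)
```

The per-channel product is the same operation RGB renderers already perform, which is why six channels map so neatly onto pairs of RGB textures and render targets.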

The authors compared their results with best-of-class three-channel methods such as the one described in the EGSR 2002 paper “Picture Perfect RGB rendering using Spectral Prefiltering and Sharp Color Primaries”. The results of the six-channel method were visibly closer to the ground truth (the RGB rendering had quite noticeable color errors in certain cases).

Choosing Optimal Wavelengths for Colour Laser Scanners

Monochrome laser scanners are widely used to capture geometry but are incapable of capturing color information. Color laser scanners are a popular choice since they capture geometry and color at the same time, avoiding the need for a separate color capturing system as well as the registration issues involved in combining disparate sources of data. These scanners scan three lasers (red, green, blue) to simultaneously obtain XYZ coordinates as well as RGB reflectance.

However, laser scanners are effectively point-sampling the spectral reflectance at three wavelengths, which is known to be a highly inaccurate method, prone to metamerism. Also, the three wavelengths typically used (635nm, 532nm, and 473nm for the Arius scanner – similar wavelengths for other scanners) are chosen for reasons unrelated to colorimetric accuracy.

The authors of this paper did a brute-force optimization process to find the three best wavelengths for minimizing colorimetric error in color laser scanners. They found that the same three wavelengths (460nm, 535nm, and 600nm) kept popping up, regardless of the reflectance dataset, the difference metric, or any other variation in the optimization process. The errors using these wavelengths were much lower than with the wavelengths currently used by the laser scanners – the color rendering index (CRI) improved from 48 to 75 (on a 0-100 scale). Interestingly, adding a fourth and fifth wavelength gave no improvement at all.

Since these wavelengths are independent of the color space, difference metric and sample set, they must be associated with a fundamental property of human vision. These wavelengths are very close to the ‘prime colors’ (approximately 450nm, 530nm, and 610nm) identified in 1971 by Thornton (“Luminosity and Color-Rendering Capability of White Light”) as the wavelengths of peak visual sensitivity. These wavelengths were later shown (also by Thornton) to have the largest possible tristimulus gamut (assuming constant power), and are therefore optimal as the dominant wavelengths of display primaries. The significance of these wavelengths can be understood by applying Gram-Schmidt orthonormalization to the human color-matching functions (with the luminance function as the first axis) – the maxima and minima of the two chromatic orthonormal color matching functions line up along these three wavelengths. In other words, these wavelengths produce the maximal excitation of the opponent color channels in the retina.

These results are applicable not just to laser scanners but also to regular (broadband-filter) cameras and scanners, in guiding the dominant wavelengths of the spectral sensitivity functions.

(How) Do Observer Categories Based on Color Matching Functions Affect the Perception of Small Color Differences?

The CIE 2° and 10° standard observers that underlie a lot of color science are well-understood to be averages; people with normal color vision are expected to deviate from these to some extent. There is even a CIE standard as to the expected variation (the somewhat amusingly-named CIE Standard Deviate Observer). However, this does not say how human observers are distributed – are variations essentially random, or are people grouped into clusters defined by their color vision? In last year’s conference, a paper was presented which demonstrated that humans can be classified into one of seven well-defined color vision groups. This paper is a follow-on to that work, which attempts to discover if observers’ ability to detect small color differences depends on the group they belong to.

It turns out that it does, which opens up some interesting questions. Does it make sense to customize color difference equations and uniform color spaces to each category? Modern displays with their narrow-band primaries tend to exaggerate observer differences, so it might be a good time to explore more precise modeling of observer variation.

A Study on Spectral Response for Dichromatic Vision

Dichromats are people who suffer from a particular kind of color blindness; they only have two types of functional cones. Previous work has dealt with projections from 3D to 2D space but didn’t deal with spectral analysis; this work aims to remedy that. The study looked at three types of dichromats (each missing a different cone type), classified visible and lost spectra for each, and validated certain previous work.

Saliency as Compact Regions for Local Image Enhancement

The goal of this paper is to improve the subjective quality of photographs (taken by untrained photographers) by finding and enhancing their most salient (visually important) features.

It was previously found that people highly prefer images with high salience (prominence), where a region is highly distinct from its background. However, untrained photographers often capture images without salient regions. It would be desirable to find an automated way to increase salience, but salience is very difficult to predict for general images.

This paper sidesteps the problem by finding an easier-to-measure correlate – spatial compactness (a certain property is spatially compact if it is concentrated in a relatively small area). The idea is to look at the distribution of pixels with certain low-level attributes such as opponent hues, luminance, sharpness, etc. If the distribution is highly compact (peaked), then there is probably high saliency there and enhancing that attribute will make the photograph look better. There are a few additional tweaks (small objects are filtered out, and regions closer to the center of the screen are considered more important) but that is the gist. The enhancements they did are relatively modest (5-10% increase in the most salient attribute). The results were surprisingly strong: 91% of people preferred the modified image (which is quite an achievement in the field of automatic image enhancement).
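The summary above does not give the paper's exact compactness measure, so the following is only one plausible way to quantify it: treat a non-negative attribute map as a weight distribution over pixel positions and compute its spatial variance. A small variance means the attribute is concentrated in a small area. The function name and the test images are assumptions for illustration.

```python
import numpy as np

def spatial_spread(attr_map):
    """Weighted spatial variance of a non-negative 2D attribute map.

    Lower values mean the attribute is more spatially compact.
    """
    attr_map = np.asarray(attr_map, dtype=float)
    h, w = attr_map.shape
    ys, xs = np.mgrid[0:h, 0:w]
    weights = attr_map / attr_map.sum()          # assumes the map is not all zeros
    cy = (weights * ys).sum()                    # weighted centroid, row
    cx = (weights * xs).sum()                    # weighted centroid, column
    return float((weights * ((ys - cy) ** 2 + (xs - cx) ** 2)).sum())


# Attribute concentrated in a small central block vs. spread over the whole image.
compact = np.zeros((32, 32))
compact[14:18, 14:18] = 1.0
diffuse = np.ones((32, 32))

print(spatial_spread(compact) < spatial_spread(diffuse))
```

An enhancement pass in this spirit would compute such a score per attribute (hue, luminance, sharpness, and so on), pick the most compact one, and boost it by a few percent.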

The Perception of Chromatic Noise on Different Colors

Pixel size on CMOS sensors is steadily decreasing as pixel count increases, and appears set to continue doing so based on camera manufacturer roadmaps. This increases the likelihood of noisy images; noise reduction filters (e.g. bilateral filters) are becoming more important. Tuning these filters correctly depends on a good model for noise perception. Previous work has shown that the perception of chromatic noise (noise which does not vary luminance) depends on patch color; this study was done to further explore this and to attempt an explanation.

It was found that the perception of chromatic noise was weakest when the noise was added to a grey patch, and strongest when the noise was added to a purple, blue, or cyan patch. Orange, yellow and green patches were in the middle.

Further experiments implied that these differences could be due to the Helmholtz-Kohlrausch (H-K) effect, which causes chromatic stimuli of certain hues to appear brighter than white stimuli of the same luminance. Due to this effect, the chromatic noise on certain patches was partially perceived as brightness noise, which has higher spatial visual resolution.

Predicting Image Differences Based on Image-Difference Features

Image-difference measures are important for estimating (as a guide to reducing) distortions caused by various image processing algorithms. Many commonly used measures only take into account the lightness component, which makes them useless for applications such as gamut mapping where color distortions are critical. This paper takes a new approach, by combining many simple image-difference features (IDFs) in parallel (similar to how the human visual cortex works). The authors took a large starting set of IDFs, and (using a database of training images) isolated a combination of IDFs that best matched subjective assessments of image difference.

Comparing a Pair of Paired Comparison Experiments – Examining the Validity of Web-Based Psychophysics

Paired comparison experiments are fairly common in color science, but it is difficult to get enough observers. Some attempts have been made to do experiments over the web; this could greatly increase observer count, but has several issues (uncalibrated conditions, varying screen resolutions, applications like f.lux that vary color temperature as a function of time, etc.).

This paper describes a “meta-experiment” meant to determine the accuracy of web experiments vs. those conducted in a lab.

The correlation between web and lab experiments appears to be poor. That’s not to say that the data gained is not useful; when working on consumer applications, results are typically viewed in uncontrolled conditions. The web experiment performed for this paper ended up not having many participants and had a few other issues (bad presentation design, etc.).

The authors are now doing a second web experiment which has had a lot more participants and better correlation to the lab experiment. They hope to come back next year with a paper on why this second experiment was more successful.

Recent Development in Color Rendering Indices and Their Impacts in Viewing Graphic Printed Materials

Background information on color rendering indices can be found in the “Lighting: Characterization & Visual Quality” course description in my previous blog post. The current CIE standard (CIE-Ra) has several acknowledged faults (use of obsolete metrics such as the von Kries chromatic adaptation transform and the U*V*W* color space, low saturation of the test samples). A CIE technical committee (TC 1-69) was started in late 2006 to investigate methods that would work with new light sources including solid state/LED; this paper reports on the current status of their work.

There have been several proposals for color rendering indices. The current front-runner is based on the CAM02-UCS uniform color space (itself based on the CIECAM02 color appearance model). Various test sample sets were evaluated. The committee currently have a set of 273 samples primarily selected from the University of Leeds dataset (which contains over 100,000 measured reflectance spectra), and are working on reducing it to around 200. The color difference weighting method and scaling factors were also adjusted. Finally, the new index was compared with several others in a typical graphic art setting (common CMY ink set and 58 different D50-simulating lighting sources), and was found to perform well.

Memory Color Based Assessment of the Color Quality of White Light Sources

Although color rendering indices such as the one discussed in the previous paper are needed for professional applications where color fidelity is important, for home and retail lighting color fidelity is not necessarily the most desirable lighting property; instead, lights that make colors appear more “vibrant” or “natural” may be preferred. Recognizing this, very recently (July 2011) a new CIE technical committee (TC1-87) was formed to investigate an assessment index more suitable for home and retail applications.

Many such metrics have been proposed over the years, most of which use a Planckian or daylight illuminant as an optimal reference. However, some light sources produce more preferred color renditions than these reference illuminants. This paper focuses on an attempt to define color quality without the need for a reference illuminant.

The approach is based on “memory colors” – the colors that people remember for certain familiar objects. The theory is that if a light source renders familiar objects close to their memory colors, people will prefer it. Experiments were performed where the apparent color of 10 familiar objects was varied and observers selected the preferred color as well as the effect of varying the color (e.g., whether changing saturation relative to the preferred color is perceived as worse than changing the brightness, etc.). This data was fit to bivariate Gaussians in IPT color space to produce individual metrics for each object. The geometric mean of these was rescaled to a 0-100 range, with the F4 illuminant at 50 (which is also its score in the CIE-Ra metric) and D65 at 90 (D65 is a reference illuminant in CIE-Ra, but was found to be non-optimal for memory color rendition).
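A minimal sketch of how such a metric could be assembled, under my own assumptions: each object's similarity function is an unnormalized bivariate Gaussian over the two chromatic coordinates, peaking at 1 at the memory color, and the per-object scores are combined with a geometric mean. The means and covariances below are made up, and the final rescaling to the 0-100 range is left out because its exact form is not described above.

```python
import numpy as np

def memory_similarity(chroma_pt, mean, cov):
    """Unnormalized bivariate Gaussian: 1.0 at the memory color, falling off around it."""
    d = np.asarray(chroma_pt, dtype=float) - np.asarray(mean, dtype=float)
    return float(np.exp(-0.5 * d @ np.linalg.inv(cov) @ d))

def geometric_mean(values):
    """Geometric mean of strictly positive scores."""
    return float(np.exp(np.mean(np.log(values))))


# Hypothetical fitted parameters for two familiar objects (not real data).
objects = [
    {"mean": [0.10, 0.25], "cov": np.array([[0.004, 0.001], [0.001, 0.006]])},
    {"mean": [-0.15, 0.05], "cov": np.array([[0.003, 0.000], [0.000, 0.003]])},
]
# Hypothetical chromaticities of those objects as rendered by a test light source.
rendered = [[0.12, 0.22], [-0.13, 0.06]]

scores = [memory_similarity(p, o["mean"], o["cov"]) for p, o in zip(rendered, objects)]
overall = geometric_mean(scores)
```

The geometric mean penalizes a light source that renders even one object far from its memory color, since a single near-zero score drags the product down.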

The authors did a large study to validate the new metric and found that it matched observers’ judgments of visual appreciation better than the other metrics. For future work, they are planning to study how cultural differences affect memory colors.

Appearance Degradation and Chromatic Shift in Energy-Efficient Lighting Devices

During the next few years, many countries will mandate replacement of the incandescent lamp technology which has served humanity’s lighting needs faithfully since 1879. Incandescent lamps, being blackbody radiators, appear “natural” to consumers – they have very good color rendering and remain on the Planckian locus over their lifetime (albeit shifted in color temperature). The general CIE color rendering index (CIE-Ra) is not a sufficient metric – the speaker showed three lights, all with CIE-Ra of 85 and correlated color temperature (CCT) of 3000K; they didn’t look alike at all.

Most consumers inherently recognize the difference between incandescent and energy-efficient lamps. The lighting from the latter just doesn’t “look natural” to them. When asking focus groups about important lighting considerations, they first mention appearance issues: color quality (color rendering), color temperature (warm, normal, or cool white), form factor (shaped like a bulb, a tube, or other), dimmability (will a household triac dimmer work with it?), and glare. Appearance issues are followed by efficiency, brightness, lifetime, environmental friendliness and instant on/off.

The two types of energy efficient lights in common use today are compact fluorescent lamps (CFL) and light emitting diode (LED). In CFLs, a mercury vapor UV light excites phosphors, which emit light in the visible spectrum. White light LEDs have a blue LED which excites phosphors. Both of these light types are characterized by two-stage energy conversion. There are other energy-efficient lighting devices (HIR, HID, OLED, hybrids), but these are not practical for residential lighting.

Since both LEDs and CFL phosphors are operated at high energy densities, heat causes them to degrade over time. Since white is obtained via multiple phosphors, the differential degradation (between phosphors or between phosphors and LED) causes a chromatic shift during usage.

The authors measured aging for all three types of lamp over 5000 hours. The incandescent barely changed. The CFL had some phosphor types degrade a lot & others somewhat, causing a shift toward green. The LED lamp had huge degradation in the phosphors and almost none in the blue LED – light comes from both so the color shifted quite a bit towards blue. Both energy-efficient lamps started with bad color rendition (CRI) and it got a lot worse; luminous efficacy (lumens/Watt) also decreased.

In theory, UV sources combined with trichromatic phosphors that age uniformly could solve the problem, but that challenge has not yet been solved. Emerging energy-efficient lamp types (ESL and others) are supposed to help but aren’t ready yet, which is worrying since the transition has already started.

During Q&A, the speaker stated that he doesn’t think color rendering indices are useful at all; instead he uses color rendering maps that show the color rendering for various points on the color gamut simultaneously. These color rendering maps can show which colors are most affected. Since computers are now fast enough to compute such a map in less than a second, why use a single number? Also, the CIE CRI in common use is overly permissive – it will give high scores to some pretty bad-looking illuminants. Of course, for this very reason the light manufacturers will fight against changing it.

Meta-Standards for Color Rendering Metrics and Implications for Sample Spectral Sets

Like the previously presented paper “Recent Development in Color Rendering Indices and Their Impacts in Viewing Graphic Printed Materials”, this is also a report on the work done by the TC-69 CIE technical committee working on proposals for a new standard color rendering index, but by a different subcommittee. Neither paper appears to represent a consensus; presumably one of these approaches (or a different one) will eventually be selected.

In a recent meeting, the technical committee recommended selecting a reflectance sample set for the new CRI that is simple and as “real” as possible. This paper will talk about potential “meta-standards” by which to select the new CRI standard, and what this means for the reflectance sample set.

Their approach is based on the idea that the CRI should be equally sensitive to perturbations in light spectra regardless of where in the visible spectrum the perturbation occurs. This implies that the average curvature of the reflectance sample set should be uniform, since an area with higher curvature will be more sensitive to perturbations in light spectra. The average curvature of the 8 current CRI samples is very non-uniform, unsurprising due to the low sample count.
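The uniformity criterion can be sketched in a few lines of Python. This is only an illustration, not the authors' actual metric: it assumes "curvature" means the absolute second finite difference of each reflectance spectrum (sampled at uniformly spaced wavelengths), and the names `average_curvature` and `uniformity` are hypothetical.

```python
def average_curvature(spectra):
    """Mean absolute second difference at each interior wavelength index.

    `spectra` is a list of equal-length reflectance lists, all sampled at
    the same uniformly spaced wavelengths. Using the second finite
    difference as the curvature measure is an assumption of this sketch.
    """
    n = len(spectra[0])
    totals = [0.0] * (n - 2)
    for s in spectra:
        for i in range(1, n - 1):
            totals[i - 1] += abs(s[i - 1] - 2.0 * s[i] + s[i + 1])
    return [t / len(spectra) for t in totals]


def uniformity(curvature):
    """Ratio of smallest to largest average curvature (1.0 = uniform)."""
    largest = max(curvature)
    return min(curvature) / largest if largest > 0 else 1.0
```

A sample set whose spectral features cluster in one wavelength band produces a peaked curvature profile and a low `uniformity` value, which is the situation the paper describes for the 8 current CRI samples.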

At first they tried to select 1000 samples from the University of Leeds sample set (which includes over 100,000 reflectance spectra). The samples were picked to be roughly equally spaced throughout the color set. The average curvature was still highly non-uniform, since many of the materials share the same small set of basic dyes and pigments. Generating completely random synthetic spectra would solve this problem, but then there would be no guarantee that they would be “natural” in the sense of having similar spectral features and frequency distributions. The authors decided to go for a “hybrid” solution where segments of reflectance spectra from the Leeds database were “stitched” together and shifted up or down in wavelength. This resulted in a set of 1000 samples with a much smoother curvature distribution while keeping the “natural” nature of the individual spectra.

1000 samples may be too many for some applications, so the authors attempted to generate a much smaller set of 17 mathematically regular spectra which yield similar results to the set of 1000 hybrid samples. The subcommittee is proposing this set (named “HL17”) to the full technical committee for consideration.

Image Fusion for Optimizing Gamut Mapping

There are various methods for mapping colors from one gamut to another (typically reduced) gamut. Each method works well in some circumstances and less well in others. Previous work applied different gamut mapping algorithms to an image and automatically selected the one that generated the best image based on some quality measure. The authors of this paper tried to see if this can be done locally – if different parts of the same image can be productively processed with different gamut mapping algorithms, and if this produces better results than using the same algorithm for the whole image.

Their approach involved mapping the original with every gamut mapping algorithm in the set, and generating structural similarity maps for each algorithm. This was followed by generation of an index map for the highest similarity at each pixel. Each pixel was mapped with the best algorithm, and the results were fused into one image.
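The index-map step can be sketched as follows. This is a minimal illustration of the simple per-pixel variant only, assuming the gamut-mapped images and their similarity maps have already been computed. The function name `fuse` and the nested-list image layout are hypothetical, and the similarity measure itself is not shown.

```python
def fuse(mapped_images, similarity_maps):
    """Per-pixel fusion of several gamut-mapped versions of one image.

    mapped_images[k][y][x]   -> pixel value produced by algorithm k
    similarity_maps[k][y][x] -> similarity of that result to the original
    Returns (index_map, fused_image).
    """
    height = len(mapped_images[0])
    width = len(mapped_images[0][0])
    index_map, fused = [], []
    for y in range(height):
        index_row, fused_row = [], []
        for x in range(width):
            # Pick the algorithm with the highest similarity at this pixel.
            best = max(range(len(mapped_images)),
                       key=lambda k: similarity_maps[k][y][x])
            index_row.append(best)
            fused_row.append(mapped_images[best][y][x])
        index_map.append(index_row)
        fused.append(fused_row)
    return index_map, fused
```

Because each pixel is chosen independently, neighboring pixels can come from different algorithms, which is one plausible source of visible seams in the fused image.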

Simple pixel-based fusion results in artifacts, so the authors tried segmentation and bilateral filtering. Bilateral fusion ended up producing the best results, then segmented fusion, followed by picking the best overall algorithm for each image, and finally the individual algorithms. So the fusion approach was promising in terms of visual quality, but computation costs are high. They plan to improve this work as well as applying it to other imaging problems like locally optimized image enhancement and tone mapping operators.

Image-Adaptive Color Super-Resolution

The problem is to take multiple low-resolution images and estimate a high-resolution image. There has been work in this area, but challenges remain, especially correct handling of color images. The authors treated this as an optimization problem with simple constraints (individual pixel values must lie in the 0 to 1 range, warping and blurring must preserve energy over the image, as well as some assumptions on the possible properties of blurring and warping). They add a novel chrominance regularization term to handle color edges properly. The results shown appear to be better than those achieved by previous work.

Two-Field Color Sequential Display

Color-sequential displays mix primaries in time rather than in space as most displays do. Since the color filters are removed (replaced by a flashing red-green-blue backlight), the power efficiency is increased by a factor of three. However, very high frame rates are needed (problematic with LCD displays) and the technique is prone to color “breakup” artifacts.

This paper proposes a display composed of two temporal fields instead of three, to reduce flicker. Optimal pairs of backlight colors are found for each screen block to reduce color “breakup”. This is implemented via an LCD system with local RGB LED backlights. The authors built a demonstration system and experimented with various images. Most natural images are OK, but some man-made objects look bad. The number of segments can be increased, reducing the errors but not eliminating them. They were able to achieve reasonable results with 576 blocks.

Efficient Computation of Display Gamut Volumes in Perceptual Spaces

This paper discussed fast methods to compute gamut volume – the motivation is for use in optimizing display primaries (three or more). I’m not sure how important it is to do this fast, but that is the problem they chose to solve.

Computing gamut volume of three-primary displays in additive spaces is very easy (just the magnitude of the determinant of the primary matrix). The authors want to compute the gamut volume in CIELAB space, which is more perceptually uniform but has non-linearities which complicate volume computation. They found a way to refactor the math into a relatively simple form based on certain assumptions on the properties of the perceptual space. For three-primary displays in CIELAB this reduces to a simple closed-form expression.
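For the additive case, the determinant computation is short enough to show directly. This is a sketch under stated assumptions: the gamut is the parallelepiped spanned by the three primaries in XYZ, the function names are hypothetical, and the example values are the commonly quoted sRGB primaries (D65 white) rather than anything taken from the paper.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def gamut_volume_xyz(primaries):
    """Volume of the parallelepiped spanned by three primaries.

    Each row of `primaries` is the XYZ tristimulus value of one primary
    at full drive. The result is in XYZ units cubed, so it is not a
    perceptual measure.
    """
    return abs(det3(primaries))


# Commonly quoted XYZ values of the sRGB red, green and blue primaries.
SRGB_PRIMARIES_XYZ = [
    [0.4124, 0.2126, 0.0193],  # red
    [0.3576, 0.7152, 0.1192],  # green
    [0.1805, 0.0722, 0.9505],  # blue
]

srgb_volume = gamut_volume_xyz(SRGB_PRIMARIES_XYZ)
```

The absolute value makes the result independent of the order in which the primaries are listed. The same shortcut does not carry over to CIELAB, because the XYZ-to-CIELAB transform is non-linear.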

Computing gamut volume for multi-primary displays is more complex. The authors represent the gamut as a tessellation of parallelepipeds. To determine the total volume in CIELAB space they solve the problem numerically, using an approach similar to a Taylor series expansion.

Appearance-Based Primary Design for Displays

LED-backlit LCD displays have recently entered the market. They have many advantages over traditional LCD displays: higher dynamic range, high frame rate, wider color gamut, thinner, more environmentally friendly, etc. There are two main types of such displays. RGB-LED-based LCD displays can potentially deliver more saturated primaries (and thus wider color gamuts) due to the narrow spectral width of the LEDs used, while white-LED-based LCD displays might provide high brightness and contrast but smaller gamuts by using high efficiency LEDs in combination with the LCD-panel RGB filters.

The choice between the two is primarily a tradeoff between saturation and brightness. However, the two are linked due to the Hunt effect, which causes perceived colorfulness to increase with luminance. The Stevens effect (perceived contrast increases with brightness) is also relevant. Could these effects lead to a win-win (increased perceived saturation and contrast, as well as actual brightness) even if actual saturation is sacrificed?

The authors investigated two possible designs. One adds a white LED to an RGB LED backlight (RGBW LED backlight). The other keeps the RGB LED backlight, and adds a white subpixel to the LCD (RGBW LCD). The RGBW LED backlight design proved to work best, with an increased white up to 40% providing increased colorfulness as well as brightness. The RGBW LCD white-subpixel design always decreased perceived colorfulness regardless of the amount.

This was determined via a paired comparison experiment. It is interesting to note that neither CIELAB nor CIECAM02 models predicted the result for the RGBW LED backlight – CIELAB predicted that colorfulness would decrease, while CIECAM02 predicted it would increase but not by the right amount. In the case of the RGBW LCD subpixel design, both CIELAB and CIECAM02 predicted the results.

HDR Video – Capturing and Displaying Dynamic Real World Lighting

This paper (by Alan Chalmers, WMG, University of Warwick) described the HDR video pipeline under development at the University of Warwick. It includes a Spheron HDRv camera (capable of capturing 20 f-stops of exposure at full HD resolution and 30 fps), NukeX and custom dynamic IBL (image-based lighting) software for post-production, various HDR displays (including a 2×2 “wall” of Brightside DR37-P HDR displays), and a specialized HDR video compression algorithm (for which they have spun off a company, goHDR).

Prof. Chalmers made the case that the 16 f-stops that traditional film can acquire are not sufficient, and showed various examples where capturing 20 f-stops produced better results. He also discussed the recently begun European Union COST (Cooperation in Science and Technology) Action IC1005-7251 “HDRi” which focuses on coordinating European HDR activity and proposing new standards for the HDR pipeline.

High Dynamic Range Displays and Low Vision

This paper was presented by Prof. James Ferwerda from the Munsell Color Science Lab at the Rochester Institute of Technology. Low vision is the preferred term for visual impairment. It is defined as the uncorrectable loss of visual function (such as acuity and visual fields). Low vision (caused by trauma, aging, and disease) affects 10 million people in the USA, and 135 million people worldwide.

HDR imaging offers new opportunities for understanding low vision. This paper describes two projects: simulating low vision in HDR scenes, and using HDR displays to test low vision.

The framework behind tone reproduction operators (which simulate on an LDR display what an observer would have seen in the HDR scene) can be adapted to simulate an impaired scene observer instead of a normal-vision one. Aging effects (such as increased bloom and slower adaptation) can also be simulated.

The importance of using HDR displays to test vision comes from the fact that people with low vision have problems in extreme (light, dark) lighting situations, such as excessive glare or adaptation issues. In addition, there are theories that changes in adaptation time can be good early predictors of retinal disease. However, standard vision tests use moderate light levels so they are not capable of identifying adaptation or other extreme-lighting-induced issues.

Before experiments could be started on the use of HDR displays for vision testing, the NIH (very reasonably) wanted to ensure that these displays could not cause any damage to the test subjects’ vision. Damage caused by light is called “phototoxicity” and can be related to either extremely high light levels in general, or more moderate levels of UV or even blue light. Blue light has recently been identified as a hazard, especially to people with retinal disease. The International Commission on Non-Ionizing Radiation Protection (ICNIRP) has established guidelines for safe light exposure levels, including blue light.

The authors estimated the phototoxicity potential of HDR displays, using the Brightside/Dolby DR37-P as a test case. At maximum brightness, they computed the amount of light which would reach the retina, with the ICNIRP “blue light hazard” spectral filter applied. The result was 4 micro-Watts; since the ICNIRP limit for unrestricted viewing is 200 micro-Watts of blue light, there appears to be no phototoxicity issue with HDR displays. Another way of looking at this: to reach the ICNIRP limit, the display would have to produce the same luminance as a white paper in bright sunlight: 165,000 cd/m² (for comparison, the DR37-P peak white is about 3000 cd/m² and the current Dolby HDR monitor – the PRM-4200 – peaks at 600 cd/m²).
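As a rough sanity check on these figures, the sketch below scales the display's peak luminance by the safety margin. It assumes that blue-light power at the retina grows linearly with luminance when the display spectrum stays fixed, and it uses only the rounded numbers quoted above. The function name is hypothetical.

```python
def luminance_at_limit(peak_luminance, measured_power, limit_power):
    """Luminance at which a display would reach the exposure limit.

    Assumes retinal blue-light power is proportional to luminance,
    i.e. the spectrum of the display does not change with brightness.
    """
    return peak_luminance * (limit_power / measured_power)


# Rounded values from the text: ~3000 cd/m^2 peak, 4 uW measured, 200 uW limit.
margin = 200 / 4
estimate = luminance_at_limit(3000, 4, 200)
```

With these inputs the margin is a factor of 50 and the estimate is 150,000 cd/m², the same order of magnitude as the 165,000 cd/m² quoted. The gap is presumably down to rounding in the quoted values.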

Appearance at the Low-Radiance End of HDR Vision: Achromatic & Chromatic

This paper (by John J. McCann, McCann Imaging) studies how human vision works at the low end, close to the absolute threshold of visibility. In particular, does spatial processing change? There are a lot of physiological differences between rods and cones – spatial distribution, wiring, etc., so it might be expected that spatial processing would differ between scotopic and photopic vision. A series of achromatic tests designed to demonstrate various features of spatial vision processing were run in extreme low-light conditions. The result was exactly the same as in normal light conditions – it appears that spatial processing does not change.

The authors also did experiments with low-light color vision. Although rods by themselves cannot see color (which requires at least two different detector types with distinct spectral sensitivity curves), they can be used for color vision when combined with at least one cone type. In particular, light which has enough red to activate the L cones (but not S or M) and enough light in the right wavelengths to activate the rods will enable dichromatic color vision using the rods and L cones (firelight, a 2000 K blackbody radiator, has the best balance of spectral light for this). This enabled comparing the spatial component of color vision in low-light and normal-light conditions. As before, the observers saw all the same effects, showing that spatial processing was the same in both cases.

Hiding Patterns with Daylight Fluorescent Inks

This paper describes the use of daylight fluorescent inks (which absorb blue & UV light and emit green light, in addition to reflecting light as normal inks do) to create patterns inside arbitrary images which are invisible under normal daylight but appear with other illuminants.