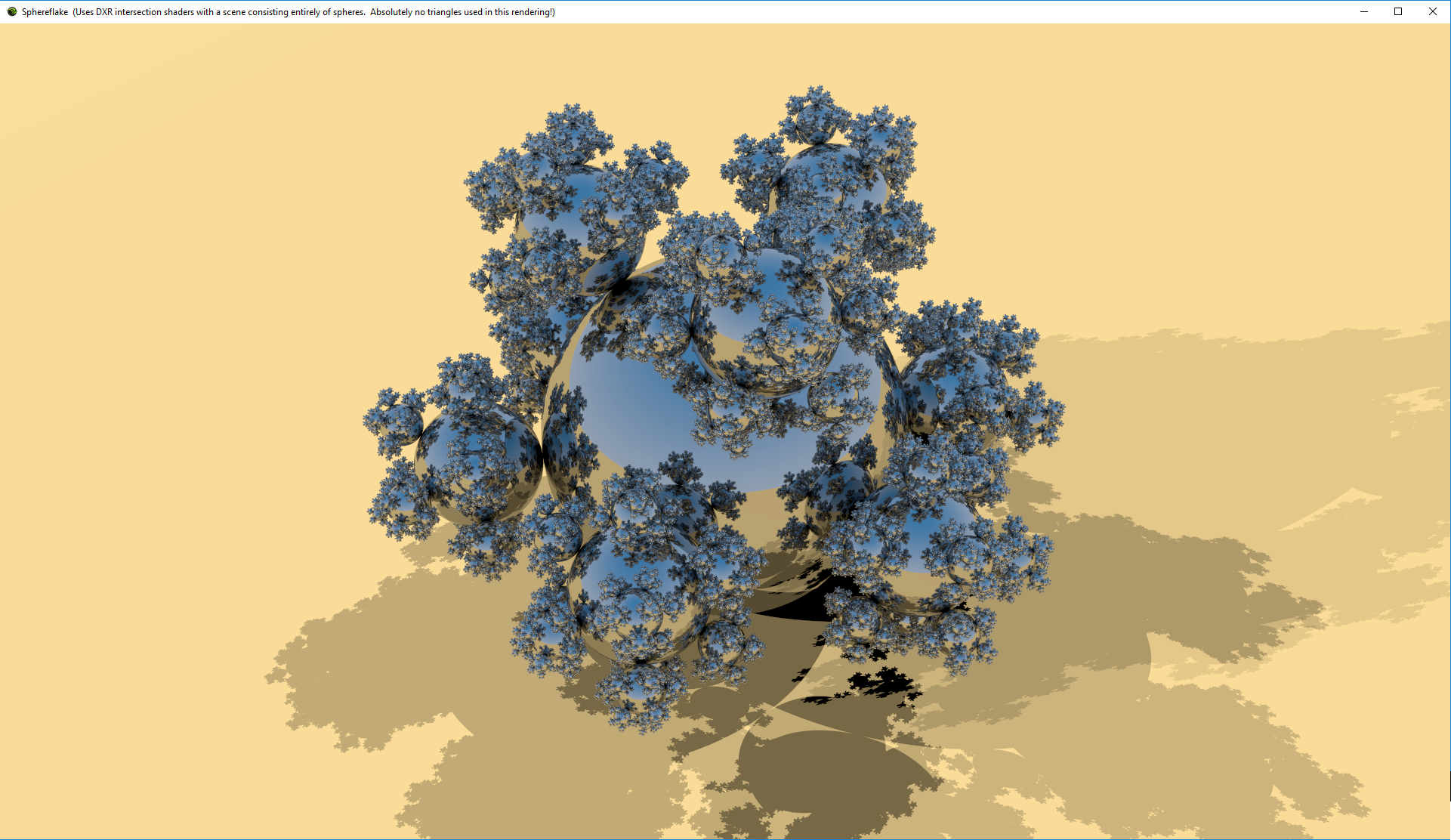

This last week I’ve been working a bit on stuffing Sphereflake into Chris Wyman’s sphere intersection demo for DXR. See my gallery of real-time ray tracing experiments for eye candy, statistics, and commentary.

This is my first DXR program (really, just a modification of Chris’s), and two things impressed me:

- Sheer speed and size of what can be rendered in real time now vs. 32 years ago: 60 FPS vs. 60+ minutes per frame (1/60 of a second vs. 3,600 seconds per frame, a ~216,000x speedup there alone), 48 million spheres vs. 7k spheres, 1920 x 1110 vs. 512 x 512. And this is on a currently available GPU that will be considerably surpassed in ten days with the release of the RTX 2080 Ti and friends.

- Programmability: add depth of field? Just jitter the eye ray’s origin. Want soft shadows? Jitter the shadow ray directions. Adding soft shadows took me about 15 minutes this morning, as a birthday treat to myself.
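Both tricks from the list above fit in a few lines. The sketch below is in Python rather than the HLSL a DXR shader would use, and everything in it is an assumption for illustration, not code from Chris's demo: the helper names, the thin-lens camera model (jitter the origin over a lens disk, then aim at the point the pinhole ray would hit at the focal distance), and the spherical light that shadow rays aim into.

```python
import math
import random

# Minimal 3-vector helpers on plain tuples (x, y, z).
def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def sample_unit_disk(rng):
    """Uniform point in the unit disk, by rejection sampling the enclosing square."""
    while True:
        x, y = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            return x, y

def sample_unit_ball(rng):
    """Uniform point inside the unit sphere, by rejection sampling the enclosing cube."""
    while True:
        p = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        if dot(p, p) <= 1.0:
            return p

def depth_of_field_ray(eye, pinhole_dir, cam_u, cam_v, aperture_radius, focal_distance, rng):
    """Hypothetical thin-lens depth of field: jitter the eye ray's origin across
    the lens disk, then aim it at the point the unjittered (pinhole) ray reaches
    at focal_distance. pinhole_dir, cam_u and cam_v are assumed to be unit length
    and mutually orthogonal."""
    focal_point = add(eye, scale(pinhole_dir, focal_distance))
    dx, dy = sample_unit_disk(rng)
    offset = add(scale(cam_u, dx * aperture_radius), scale(cam_v, dy * aperture_radius))
    origin = add(eye, offset)
    return origin, normalize(sub(focal_point, origin))

def soft_shadow_ray(hit_point, light_center, light_radius, rng):
    """Hypothetical soft shadows: instead of aiming every shadow ray at the
    light's center, aim it at a random point inside a spherical light.
    Returns the unit direction and the distance to that point (the ray's max t)."""
    target = add(light_center, scale(sample_unit_ball(rng), light_radius))
    to_light = sub(target, hit_point)
    distance = math.sqrt(dot(to_light, to_light))
    return scale(to_light, 1.0 / distance), distance

# Usage: one jittered eye ray and one jittered shadow ray.
rng = random.Random(2018)
eye_origin, eye_dir = depth_of_field_ray(
    eye=(0.0, 0.0, 0.0), pinhole_dir=(0.0, 0.0, -1.0),
    cam_u=(1.0, 0.0, 0.0), cam_v=(0.0, 1.0, 0.0),
    aperture_radius=0.05, focal_distance=10.0, rng=rng)
shadow_dir, shadow_tmax = soft_shadow_ray((0.0, 0.0, -10.0), (0.0, 5.0, -10.0), 1.0, rng)
```

Averaged over many frames, all of a pixel's jittered eye rays pass through the same focal point, so geometry at the focal distance stays sharp while nearer and farther objects blur. Likewise, a surface point that can see only part of the light ends up with a fractional, soft-edged shadow.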

In all fairness, depth of field, soft shadows, and whatnot are noisy, since each frame initially casts just a single ray per pixel. I don’t use denoising, which is critical for acceptable real-time ray tracing whenever such effects are used. The images I show are what I see after about a minute of accumulating frames (or whenever I happened to do the screen capture; after a few seconds things usually looked good).
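The post doesn't say exactly how frames are combined into the cleaner image. A common approach for progressive rendering, assumed here, is a per-pixel running mean of the one-ray-per-pixel frames. The Python sketch below shows that update; `one_spp_pixel` is a hypothetical stand-in for a real lens or shadow sample, where each ray either reaches the light or doesn't.

```python
import random

def accumulate(average, sample, frame_index):
    """Fold one new sample into a running mean; frame_index counts from 0.
    Equivalent to (sum of all samples so far) / (frame_index + 1), without storing the sum."""
    return average + (sample - average) / (frame_index + 1)

def accumulate_image(average_image, sample_image, frame_index):
    """Apply accumulate() per pixel to grayscale images stored as lists of rows."""
    return [
        [accumulate(a, s, frame_index) for a, s in zip(avg_row, sample_row)]
        for avg_row, sample_row in zip(average_image, sample_image)
    ]

def one_spp_pixel(rng, true_value=0.3):
    """Hypothetical 1-ray-per-pixel estimate: the ray either reaches the light
    (1.0) or does not (0.0), so any single frame is pure noise."""
    return 1.0 if rng.random() < true_value else 0.0

rng = random.Random(0)
width, height = 4, 3
average = [[0.0] * width for _ in range(height)]
for frame_index in range(2000):
    frame = [[one_spp_pixel(rng) for _ in range(width)] for _ in range(height)]
    average = accumulate_image(average, frame, frame_index)
# Each pixel of `average` now sits close to 0.3. If the camera or scene changes,
# accumulation has to restart at frame_index 0, or old samples smear into the new view.
```

The incremental form keeps only the current average per pixel, which is why a few seconds of frames already looks good while a minute looks better: the noise in the mean shrinks roughly with the square root of the frame count.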

All that said, playing with the renderer is a lot of fun now. Add some path tracer functionality here or there and you have a new effect – no hours of hacky “rasterize and then do some funky post-processing effects.” I see this as a huge boon to CAD and pre-visualization packages that want to quickly add new effects or have users rapidly try out variants. It’s dangerously addictive, as I now want to add glossy reflection…