One regret Tomas and I have about the back cover of “Real-Time Rendering, 4th Ed.” is not going with this initial response: “‘The second-best book on rendering there is.’ — Matt Pharr”; would have been hilarious.

Author Archives: Eric

Print Quality

So, I don’t think my work on Real-Time Rendering, 4th Edition will ever be done, even though the thing’s published…

First, yes, we also were unhappy with how some of the images printed in the first printing of the book. And yes, I’m happy to report the second printing is better quality; we wish the first had been, as well, and we are all sorry it wasn’t. The main sources of problems are atoms and the process. There’s a limit to how thick a book can be and not have its spine break, or be readable without crushing your chest. The publisher pushed up the thickness on the second printing, which improved the readability of some figures (I’m ordering one now, after having seen it, so I can compare). Fingers crossed that the binding holds up. It’s why we published two chapters and two appendices online – a 1350+ page book is basically unprintable, let alone readable.

All diagrams and some of the images (those we have rights or permission for) are available for viewing and download on our website, about 2/3rds in total, and I added more today. If we are missing any that would help you understand the text better, please let me know and I’ll see if I can clear rights for them to be redisplayed there – my email’s on the website at the bottom. Just as important, we hope to provide slightly-improved images to the publisher for the far-distant day when a third printing might be made.

Which edition do you have? I think you can tell by thickness, with first printing being about 4.8 cm (1-7/8″) thick overall, and second printing being around 5.4 cm (2-1/8″), maybe more.

I was interested to read that Matt Pharr had the same problems with the latest edition of “Physically Based Rendering.” He writes:

It was a terrible feeling, having put endless hours into the book, putting in all of our own best efforts to make the very best thing we could, and then having something awful being put in readers’ hands. We had no control of all sorts of details beyond the text itself

Yup, I can relate. The last thing we see is the print-ready PDF, and after that it’s hope for the best. At least no sections of the book were deleted, duplicated, printed upside-down, or in inverse colors.

I see about 18 out of 676 figures that are noisier or darker or have less contrast than I would like. I am interested in which figures you find difficult to understand. Personally, I’d love it if the electronic version and paper version were “bound together” and always came as a set – the electronic version, though not as high-resolution as I’d like (unsurprisingly, Amazon didn’t want to provide the 2.2 GB PDF that we get when we compile the book ourselves), does have more readable figures.

My personal take-aways are two: don’t try to print images showing noise, they’ll just about never print well, and don’t try to print images that are somewhat dark, they’ll always seem to get darker when printed. The first is a new lesson learned, the second is one I’ve seen in previous editions but always hold out the hope that it’ll be better this time around.

The Book “Physically Based Rendering” is Now Free

No, I’m not kidding; yes, it’s the 3rd edition. See the announcement (an interesting read – I loved the first cover proposal), or the front page, or jump right to the table of contents. HTML only for now, though there is an ancient first draft available, but free is pretty great.

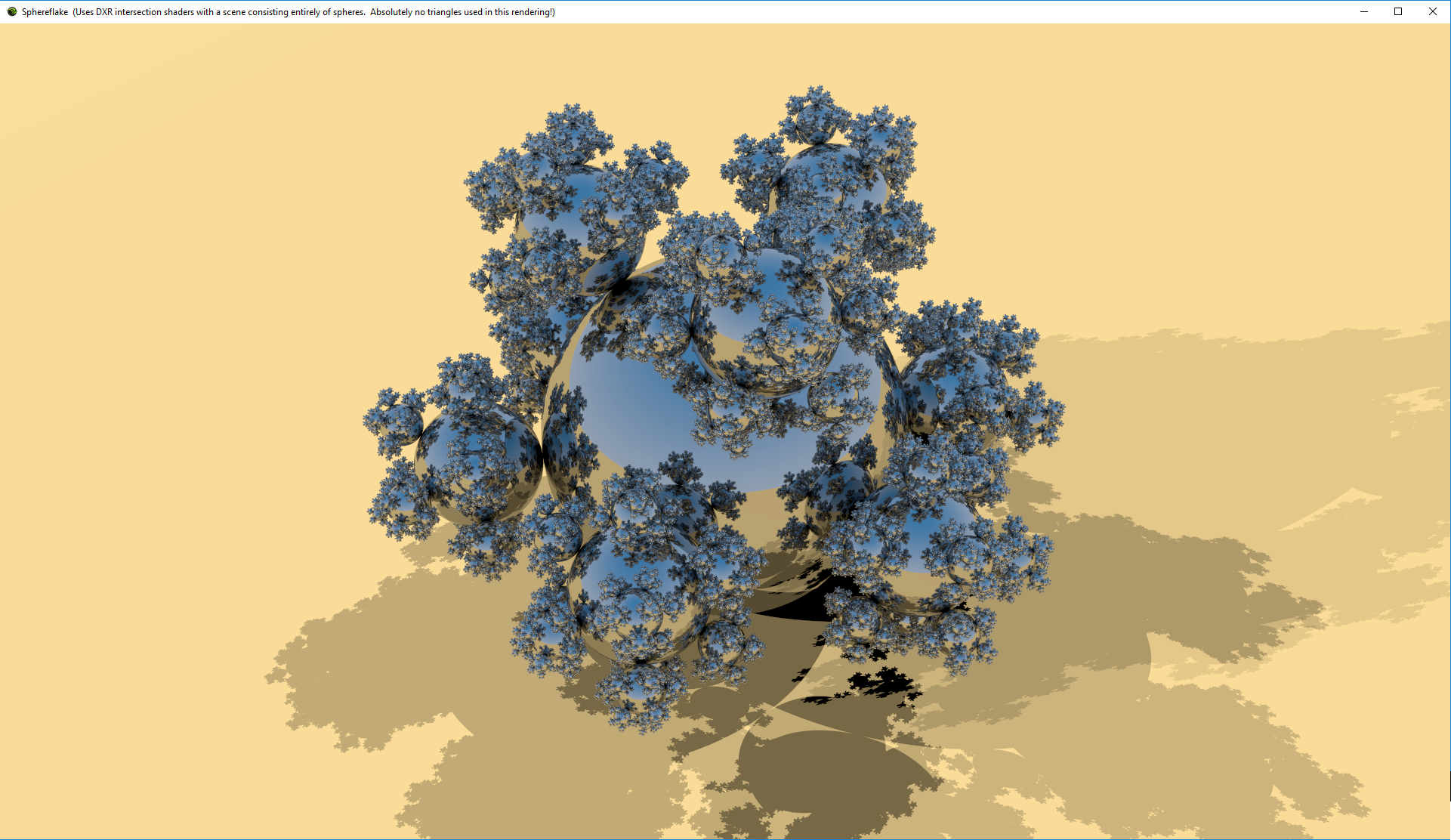

Sphereflakes For Evah

Just can’t get enough about real-time ray-traced Sphereflakes? I wrote an official-looking NVIDIA blog post that’s an extended-play version of my previous blog post.

The code’s here, but can only be run if you have a Titan V or new RTX card, and if you haven’t installed the Windows 1809 October update.

[I was told “evar” looked like a misspelling, so I’m fixing it this time.]

Best Birthday Evar

This last week I’ve been working a bit on stuffing Sphereflake into Chris Wyman’s sphere intersection demo for DXR. See my gallery of real-time ray tracing experiments for eye candy, statistics, and commentary.

This is my first DXR program (really, just a modification of Chris’s), and two things impressed me:

- Sheer speed and size of what can be rendered in real-time now vs. 32 years ago: 60 FPS vs. 60+ minutes per frame (~216Kx speedup there alone), 48 million spheres vs. 7k spheres, 1920 x 1110 vs. 512 x 512. And this is on a currently-available GPU that will be considerably surpassed in ten days with the release of the RTX 2080 Ti and friends.

- Programmability: add depth of field? Just jitter the eye ray’s origin. Want soft shadows? Jitter the shadow ray directions. Adding soft shadows took me about 15 minutes this morning, as a birthday treat to myself.
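
The two jitters described above can be sketched in a few lines. This is a minimal CPU-side illustration in Python, not the DXR shader code from the demo; the function names, the thin-lens model, and the spherical light are all assumptions made for the example.

```python
import math
import random

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def sample_disk(radius, rng):
    """Uniformly sample a point on a disk of the given radius."""
    r = radius * math.sqrt(rng.random())
    phi = 2.0 * math.pi * rng.random()
    return r * math.cos(phi), r * math.sin(phi)

def depth_of_field_ray(eye, direction, right, up, lens_radius, focus_dist, rng):
    """Jitter the eye ray's origin across a lens, re-aiming at the focal point.

    Assumes a thin-lens camera: points at focus_dist along the original ray
    stay sharp, everything else blurs as the origin moves.
    """
    d = normalize(direction)
    focal_point = tuple(e + focus_dist * c for e, c in zip(eye, d))
    dx, dy = sample_disk(lens_radius, rng)
    origin = tuple(e + dx * r + dy * u for e, r, u in zip(eye, right, up))
    new_dir = normalize(tuple(f - o for f, o in zip(focal_point, origin)))
    return origin, new_dir

def soft_shadow_direction(hit_point, light_center, light_radius, rng):
    """Jitter the shadow ray by aiming at a random point inside a spherical light."""
    while True:  # rejection-sample a point in the unit ball
        offset = tuple(rng.uniform(-1.0, 1.0) for _ in range(3))
        if sum(c * c for c in offset) <= 1.0:
            break
    target = tuple(l + light_radius * o for l, o in zip(light_center, offset))
    return normalize(tuple(t - h for t, h in zip(target, hit_point)))
```

With `lens_radius` or `light_radius` set to zero, both functions fall back to the usual pinhole eye ray and hard-shadow ray, which is why these effects are so cheap to bolt on.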

In all fairness, depth of field and soft shadows and whatnot are noisy, since I initially cast a single ray per pixel. I don’t use denoising, which is something that’s critical for acceptable real-time ray tracing performance whenever such effects are used. The images I show are what I see after a minute (or whenever I happened to do the screen capture; after a few seconds things usually looked good).
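
Why do the images clean up after a few seconds? One plausible reading, which the post implies but does not state, is that the demo averages successive noisy frames while the view is still. A minimal sketch of that running average, with a hypothetical `render_pixel` standing in for one single-ray estimate:

```python
import random

def accumulate(mean, sample, n):
    """Fold the n-th sample (1-based) into a running mean."""
    return mean + (sample - mean) / n

def render_pixel(true_value, noise, rng):
    """Hypothetical stand-in for one noisy single-ray estimate of a pixel."""
    return true_value + rng.uniform(-noise, noise)

def converge(true_value, noise, frames, seed=0):
    """Average `frames` noisy estimates of one pixel and return the result."""
    rng = random.Random(seed)
    mean = 0.0
    for n in range(1, frames + 1):
        mean = accumulate(mean, render_pixel(true_value, noise, rng), n)
    return mean
```

At 60 FPS a minute of accumulation is 3,600 samples per pixel, and the error of such an average shrinks roughly with the square root of the sample count.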

All that said, playing with the renderer is a lot of fun now. Add some path tracer functionality here or there and you have a new effect – no hours of hacky “rasterize and then do some funky post-processing effects.” I see this as a huge boon to CAD and pre-visualization packages that want to quickly add new effects or have users rapidly try out variants. It’s dangerously addictive, as I now want to add glossy reflection…

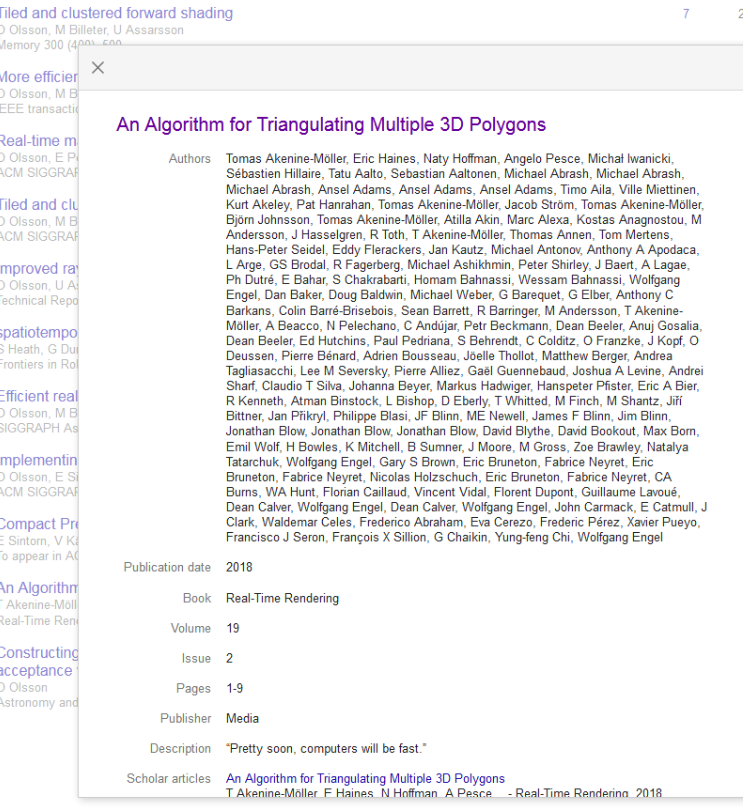

It Takes a Village…

It clearly takes a village to write the book Real-Time Rendering. Ola Olsson pointed out this entertaining bit on Google Scholar:

The fourth edition appears to be Volume 19 Issue 2. The article mentioned does exist, but is the very last reference in the book (#1978). The number of authors on the paper is impressive, quite an increase from the original three for this article. Ansel Adams, among others, gets listed three times as an author – excellent CV padding. My favorite, though, is the description of the article, a quote by Billy Zelsnack used at the beginning of our chapter “The Future.”

I poked around a bit more and found some alternate reality listings, such as this:

In both 4th editions our new three authors don’t show up. More disturbing is that in one universe’s edition Tomas Akenine-Möller also no longer exists (sad, since he’s listed six times as an author in the first image). And a strange universe it is, where the book has 40 citations, despite being out for less than 6 weeks. The prescience of some authors citing it is impressive, with one article published in the year 2000 referencing this fourth edition. Research must be wonderfully accelerated there, with developers being able to read about future breakthroughs that they can then write up.

Ray Tracing Monday, Again

I enjoyed the previous Ray Tracing Monday two weeks ago, so let’s do it again.

Since it’s been two weeks, two resources:

- “Real-Time Ray Tracing” – this is a chapter (number 26) of Real-Time Rendering that we just finished and is free for download. 46 pages with a focus on DXR, but also including a detour through everyone’s favorite topic, spatial data structures for efficient ray tracing. Tomas, Angelo, and Seb did the heavy lifting on this one over the past 3 months; I helped edit and added bits where I could.

- Ray Tracing Resources Page – along the way we ran across lots of resources. With the chapter finished as of today, I was inspired to make a page of links, mostly things I don’t want to lose track of myself or found of historical interest. Please do feel free to send me awesome resources to add, etc.

One entertaining thing I ran across was an image in the gallery for Chunky, a quite-good custom path tracer for Minecraft worlds:

Cornell blox

CC0 – Public Domain for the World

Steve Hollasch mentioned that there’s a “new” (well, new to me – it’s 9 years old) Creative Commons instrument, CC0. Their website has an explanation of the problem with trying to put something you made into the public domain, and how CC0 solves this. Open Knowledge International (no, I’d never heard of them, either) recommends it, which I’ll take as a good sign. I didn’t know of this CC0 beast, and suspect readers here don’t, either, so now you do. It’s mostly not a license, it’s a “dedication,” a way to ensure that something you created is considered unowned and free to reuse in every country.

If you want to make sure your code is properly credited to you, use something such as the MIT license instead, or some other (often more restrictive) choice. Creative Commons recommends not using their other licenses for code, but rather some common source code license. I’m assuming (it’s not super-clear) that CC0 is also fine for code you’re putting into the public domain. Update: aha, Steve Hollasch sent this follow-up link – CC0 can be applied to code, and that link shows you how to do it.

Update: I received a number of interesting responses from my tweet of this post. David Williams points out that CC0 is approved by the Free Software Foundation for putting code in the public domain, as it has a fallback license for countries where public domain is not recognized. Arvid Gerstmann notes that dual-licensing with CC0 and the MIT license may be an even better option, for those companies where the lawyers haven’t approved the lesser-known CC0 but have approved use of code with the MIT license.

I know this all sounds like “it doesn’t matter, I’m never going to enforce this,” but it does, sadly. With Graphics Gems we made up a license long ago (basically, “don’t be a jerk”) because someone was trying to sell his company to a larger firm, which had some software testing firm test the smaller company’s code for copyright infringements, and various bits of Graphics Gems code popped up. If CC0 had existed, or maybe even the questionable (and rude) WTFPL, we would have gone with that. Happily, there is now the CC0. (tweet)

SIGGRAPH 2018 Stuff

First, the links you need:

- Stephen Hill’s wonderful SIGGRAPH 2018 links page. Note the fair number of presentations recorded and quickly put up.

- Material from the High Performance Graphics conference preceding SIGGRAPH is all online. Hats off to them for doing this.

- Matt Pharr guest-edited a special section of the latest issue (vol. 37, no. 3, June 2018) of ACM TOG, full of papers on how production renderers work.

- Bonus link: this GDC 2018 link collection by Krzysztof Narkowicz; also, GDC 2014 and earlier by Javier “Jare” Arevalo (thanks to Stephen Hill for the tip-off).

Beyond all the deep ray learning tracing, which I’ve noted in other tweets and posts, the one technology on the show floor that got “you should go check it out” buzz was the Looking Glass Kickstarter, a good-looking and semi-affordable (starting in the $600 range, not thousands or higher) “holographic” display. 60 FPS color, 4 and 8 megapixel versions, but those pixels are divided up among the 45 views that must be generated each frame. Still, it looked lovely, and vaguely magical (and has Sketchfab support).

I mostly went to courses and talked with people. Here are a few tidbits of general interest:

- Shadertoy is one of my favorite websites, but it takes too long to load and looks like it’s locked up. I learned that you can avoid this problem! Sign in, go to your profile, Config, and check “Use previews (avoid compilation times)”. The site is so much more usable now. Too bad it’s not the default, I expect because they want to show you cool things without clicking, but then end up not showing anything for a while, e.g., 22 seconds for the popular page to compile on my fast workstation. Now this page shows up immediately, and I don’t mind that the animations don’t run when my mouse hovers over the image. (thanks, David Hart!)

- Colin Barré-Brisebois mentioned in NVIDIA’s real-time ray tracing course that Schlick’s Fresnel approximation could not be used in their work, but I didn’t quite catch why. The notes don’t mention this – I need to follow up with him… message sent. Aha, he replies, “It was because of total internal reflection. The Schlick approx doesn’t handle it.”

- One of the speakers in Path Tracing in Production mentioned in passing that some film frames took 300 hours of compute per frame, 1600 rays per pixel. Aha, it was for “Transformers: The Last Knight.” I recall Jim Blinn had some rule-of-thumb long ago about how frames will take 20 minutes no matter how much faster computers get and algorithms improve efficiency. I think that amount needs updating, maybe based on cost (after all, the computers he was using for computation back then weren’t cheap).

- The PDF notes for this course were extensive, though the course lectures were not recorded (heaven forbid anyone capture an unauthorized bunny or chimp). It’ll be interesting once consumer body cams become a thing. Anyway, the notes capture all sorts of bits of wisdom, such as ways of finding structure to help denoise images (yes, film rendering companies use denoising, too), e.g., “we used the tangent vector of the fur to provide the denoiser with ‘normals’ as it proved to have higher contrast than either the true normals of the fur, or the normals of the skin that the fur was spawned from.”

- You know quaternions. David Hart noted a different algebra, octonions, which I’d never heard of before. Bunch of videos on YouTube.

- Regrets: I missed the “Future Artificial Intelligence and Deep Learning Tools for VFX” panel, and there’s no video AFAIK. I wanted to go, because after Glassner’s intro to deep learning course (which was recorded, and well-attended & well-received), Doug Roble from Digital Domain showed me a little bit of their markerless facial mocap system, which looked great. He writes, “We’ll probably have some YouTube videos… soon.”
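
The total internal reflection point from the Schlick item above is easy to see numerically. The sketch below compares Schlick's approximation with the unpolarized dielectric Fresnel equations for a ray leaving glass into air; the function names and the indices of refraction (1.5 and 1.0) are chosen for illustration and are not taken from the course notes.

```python
import math

def schlick(cos_i, n1, n2):
    """Schlick's approximation to Fresnel reflectance."""
    f0 = ((n1 - n2) / (n1 + n2)) ** 2
    return f0 + (1.0 - f0) * (1.0 - cos_i) ** 5

def fresnel_dielectric(cos_i, n1, n2):
    """Unpolarized Fresnel reflectance for a ray going from index n1 into n2."""
    sin_i = math.sqrt(max(0.0, 1.0 - cos_i * cos_i))
    sin_t = n1 / n2 * sin_i  # Snell's law
    if sin_t >= 1.0:
        return 1.0  # total internal reflection: nothing is transmitted
    cos_t = math.sqrt(1.0 - sin_t * sin_t)
    r_s = (n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)
    r_p = (n2 * cos_i - n1 * cos_t) / (n2 * cos_i + n1 * cos_t)
    return 0.5 * (r_s * r_s + r_p * r_p)

# Inside glass (n = 1.5) looking out into air (n = 1.0) at 60 degrees from
# the normal, which is past the critical angle of roughly 41.8 degrees.
cos_60 = 0.5
exact = fresnel_dielectric(cos_60, 1.5, 1.0)
approx = schlick(cos_60, 1.5, 1.0)
```

Here `exact` is 1.0, since all light reflects, while `approx` comes out near 0.07. The two agree at normal incidence (both 0.04), so the mismatch only shows up once rays travel inside a denser medium, which real-time rasterizers rarely modeled but ray tracers do.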

Finally, this, a clever photo booth at NVIDIA. The “glass” sphere looks rasterized, since it shows little refraction. Though, to make it solid, or even fill it with water, would have been massively heavy and unworkable. Sadly, gases don’t have much of a refractive index, though maybe it’s as well – a chloroform-filled sphere might not be the safest thing. Anyway, it’s best as-is: people want to see their undistorted faces through the sphere. If you want realism, fix it in post.

Win a free copy of Real-Time Rendering, 4th Edition, and receive it someday…

Now that I’m back from SIGGRAPH, I can catch up on all the things. So here’s one: win a free copy of Real-Time Rendering, 4th Edition. Our publisher is giving away three copies, deadline to enter is August 31.

As far as actually receiving a copy of the book, well, if it’s any consolation, none of us authors have a physical copy at this point, either. Our publisher wrote on August 8th:

This reprint should be in the warehouse within the next 3 weeks. I assume the fulfillment dept will give customer orders priority over author copies.

So it’s a case of the shoemaker’s children going barefoot. Amazon says the book’s back in stock on August 27th.

I do like that the first three chapters are free on Amazon, for Kindle, and Google Play, so I hope that will tide people over until these ship. That this much content was made free was unexpected, a happy decision on the publisher’s part. If you’re done with those chapters and still waiting, don’t forget to read Pete’s now-free books on ray tracing.

I got to see a physical copy of our book at SIGGRAPH, so I know such things exist. I also bought the book on Kindle (which at first had some download problem on my iPhone and PC, but downloaded fine the next day) and Google Play (surprised to find it there; same price as Kindle, by some amazing coincidence), as I wanted to see if a layout problem in my local copy was present in the book (happily, it wasn’t – ahhh, the mysteries of LaTeX).

One of the best parts of SIGGRAPH was actually meeting my coauthors. The wild party on the yacht that night in Vancouver Harbor was really something, too, but then I realized I made that up.

Eric, Angelo, Naty, Seb, Tomas, and Michal; photo courtesy of Mauricio Vives