Time to look both forward and back!

- It’s Public Domain Day, when various old works become legal to share and draw upon for new creative endeavors. The original Mickey Mouse, Lady Chatterley’s Lover, Escher’s Tower of Babel, and much else are now free, at least in the US. (Sadly, Canada’s gone the other direction, along with New Zealand and Japan.) Reuse has already begun.

- Speaking of copying, “3D prints” of paintings, where a robot uses brushes to reproduce a work, are now a commercial venture.

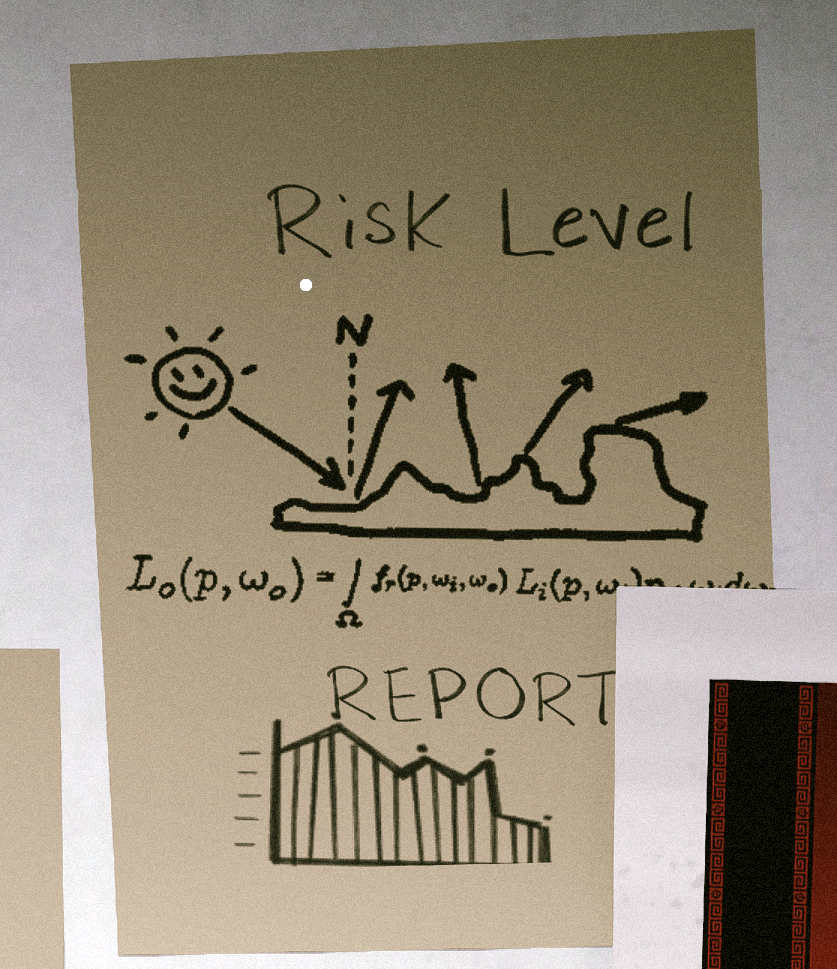

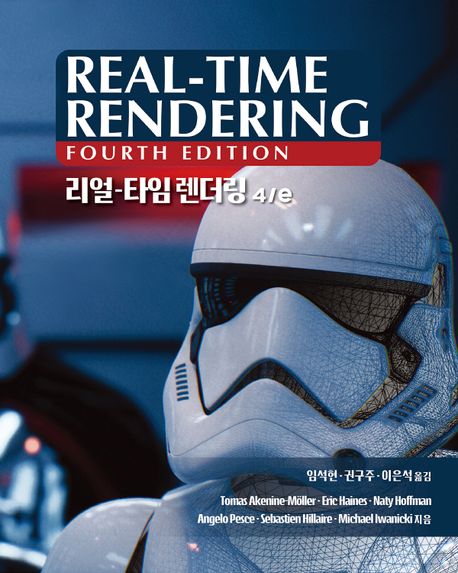

- Speaking of free works, happily the authors have put the new, 4th edition of Physically Based Rendering, published in March 2023, up for free on the web. Our list of all the free graphics books (that we know of) is here.

- Speaking of books, Jendrik Illner started a page describing books and resources for game engine development. His name should be familiar; he’s the person who compiles the wonderful Graphics Programming weekly posts. I admit to hearing about the PBR 4th edition being up for free from his latest issue, #320 (well, it’s been free since November 1st, but I forgot to mark my calendar). This issue is not openly online as of today, as it is sent first to Patreon subscribers. Totally worth a dollar a month for me (actually, I pay $5, because he deserves it).

- ChatGPT was, of course, hot in 2023, but isn’t quite ready to replace graphics programmers. Pretty funny, and now I want someone to add a control called Photon Confabulation to Arnold (or to every renderer). Make it so, please.

- The other good news is that our future AI overlords can be defeated by somersaults, hiding in cardboard boxes, or dressing up as a fir tree.

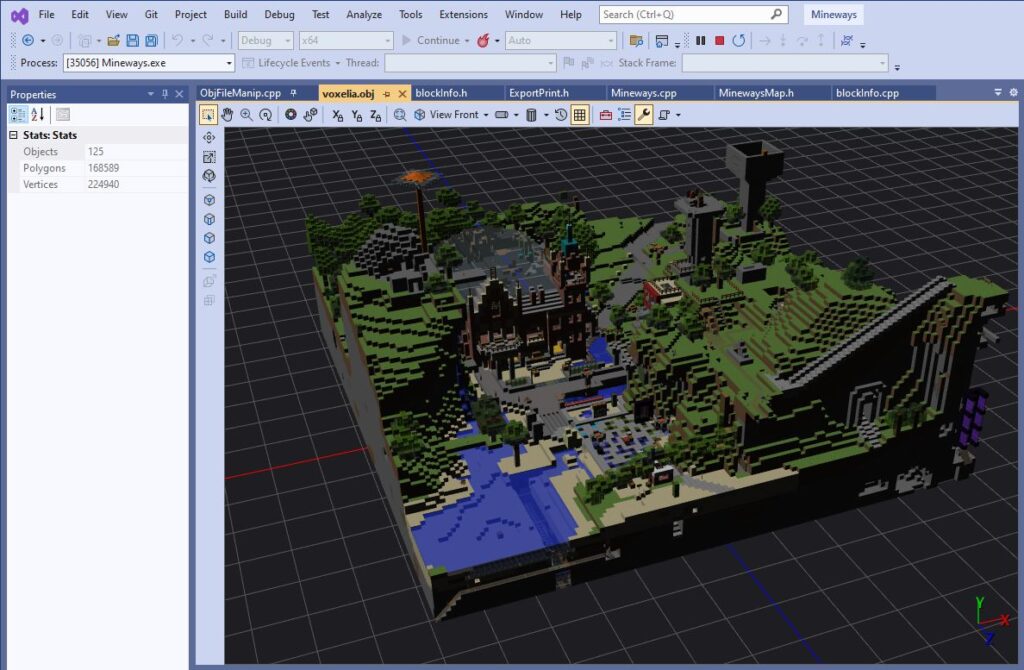

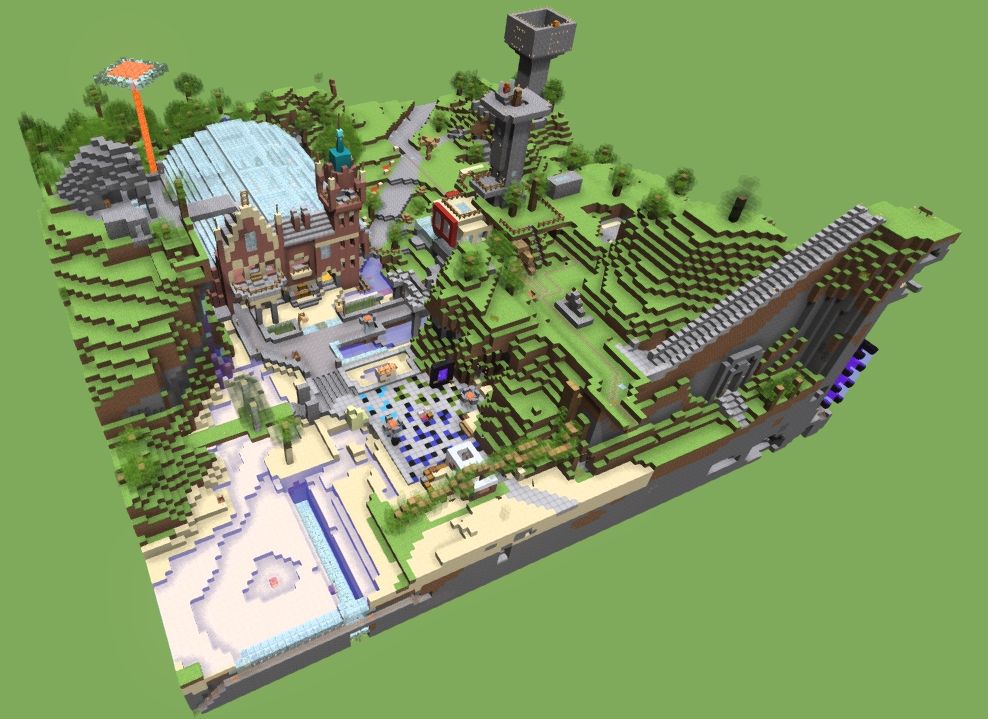

- What’s the new graphics thing in 2023? NeRFs are so 2020. This year the cool kids started using 3D Gaussian splatting to represent and render models. Lots and lots of papers and open source implementations came out (and will come out) after the initial paper presentation at SIGGRAPH 2023. Aras has a good primer on the basic ideas of this stuff, at least on the rendering end. If you just want to look at the pretty, this (not open source) viewer page is nicely done. Me, I like both NeRFs and gsplats – non-polygonal representation is fun stuff. I think part of the appeal of Gaussian splatting is that it’s mostly old school. Using spherical harmonics to store direction-dependent colors is an old idea. Splatting is a relatively old rendering technique that can work well with rasterization (no ray casting needed). Forming a set of splats does not invoke neural anything – there’s no AI magic to decode (though, as Aras notes, they form the set of splats “using gradient descent and ‘differentiable rendering’ and all the other things that are way over my head”). I do like that someone created a conspiracy post – that’s how you know you’ve made it.
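
To make the "spherical harmonics for direction-dependent colors" idea concrete, here is a minimal sketch of evaluating a splat's color for a given view direction, using only the degree 0 and degree 1 real spherical harmonic terms. The function name `sh_color`, the coefficient ordering (constant term, then y, z, x), and the sign convention are assumptions made for illustration. Actual Gaussian splatting implementations may use more bands, different signs, and an extra offset before clamping.

```python
import math

# Real spherical harmonic normalization constants.
SH_C0 = 0.5 * math.sqrt(1.0 / math.pi)      # degree 0 (constant) term
SH_C1 = math.sqrt(3.0 / (4.0 * math.pi))    # scale shared by the three degree-1 terms


def sh_color(coeffs, direction):
    """Evaluate an RGB color for a view direction.

    coeffs: four RGB triples, ordered (assumed here) as the constant term
            followed by the degree-1 terms for y, z, and x.
    direction: 3-vector; it is normalized before use.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length

    # Basis function values for this direction, matching the coeffs order.
    basis = (SH_C0, SH_C1 * y, SH_C1 * z, SH_C1 * x)

    # Weighted sum per color channel, clamped so colors never go negative.
    return tuple(
        max(0.0, sum(b * c[channel] for b, c in zip(basis, coeffs)))
        for channel in range(3)
    )


# A gray splat that looks redder when viewed along +x than along -x.
coeffs = [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.5, 0.0, 0.0)]
front = sh_color(coeffs, (1.0, 0.0, 0.0))
back = sh_color(coeffs, (-1.0, 0.0, 0.0))
```

The constant term gives the splat its base color, and the three degree-1 terms tilt that color toward or away from an axis. That is why a handful of stored numbers per splat is enough to fake simple view-dependent shading with no neural network involved at render time.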