Andrew Glassner has made an 8-week course about the graphics language Processing. The first half of the course is free; if you find you like it, the second half is just $25. Even if you don’t want to take the course, you should watch the 2.5-minute video at the site – beware, it may change your mind. The video gives a sense of the power of Processing and some of the wonderful things you can do with it. My small bit of experience with the language showed me it’s a nice way to quickly display and fiddle around with all sorts of graphical ideas. While I dabbled for a week, Andrew used it for half a decade and has made some fascinating programs. Any language that can have such a terrible name and still thrive in so many ways definitely has something going for it.

Author Archives: Eric

Why use WebGL for Graphics Research?

guest post by Patrick Cozzi, @pjcozzi.

This isn’t as crazy as it sounds: WebGL has a chance to become the graphics API of choice for real-time graphics research. Here’s why I think so.

An interactive demo is better than a video.

WebGL allows us to embed demos in a website, like the demo for The Compact YCoCg Frame Buffer by Pavlos Mavridis and Georgios Papaioannou. A demo gives readers a better understanding than a video alone, allows them to reproduce performance results on their hardware, and enables them to experiment with debug views like the demo for WebGL Deferred Shading by Sijie Tian, Yuqin Shao, and me. This is, of course, true for a demo written with any graphics API, but WebGL makes the barrier-to-entry very low; it runs almost everywhere (iOS is still holding back the floodgates) and only requires clicking on a link. Readers and reviewers are much more likely to check it out.

WebGL runs on desktop and mobile.

Android devices now have pretty good support for WebGL. This allows us to write the majority of our demo once and get performance numbers for both desktop and mobile. This is especially useful for algorithms that will have different performance implications due to differences in GPU architectures, e.g., early-z vs. tile-based, or network bandwidth, e.g., streaming massive models.

WebGL is starting to expose modern GPU features.

WebGL is based on OpenGL ES 2.0 so it doesn’t expose features like timer queries, compute shaders, uniform buffers, etc. However, with some WebGL 2 (based on ES 3.0) features being exposed as extensions, we are getting access to more GPU features like instancing and multiple render targets. Given that OpenGL ES 3.1 will be released this year with compute shaders, atomics, and image load/store, we can expect WebGL to follow. This will allow compute-shader-based research in WebGL, an area where I expect we’ll continue to see innovation. In addition, with NVIDIA Tegra K1, we see OpenGL 4.4 support on mobile, which could ultimately mean more features exposed by WebGL to keep pace with mobile.

Some graphics research areas, such as animation, don’t always need access to the latest GPU features and instead just need a way to visualize their results. Even many of the latest JCGT papers on rendering can be implemented with WebGL and the extensions it exposes today (e.g., “Weighted Blended Order-Independent Transparency”). On the other hand, some research will explore the latest GPU features or use features only available to languages with pointers, for example, using persistent-mapped buffers in Approaching Zero Driver Overhead by Cass Everitt, Graham Sellers, John McDonald, and Tim Foley.

WebGL is faster to develop with.

Coming from C++, JavaScript takes some getting used to (see An Introduction to JavaScript for Sophisticated Programmers by Morgan McGuire), but it has its benefits: lightning-fast iteration times, lots of open-source third-party libraries, some nice language features such as functions as first-class objects and JSON serialization, and some decent tools. Most people will be more productive in JavaScript than in C++ once up to speed.

JavaScript is not as fast as C++, which is a concern when we are comparing a CPU-bound algorithm to previous work in C++. However, for GPU-bound work, JavaScript and C++ are very similar.

Try it.

Check out the WebGL Report to see what extensions your browser supports. If it meets the needs for your next research project, give it a try!
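For a quick programmatic version of that check, here is a minimal sketch. It assumes a wish list of three extensions chosen purely for illustration (`WANTED_EXTENSIONS` is hypothetical, as are the names `ExtensionSource` and `missingExtensions`); the only real API relied on is the context's `getSupportedExtensions()` method.

```typescript
// Minimal shape of the part of a WebGL context this sketch relies on.
// A real WebGLRenderingContext satisfies it through getSupportedExtensions().
interface ExtensionSource {
  getSupportedExtensions(): string[] | null;
}

// Hypothetical wish list for a research demo; edit it to match the project.
const WANTED_EXTENSIONS: string[] = [
  "ANGLE_instanced_arrays", // instancing
  "WEBGL_draw_buffers",     // multiple render targets
  "OES_texture_float",      // floating-point textures
];

// Returns the wanted extensions that the context does not report.
// getSupportedExtensions() returns null when the context is lost,
// in which case everything is treated as missing.
function missingExtensions(gl: ExtensionSource, wanted: string[]): string[] {
  const supported = gl.getSupportedExtensions() || [];
  return wanted.filter((extensionName) => supported.indexOf(extensionName) === -1);
}
```

In a browser, the context returned by `canvas.getContext("webgl")` can be passed directly as the first argument; an empty result means everything on the list is available.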

Seven Things for February 1, 2014

Here we go:

- Four free mini-courses from SIGGRAPH on computer graphics, how nice is that? First one’s by Andrew Glassner, one of my favorite lecturers.

- GPU Pro 5 teaser articles are up for viewing. I look forward to the day when we can just get the dang book immediately – really, I have to wait until March? The world could end before then, and then where would I be?

- GPU Pro 6 has a call for participation. Do it!

- A great illusion at Shadertoy, and a new shading illusion (I hope two links here make up for the two separate GPU Pro listings).

- A little dated, but a thorough rundown of various global illumination related effects on the GPU.

- Some worthwhile arguments against doing a z-buffer prepass. I tend to agree (and of course YMMV), as doing a good sort goes a long way towards getting the benefits.

- And since I haven’t been posting half as much as I’d like, here’s an amazing collection of graphics blogs, that should keep y’all satisfied.

3D Printed Sphereflake

Well, it’s not printed in silver or steel or somesuch, but it’s still fun to see. This is from Alexander Enzmann, who did a lot of work on the SPD model software, outputting a wide variety of formats. Since the spheres in the sphereflake normally touch each other at only one point, he modified the program a bit to push the spheres only 80% of the way along their axis translation, giving more overlap between each pair. He printed this on his Solidoodle printer.
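To put rough numbers on that overlap, here is a small sketch. It is not Alexander's code: it assumes the full translation places a child's center at the sum of the two radii from the parent's center (so the spheres just touch), and that each child has one third of its parent's radius. The function names are made up for illustration.

```typescript
// Distance between parent and child centers when the child is pushed only
// `fraction` of the way along its translation axis. Assumes the full
// translation (fraction = 1) is parentRadius + childRadius, i.e. the two
// spheres touch at a single point.
function childCenterDistance(parentRadius: number, childRadius: number, fraction: number): number {
  return fraction * (parentRadius + childRadius);
}

// How deeply the two spheres interpenetrate along the axis
// (0 when they just touch, positive when they overlap).
function overlapDepth(parentRadius: number, childRadius: number, fraction: number): number {
  return parentRadius + childRadius - childCenterDistance(parentRadius, childRadius, fraction);
}

const flakeParentRadius = 1;
const flakeChildRadius = flakeParentRadius / 3; // assumed sphereflake ratio

console.log("center distance at 80%:", childCenterDistance(flakeParentRadius, flakeChildRadius, 0.8));
console.log("overlap depth at 80%:", overlapDepth(flakeParentRadius, flakeChildRadius, 0.8));
```

Under these assumptions the overlap is always 20% of the summed radii, so it shrinks along with the spheres at each level of the flake.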

512 and counting

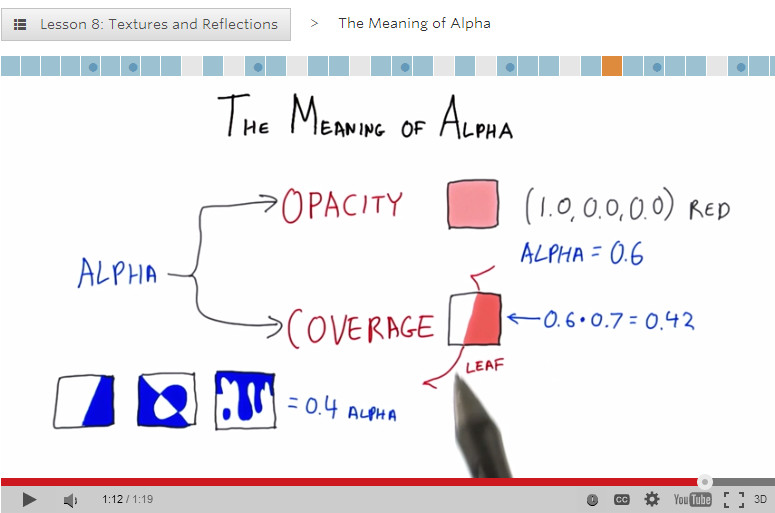

I noticed I reached a milestone number of postings today, 512 answers posted to the online Intro to 3D Graphics course. Admittedly, some are replies to questions such as “how is your voice so dull?” However, most of the questions are ones that I can chew into. For example, I enjoyed answering this one today, about how diffuse surfaces work. I then start to ramble on about area light sources and how they work, which I think is a really worthwhile way to think about radiance and what’s happening at a pixel. I also like this recent one, about z-fighting, as I talk about the giant headache (and a common solution) that occurs in ray tracing when two transparent materials touch each other.

So the takeaway is that if you ever want to ask me a question and I’m not replying to email, act like you’re a student, find a relevant lesson, and post a question there. Honestly, I’m thoroughly enjoying answering questions on these forums; I get to help people, and for the most part the questions are ones I can actually answer, which is always a nice feeling. Sometimes others will give even better answers and I get to learn something. So go ahead, find some dumb answer of mine and give a better one.

By the way, I finally annotated the syllabus for the class. Now it’s possible to cherry-pick lessons; in particular, I mark all lessons that are specifically about three.js syntax and methodology if you already know graphics.

HPG CFP 2014

Really, you just need this link. I think HPG is the most useful conference I keep tabs on, from a “papers that can help me out” standpoint. SIGGRAPH’s better for a “see what’s happening in the field as a whole” view, and often there’s useful stuff in the courses and sketches, but in the area of papers HPG far outstrips SIGGRAPH in the number of papers directly useful to me. I can’t justify going as often as I like (especially when it’s in Europe), but HPG’s a great conference.

Anyway, here’s the CFP boilerplate, to save your precious fingers from having to click on that link (it’s actually amazing to me how often links are not clicked on; in my own life I tend to consider clicking on a link something of a commitment).

High-Performance Graphics 2014 is the leading international forum for performance-oriented graphics and imaging systems research including innovative algorithms, efficient implementations, languages, parallelism, compilers, hardware and architectures for high-performance graphics. High-Performance Graphics was founded in 2009, synthesizing multiple conferences to bring together researchers, engineers, and architects to discuss the complex interactions of parallel hardware, novel programming models, and efficient algorithms in the design of systems for current and future graphics and visual computing applications.

The conference is co-sponsored by Eurographics and ACM SIGGRAPH. The 2014 program features three days of paper and industry presentations, with ample time for discussions during breaks, lunches, and the conference banquet. It will be co-located with EGSR 2014 in Lyon, France, and will take place on June 23–25, 2014.

Topics include:

- Hardware and systems for high-performance graphics and visual computing

- Graphics hardware simulation, optimization, and performance measurement

- Shading architectures

- Novel fixed-function hardware design

- Hardware for accelerating computer

- Hardware design for mobile, embedded, integrated, and low-power devices

- Cloud-accelerated graphics systems

- Novel display technologies

- Virtual and augmented reality systems

- High-performance computer vision and image processing techniques

- High-performance algorithms for computational photography, video, and computer vision

- Hardware architectures for image and signal processors (ISPs)

- Performance analysis of computational photography and computer vision applications on parallel architectures, GPUs, and specialized hardware

- Programming abstractions for graphics

- Interactive rendering pipelines (hardware or software)

- Programming models and APIs for graphics, vision, and image processing

- Shading language design and implementation

- Compilation techniques for parallel graphics architectures

- Rendering algorithms

- Spatial acceleration data structures

- Surface representations and tessellation algorithms

- Texturing and compression/decompression algorithms

- Interactive rendering algorithms (hardware or software)

- Visibility algorithms (ray tracing, rasterization, transparency, anti-aliasing, …)

- Illumination algorithms (shadows, global illumination, …)

- Image sampling strategies and filtering techniques

- Scalable algorithms for parallel rendering and large data visualization

- Parallel computing for graphics and visual computing applications

- Physics and animation

- Novel applications of GPU computing

Important Dates:

- Paper submission deadline: April 4, 2014

- Notification of acceptance: May 12, 2014

- Camera-ready papers due: May 22, 2014

- Conference: June 23–25, 2014

More information: www.HighPerformanceGraphics.org

Long Plane Rides and JCGT

I’m about to embark on a 20-hour (or so) plane trip to Shanghai. With most of that time being in the plane, I’m loading up on stuff to read on my iPad. (Tip: GoodReader is great for copying files from your DropBox to your iPad.) JCGT does a great job of helping me fill up. Just go to the “Read” area and there’s a long list of articles, select the ones that sound interesting, and download away (well, having all the papers be called “paper.pdf” is not ideal, but that will eventually get fixed). No messing around with logging in, no digging to find things, just “here’s a nicely-illustrated list, have at it”. It’s amazing to me how much the little illustrations help me quickly trim the search.

In contrast, I had to do a few minutes of clever searching to find the SIGGRAPH 2013 Proceedings. Shame on you, ACM DL, for not responding properly to the searches “SIGGRAPH 2013” or “SIGGRAPH 2013 papers”. The first search shows everything but the papers, since the papers are part of TOG; the second search gives practically random results.


Some Presents for Xmas

Here are a few cool things I noticed that seem appropriate to post today.

First, this person is doing cool real-life procedural texturing. Or I should say, is really covering up, since we know the aliens are the ones who are really making these.

The Graphics Codex now has three sample PDFs available for free download, to give you a sense of what’s in the app/book. Find the links in the right-hand column.

The Christmas Experiments gives 24 little graphical presents, scroll down to make them appear. I haven’t opened them all up yet, as I was working backward and only got as far as this one, which is lovely and interactive.

Merry

Improved Graphics Transforms Demo

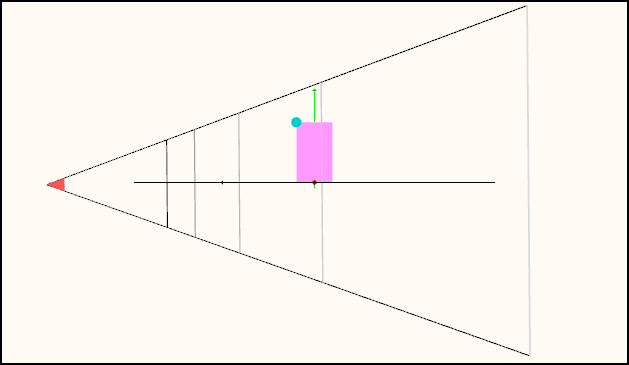

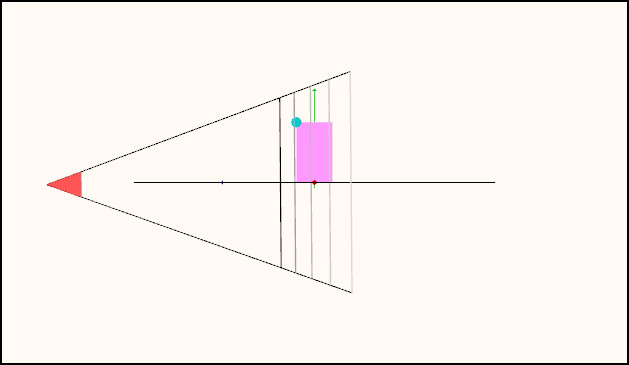

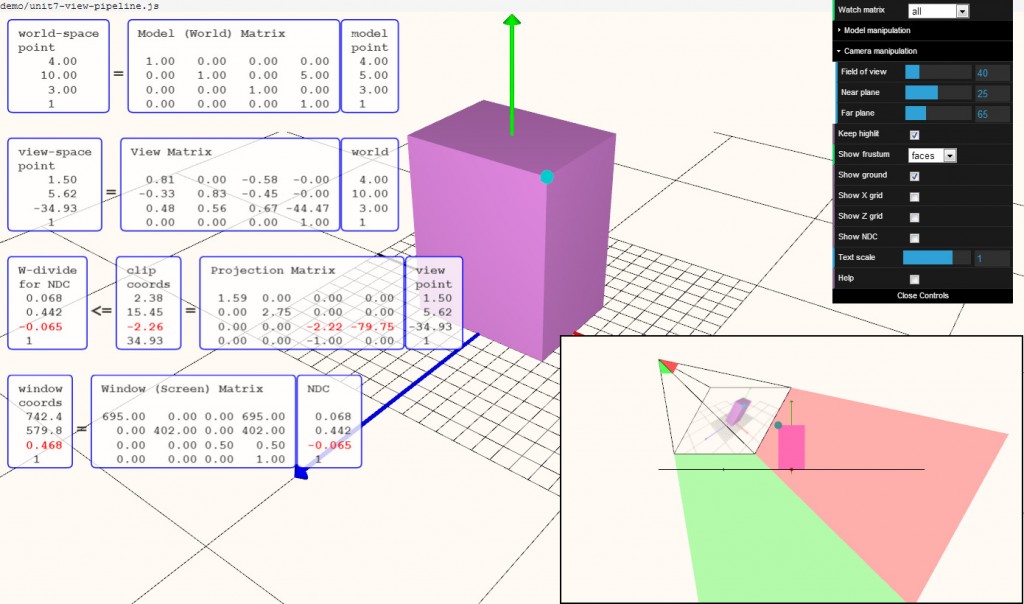

With the holidays upon us, it’s time to hack! Well, a little bit. I spent a fair bit of time improving my transforms demo, folding in comments from others and my own ideas. Many thanks to all who sent me suggestions (and anyone’s welcome to send more). I like one subtle feature now: if the blue test point is clipped, it turns red and clipping is also noted in the transforms themselves.
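As a rough sketch of the kind of test behind that red point (this is the standard OpenGL/WebGL clip-space rule, not necessarily how the demo's code is written), a point in clip coordinates is inside the view volume when each of x, y, and z lies between -w and w. The names `Vec4` and `isClipped` are made up for illustration.

```typescript
// Homogeneous clip-space coordinates: x, y, z, w.
type Vec4 = [number, number, number, number];

// OpenGL/WebGL convention: a clip-space point is inside the view volume
// when -w <= x <= w, -w <= y <= w and -w <= z <= w. Anything else is clipped.
function isClipped(clip: Vec4): boolean {
  const [x, y, z, w] = clip;
  return Math.abs(x) > w || Math.abs(y) > w || Math.abs(z) > w;
}

const insidePoint: Vec4 = [0.5, -0.25, 0.9, 1]; // all components within [-w, w]
const outsidePoint: Vec4 = [1.5, 0, 0.2, 1];    // x exceeds w, off the right edge

console.log(isClipped(insidePoint));  // false
console.log(isClipped(outsidePoint)); // true
```

Doing the test before the perspective divide also catches points behind the eye, where w is negative and the divided values would be misleading.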

The feature I like the most is that which shows the frustum. Run the demo and select “Show frustum: depths”. Admire that the scene is rendered on the view frustum’s near plane. Rotate the camera around (left mouse) until it’s aligned to a side view of the view frustum. You’ll see the near and far plane depths (colored), and some equally spaced depth planes in between (in terms of NDC and Z-depth values, not in terms of world coordinates).
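To see why planes that are equally spaced in NDC depth bunch up near the eye, here is a minimal sketch that maps NDC z values back to distances in front of the camera. It assumes the standard OpenGL perspective projection (NDC z running from -1 at the near plane to +1 at the far plane); the function names are made up for illustration and are not from the demo.

```typescript
// Distance in front of the camera that maps to a given NDC depth,
// assuming the standard OpenGL perspective projection:
//   ndcZ = (far + near) / (far - near) - 2 * far * near / ((far - near) * distance)
// solved here for distance.
function viewDistanceFromNdcZ(ndcZ: number, near: number, far: number): number {
  return (2 * far * near) / (far + near - ndcZ * (far - near));
}

// Distances of `planeCount` planes equally spaced in NDC z from -1 to +1.
// planeCount must be at least 2 (the near and far planes themselves).
function depthPlaneDistances(near: number, far: number, planeCount: number): number[] {
  const distances: number[] = [];
  for (let i = 0; i < planeCount; i++) {
    const ndcZ = -1 + (2 * i) / (planeCount - 1);
    distances.push(viewDistanceFromNdcZ(ndcZ, near, far));
  }
  return distances;
}

// Near plane far from the object versus snug against it.
console.log(depthPlaneDistances(1, 100, 5));
console.log(depthPlaneDistances(50, 100, 5));
```

With near = 1 and far = 100, the middle plane (NDC z = 0) sits at 200/101, or about 1.98 units from the eye, so half the depth range is spent on the first unit of distance. With near = 50 the same plane lands at about 66.7, a much more even spread.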

Now play with the near and far plane depths under “Camera manipulation” (open that menu by clicking on the arrow to the left of the word “Camera”). This really shows the effect of moving the near plane close to the object, evening out the distribution of the plane depths. Here’s an example:

The mind-bender part of this new viewport feature is that if you rotate the camera, you’re of course rotating the frustum in the opposite direction in the viewport, which holds the view of the scene steady and shows the camera’s movement. My mind is constantly seeing the frustum “inverted”, as it wants both directions to be in the same direction, I think. I even tried modeling the tip where the eye is located, to give a “front” for the eye position, but that doesn’t help much. Probably a fully-modeled eyeball would be a better tipoff, but that’s way more work than I want to put into this.

You can try lots of other things; dolly is done with the mouse wheel (or middle mouse up and down), pan with the right mouse. All the code is downloadable from my github repository.

Click on image for a larger, readable version.

Graphics Pipeline Demo

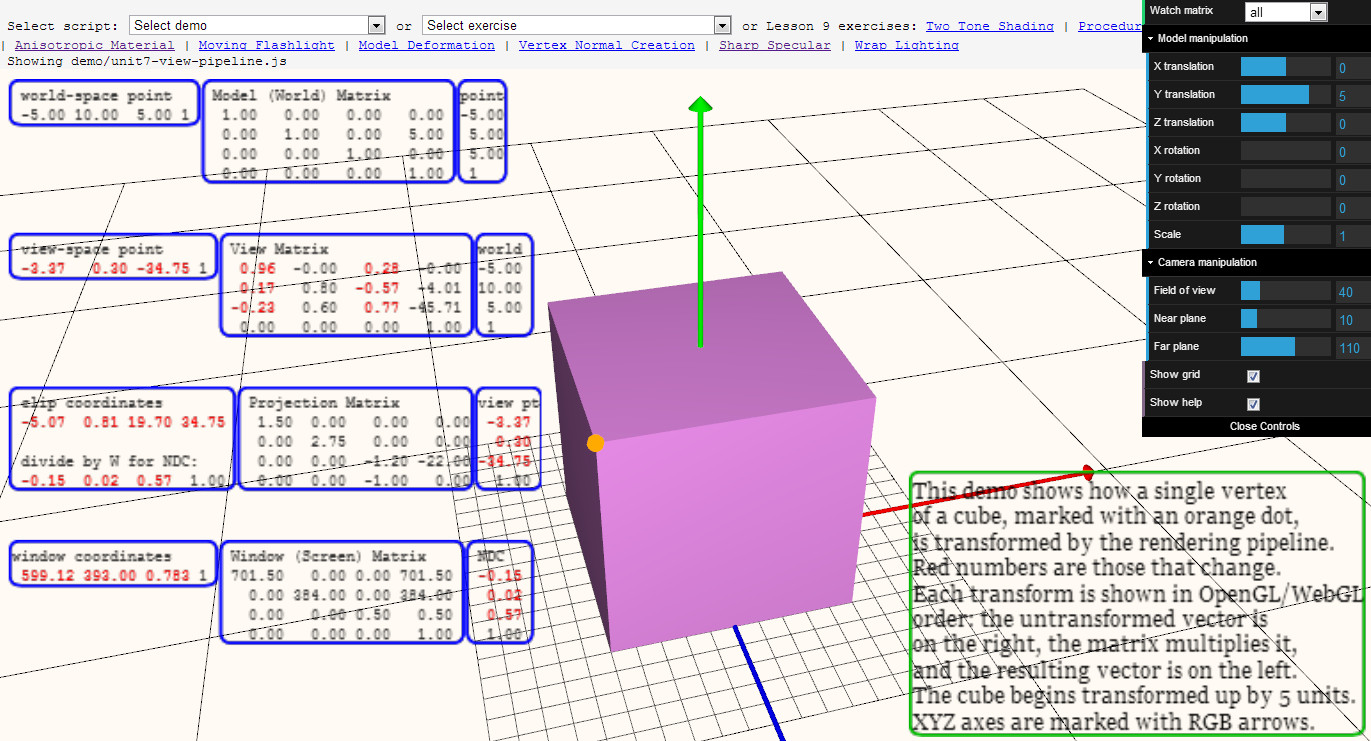

Try it out (you have to have WebGL enabled etc.)

I made this demo as a few students of the Interactive Graphics MOOC were asking for something showing the various transforms from beginning to end.

It’s not a fantastic demo (yet), but if you roughly understand the pipeline, you can then look at a given point and see how it goes through each transform.

It’s actually kind of a fun puzzle or guessing game, if you understand the transforms: if I pan, what values will change? What if I change the field of view, or the near plane?
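For anyone who wants to check their guesses by hand, here is a minimal sketch of the last few stages, starting from a point already in view (camera) space. It assumes the usual OpenGL conventions (camera looking down -z, NDC in [-1, 1], window origin at the lower left) rather than reproducing the demo's three.js code, and every name in it is made up for illustration.

```typescript
type Vec3 = [number, number, number];
type Vec4 = [number, number, number, number];

interface PerspectiveCameraParams {
  fovYDegrees: number; // vertical field of view
  aspect: number;      // width / height
  near: number;
  far: number;
}

// View space -> clip space, using the standard OpenGL perspective matrix.
function viewToClip(p: Vec3, cam: PerspectiveCameraParams): Vec4 {
  const focal = 1 / Math.tan((cam.fovYDegrees * Math.PI) / 180 / 2);
  const [x, y, z] = p;
  const a = (cam.far + cam.near) / (cam.near - cam.far);
  const b = (2 * cam.far * cam.near) / (cam.near - cam.far);
  return [(focal / cam.aspect) * x, focal * y, a * z + b, -z];
}

// Clip space -> normalized device coordinates (the perspective divide).
function clipToNdc(c: Vec4): Vec3 {
  return [c[0] / c[3], c[1] / c[3], c[2] / c[3]];
}

// NDC -> window coordinates: pixels in x and y, depth remapped to [0, 1].
function ndcToWindow(ndc: Vec3, width: number, height: number): Vec3 {
  return [((ndc[0] + 1) / 2) * width, ((ndc[1] + 1) / 2) * height, (ndc[2] + 1) / 2];
}

const demoCamera: PerspectiveCameraParams = { fovYDegrees: 40, aspect: 2, near: 1, far: 100 };
const viewPoint: Vec3 = [1, 0.5, -5];
const clipPoint = viewToClip(viewPoint, demoCamera);
console.log("clip:", clipPoint);
console.log("window:", ndcToWindow(clipPoint.length === 4 ? clipToNdc(clipPoint) : [0, 0, 0], 800, 400));
```

Under these conventions, changing the field of view rescales only the clip-space x and y, while changing the near plane alters only clip-space z; w stays tied to the point's distance in front of the camera.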

I’d love suggestions. I can imagine ways to help guide the user with what various coordinate transforms mean, e.g. putting up a pixel grid and labeling it when just the window coordinates transform is selected, or maybe a second window showing a side view and the frustum (but I’m not sure what I’d put in that window, or what view to use for an arbitrary camera).

I’ve been bumping into limitations of three.js as it is, but I’m on a roll so that’s why I’m asking.