Andrew Woo and Pierre Poulin will be signing their new book “Shadow Algorithms Data Miner” at the CRC Press/AK Peters booth, #929, at SIGGRAPH, Wednesday, 3-4 pm.

Author Archives: Eric

SIGGRAPH schedule for your device

Dan Wexler pointed out this great page by skitten, which lets you quickly load up your Google Calendar with all SIGGRAPH events. Dan notes, “I’ve used these the past three years and they are fantastic”. Non-West-Coasters: don’t try to use these events for planning before SIGGRAPH, unless you go lock the time zone to West Coast times (or if you like to be three hours late for everything).

So if you want to thank Dan in person, see their talk, Intelligent Brush Strokes, around 4:20 pm Thursday, room 408A. Or virtually thank him by checking out the one-page abstract or the related, and brand-new, Glaze iPhad/iPone app.

JCGT Gathering at SIGGRAPH 2012

If you’re interested in the new open-access “Journal of Computer Graphics Techniques“, some of us editors, contributors, and other birds of a feather are informally meeting up at SIGGRAPH 2012. You’re welcome to come and chat (half the point of SIGGRAPH, amirite?):

Time: 5:30-6:30 pm Monday (just before this party in the same hotel)

Place: Marriott HQ hotel lobby, behind registration. This area:

Yes, this time conflicts with the Electronic Theater on Monday, etc., etc. – every time almost every day from 9 am to 8 pm on conflicts with something, and oddly no one wanted a 7 am meeting.

Morgan McGuire, the Editor-in-Chief of JCGT, won’t be attending SIGGRAPH, but has a great blog entry about JCGT’s progress and status. The whole post is worth reading, and I’ll repeat the last part here:

The answer comes in three parts. First, it isn’t that expensive to publish a graphics journal electronically. All of the writing, editing, and reviewing is done by volunteers and most of the software is free open source (LaTeX, BibTeX, Apache, MySQL, mod_xslt2, Emacs, Ubuntu, etc.). The board is unpaid, as is the case for most academic editorial positions. Graphics authors and editors are capable of producing professional-quality typesetting, layout, and diagrams on their own.

Second, Williams College has a grant from the Mellon Foundation to create digital archives to match the quality and reliability of the college’s substantial physical scholarly archives. Those physical archives are in rare books, visual art, scholarly journals, and congressional papers. I find the breadth and depth of those fascinating: the college’s holdings include original drafts of the US Declaration of Independence and Constitution, first editions of major scientific works such as Principia Mathematica, and paintings by major artists such as Picasso. The college is well-positioned to archive and conserve digital computer graphics papers and unlike a commercial publisher, an academic library has no agenda for those materials beyond preserving knowledge for all.

Third, the minor incidental costs of advertising, hosting, and legal are being picked up out of pocket by a few of us. Of of the financial contributions and dues I’ve given to graphics organizations, this was the one I was most pleased to make. We’re not accepting donations or seeking outside funding–that would subject us to bookkeeping overhead and legal requirements. If you want to support the journal, the best way to do so is to read it, write for it, and offer your services as a reviewer.

One other tidbit: the first paper accepted by JCGT has been downloaded over 10,000 times in four weeks. Clearly there’s a high curiosity factor for an inaugural article, but reaching this wide an audience is a good sign.

“Advances in Real-Time Rendering in Games” Course Syllabus for SIGGRAPH 2012

WebGL Resources

by Patrick Cozzi, a guest blogger

(I was corresponding with Patrick and found he knew way too much about WebGL, so asked him to write something down. – Eric)

Although I am a long-time C++ and OpenGL developer, I’ve been developing full-time in JavaScript and WebGL for the past year and a half on an open-source 3D engine, Cesium, for virtual globes and maps. Here are some of my favorite WebGL resources.

Reading

- Learning WebGL – Giles Thomas does a great job reporting the latest WebGL news and demos in his WebGL around the net posts.

- WebGL Camp is a mini WebGL conference. There have been four in the bay area, one in Orlando, and one in Switzerland. Videos, slides, and demos for all of them are online. Of particular interest is the WebGL game engine, turbulenz: slides • video.

- There are a few intro books including WebGL Beginner’s Guide by Diego Cantor and Brandon Jones, and WebGL: Up and Running by Tony Parisi. I haven’t read them, but the authors are well respected in the WebGL community.

- Our new book, OpenGL Insights, has 15 chapters related to WebGL, including a sample chapter, The ANGLE Project: Implementing OpenGL ES 2.0 on Direct3D by Daniel Koch and Nicolas Capens, that describes ANGLE, the default WebGL implementation on Windows in Chrome and Firefox.

SIGGRAPH

- The WebGL BOF, organized by Ken Russell, will have a series of five-minute lighting talks with a focus on demos, including a Cesium demo I’m giving. Last year the room was packed – people standing, sitting on the floor, and crowding around the door. Let’s hope the room is a lot bigger this year.

- Graphics Programming for the Web is a timely new course by Pushkar Joshi, Mikaël Bourges-Sévenier, Ken Russell, and Zhenyao Mo covering WebGL and other relavant HTML5 techniques. It sounds like it will be pretty broad, which is great for C++ developers like me that recently started to pretend to be web developers.

- Although not WebGL-specific, I’ll be at the Rest 3D BOF organized by Rémi Arnaud. I’ll even miss part of Beyond Programmable Shading for it. Rest 3D is defining a REST API for accessing 3D content over HTTP. If it gets widespread adoption from content providers, WebGL apps using the API will have access to a ton of content, which is a big win for everyone.

Need to convince management/leads to consider WebGL?

- WebGL is cross-platform, and doesn’t require an install, plugin, or admin rights. IE doesn’t support WebGL, but there are several options. We’ve found Chrome Frame to be the best because it installs without requiring admin rights, and also brings Chrome’s fast JavaScript engine.

- WebGL browser support is increasing. Check out the WebGL Stats by Florian Bösch. It currently reports that 65.6% of desktop browsers across all OSes support WebGL. (more stats for Firefox here).

- JavaScript doesn’t suck that much, really. JavaScript: The Good Parts by Douglas Crockford and his other JavaScript writings are great reads. There are downsides too, of course; for example, I have a much harder time rationalizing about performance in JavaScript than I do in C++. Fortunately, the built-in Chrome profiler is painless to use.

Tools

- The WebGL Inspector by Ben Vanik allows us to step through WebGL calls or just draw calls, and view textures, buffers, shaders, and the current state – think gDEBugger for WebGL. I like to use it as a sanity check to make sure we are not making too many draw calls or loading too many textures.

- Our WebGL Report uses a pipeline diagram to display the system’s WebGL capabilities such as maximum texture size and number of texture image units.

Finally, the WebGL wiki has a ton of great resources including a list of frameworks and more.

OpenGL Insights and more

In my previous blog post I mentioned the newly-released book OpenGL Insights. It’s worth a second mention, for a few reasons:

- The editors and some contributors will be signing their book at SIGGRAPH at the CRC booth (#929) at Tuesday, 2 pm. This is a great chance to meet a bunch of OpenGL experts and chat.

- Five free chapters, which can be found here. In particular, “Performance Tuning for Tile-Based Architectures” is of use to anyone doing 3D on mobile devices. Most of these devices are tile-based, so have a number of significant differences from normal PC GPUs. Reading this chapter and the (previously-mentioned) slideset Bringing AAA Graphics to Mobile Platforms (PDF version) should give you a good sense of the pitfalls and opportunities of mobile tile-based architectures.

- The book’s website has an OpenGL pipeline map page (direct link to PDF here). Knowing what happens when can clarify some mysteries and solve some bugs.

- The website also has a tips page, pointing out some of the subtleties of the API and the shading language.

While I’m at it, here are some other worthwhile OpenGL resource links I’ve been collecting:

- ApiTrace: a simple set of wrapper DLLs that capture graphics API calls (also works for DirectX). You can replay and examine just about everything – think “PIX for OpenGL”, only better. For example, you can edit a shader in a captured run and immediately see the effect. Also, it’s open-source and as of this writing is actively being developed.

- ANGLE: software to translate OpenGL ES 2.0 calls to DirectX 9 calls. This package is what both Chrome and Firefox use to run WebGL programs on Windows. Open source, of course. Actually, just assume everything here is open-source unless I say different (which I won’t).

- Edit: Patrick Cozzi (one of the editors of OpenGL Insights) notes that there are several options for WebGL on IE. “Currently, I think the best option is to use Chrome Frame. It painlessly installs without admin rights, and also brings Chrome’s fast JavaScript engine to IE. We use it on http://cesium.agi.com and I actually demo it on IE (including installing Chrome Frame) by request quite frequently.”

- Microsoft Internet Explorer won’t support WebGL, so someone else did as a plug-in.

- Equalizer: a framework for coarse-parallel OpenGL rendering (think multi-display and multi-machine).

- Oolong and dEngine: OpenGL ES rendering engines for the iPhone and iPad. Good for learning how things work. Oolong is 90% C++, dEngine is pure C. Each has its own features, e.g. dEngine supports bump mapping and shadow mapping.

- A bit dated (OpenGL ES 1.1), but might be of interest: a readable rundown of the ancient Wolf3D engine and how it was ported to the iPhone.

- gl2mark: benchmarking software for OpenGL ES 2.0

- Matrix libraries: GLM is a full-blown matrix library based on OpenGL naming conventions, libmatrix is a template library for vector and matrix transformations for OpenGL, VMSL is a tiny library for providing modern OpenGL with the modelview/projection matrix functionality in OpenGL 1.0.

- G3D: well, it’s more a user of OpenGL, but worth a mention. It’s a pretty nice C++ rendering engine that includes deferred shading, as well as ray tracing. I use it a lot for OBJ file display.

- OpenGL works with cairo, a 2D vector-based drawing engine. Funky.

Seven things for July 25

Here we go:

- Beautiful demo of various effects, the realtime hybrid raytracing demo RIGID GEMS. Do note there are controls. The foreground blurs for the depth-of-field are a little unconvincing, but the rest is lovely! (thanks to Steve Worley for the tip)

- Books to check out at SIGGRAPH, or now (I’m sure there are more – let me know): OpenGL Insights and Shadow Algorithms Data Miner. Five chapters of OpenGL Insights are free to read here. There are quite a few graphics books published since last SIGGRAPH, we have them listed here.

- Scalable Ambient Obscurance looks worthwhile, and there’s even a demo and source.

- I can’t say I grok it all yet, but Bringing AAA Graphics to Mobile Platforms (from GDC) has a lot of chewy information on what’s fast and slow on typical mobile hardware, as well as how it works. PDF version on the Unreal Engine site.

- A somewhat older (a whole year or so old) article on changing resolution on the fly to maintain frame rate. (Thanks, Mauricio)

- 3D printing opens up a wide range of legal issues, It Will Be Awesome if They Don’t Screw it Up gives an overview of some of these. There are a number of areas where the law hasn’t had to concern itself yet.

- Echo chamber: stuff you should probably know about already, but just in case. 1) Ouya, a monster money-raiser Kickstarter project for an open console. Tim Lottes comments; my take is “Android games on a console? Weak.” but I’d love to see them succeed. 2) Source Filmmaker, a free film making system from Valve. People are getting busy with it.

To conclude, a photo that looks like a rendering bug; read about it here. If you like these sorts of things, see more at the “2 True 2B Good” collection.

So what am I missing?

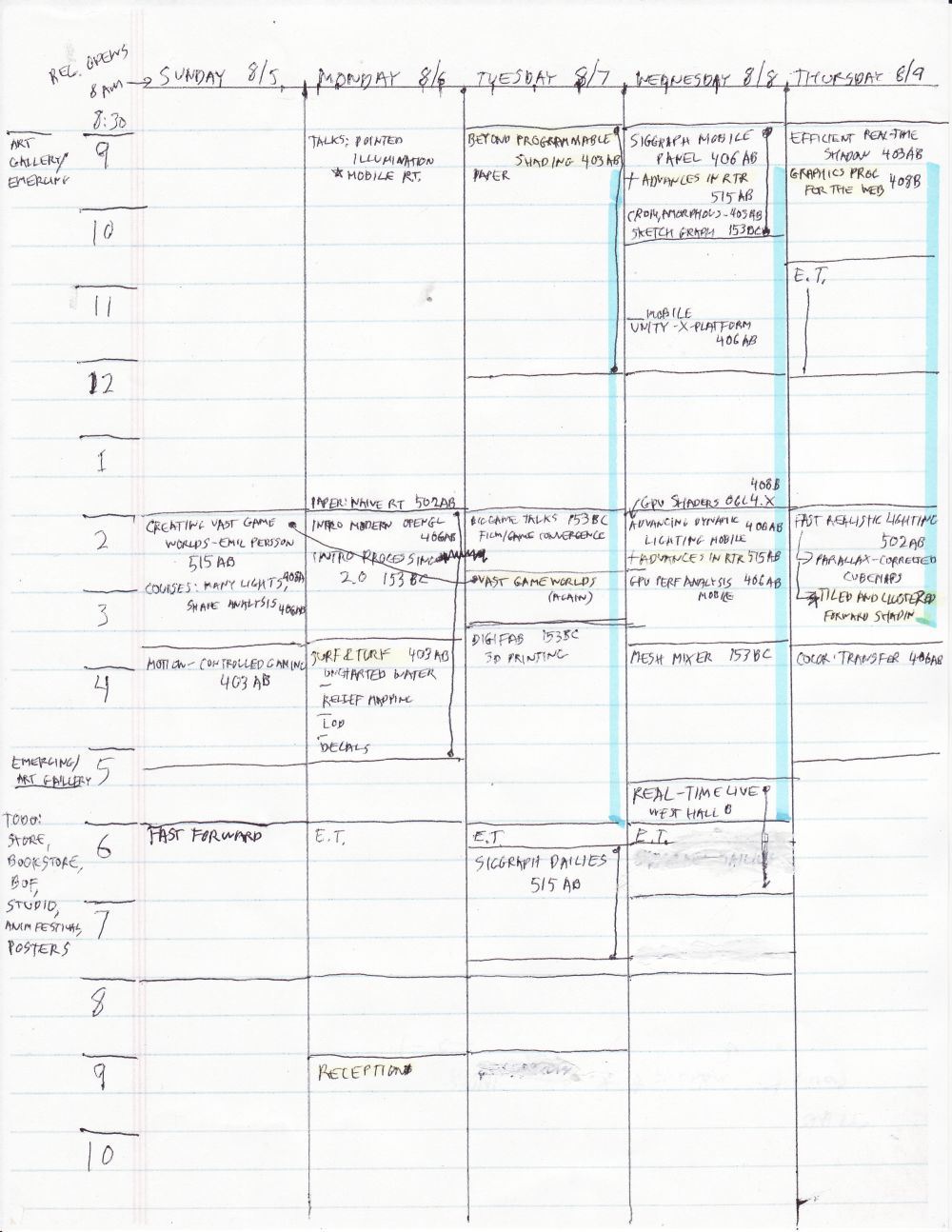

My schedule for SIGGRAPH so far (sans social gatherings), using this technology where you can put everything on this incredibly light-weight portable screen with an extremely high battery life (though the erase feature sucks if you use the high-contrast “ink” display mode):

I’ve tried various apps over the years and this is what works for me. On the back is plenty of room for quick notes on things to follow-up on after SIGGRAPH, if I write small enough.

Oh, and yes, Emil Persson’s talk is going to happen twice (not his fault, and I consider this A Very Good Thing), as, apparently, is the Processing 2.0 talk, also. Ah, wait, I just heard back from Andres, and the second Processing talk (on Tuesday) is cancelled.

Edits: added Fast Forward (thanks, Hanspeter). Also, I entirely forgot to look at the Exhibitor Talks, which have a few things of interest.

Oh, and here’s a neat Google Calendar thingy for SIGGRAPH 2012 that Dan Wexler pointed me at: http://skitten.org/2012/07/siggraph-2012-google-calendars/

Seven Things for June 22nd

Here goes:

- The Journal of Computer Graphics Techniques has published its first accepted article: “Importance Sampling of Reflection from Hair Fibers”. Free for download, of course.

- Mauricio Vives pointed out that the thirteenth article in a series going back to 2010 is now up: Fluid Simulation for Video Games. Not my particular interest, but my gosh this collection is impressive, it’s practically book-length at this point, and includes code snippets and a demo with source (you’ll need to install TBB to compile).

- A new book has been announced, coming out in October: The CUDA Handbook. The author, Nicholas Wilt, was a software architect at NVIDIA who worked on CUDA since its inception.

- John Owens pointed out an interesting way to search Google Scholar, by publications with the word graphics. This gives an interesting weighing of influence – no real surprises, and note that some conferences are not included (I highly doubt that EGSR, I3D, and HPG don’t make the cut).

- Sebastien Lagarde gives an in-depth analysis showing how the Phong and Blinn specular highlight models are related by a factor of 4 in the power. This is an old result from Fisher & Woo in Graphics Gems IV, but it’s nice to see an independent verification and analysis.

- Thinking of Graphics Gems, I wanted to mention this old piece of news now: Jim Arvo (editor of Graphics Gems II) passed away back on October 19th of last year. He did seminal work in ray tracing, Monte Carlo sampling, light transport, and many other areas, and was also just a great guy. See his homepage while it still is there.

- There are mutant women who live amongst us with a fourth type of cone cell. There’s more information on Wikipedia.

SIGGRAPH 2012 early registration deadline is today

If you’re reading this after June 18th, oh well…

Me, I wouldn’t rate SIGGRAPH the premier interactive rendering research conference any more: I3D or HPG publish far more relevant results overall. SIGGRAPH still has a lot of other great stuff going on, and there are enough things of interest to me this year that I’m happy to be attending:

- I guess the courses are the main draw for me right now, and some of these have become informal venues for interactive rendering R&D presentations (e.g. the Advances course).

- SIGGRAPH Mobile could be interesting. Given the huge profit margins of GPUs for mobile vs. PCs, it’s where the market has moved. It feels a little “back to the future”, with GPU speeds getting reset about a decade vs. PC performance, but there’s some interesting research being done, e.g. this paper (not at SIGGRAPH but at HPG, I noticed it today on Morgan McGuire’s Twitter feed and thought it was fascinating).

- I was thinking of arriving Sunday afternoon, but then noticed some interesting talks in the Game Worlds talks on Sunday, 2-3:30 pm.

- Other talks will be of interest, I’ll need to wade through the list.

- Emerging Technologies and the Exhibition Floor usually have something that grabs my attention (if nothing else, I can browse through new books), and I maybe should give Real Time Live a visit.

- And, meeting people, of course – it’s inspiring and fun to hear what others are up to. Sometimes a little chance conversation will later have great value.