- Fairly new book: Practical Rendering and Computation with Direct3D 11, by Jason Zink, Matt Pettineo, and Jack Hoxley, A.K.Peters/CRC Press, July 2011 (more info). It’s meant for people who already know DirectX 10 and want to learn just the new stuff. I found the first half pretty abstract; the second half was more useful, as it gives in-depth explanation of practical examples that show how the new functionality can be used.

- Two nice little Moore’s Law-related articles appeared recently in The Economist. This one is about how the law looks to have legs for a number of more years, and presents a graph showing how various breakthroughs have kept the law going over the past decades. Moore himself thought the law might hold for ten years. This one talks about how computational energy efficiency is doubling every 18 months, which is great news for mobile devices.

- I used to use MWSnap for screen captures, but it doesn’t work well with two monitors and it hangs at times. I finally found a replacement that does all the things I want, with a mostly-good UI: FastStone Capture. The downside is that it actually costs money ($19.95), but I’m happy to have purchased it.

- Ray tracing vs. rasterization, part XIV: Gavan Woolery thinks RT is the future, DEADC0DE argues both will always have a place, and gives a deeper analysis of the strengths and weaknesses of each (though the PITA that transparency causes rasterization is not called out) – I mostly agree with his stance. Both posts have lots of followup comments.

- This shows exactly how far behind we are in blogging about SIGGRAPH: find the Beyond Programmable Shading course notes here – that’s just a mere two months overdue.

- Tantalizing SIGGRAPH Talk demo: KinectFusion from Microsoft Research and many others. Watch around 3:11 on for the great reconstruction, and the last minute for fun stuff. Newer demo here.

- OnLive – you should check it out, it’ll take ten minutes. Sign up for a free account and visit the Arena, if nothing else: it’s like being in a sci-fi movie, with a bunch of games being played by others before your eyes that you can scroll through and click on to watch the player. I admit to being skeptical of the whole cloud-gaming idea originally, but in trying it out, it’s surprisingly fast and the video quality is not bad. Not good enough to satisfy hardcore FPS players – I’ve seen my teenage boys pick out targets that cover like two pixels, which would be invisible with OnLive – but otherwise quite usable. The “no download, no GPU upgrade, just play immediately” aspect is brilliant and lends itself extremely well to game trials.

Author Archives: Eric

Seven things for 10/10/11

- If you can get WebGL running properly on your browser, check out Shader Toy. Coolest thing is that you can edit any shader and immediately try it out.

- Another odd little WebGL application is a random spaceship maker, with a direct tie-in to Shapeways to buy a 3D version of any model you make.

- Speaking of Shapeways, I liked their “one coffee cup a day project“. The low-resolution cup is particularly good for computer graphics people, though I’m told that in real life it’s a fair bit more rounded off, due to the way the ceramic sets. Ironic. Also, note that these cups are actually quite small in real life (smaller than even espresso cups), which is too bad. Still, clever.

- Source code for iOS versions of Castle Wolfenstein and the original DOOM is now available.

- Patrick Cozzi has a nice rundown of his days at SIGGRAPH this August, with a particular emphasis on OpenGL and mobile. The links for each day are at the bottom of the entry.

- Nice fractal video generated in near-real time (300 ms/frame) running a GLSL shader using this code. Reddit thread here, about an earlier video now pulled back online.

- This site gives a darn long list of educational institutions offering videogame design degrees. It’s at least a place to start, if you’re looking for such things. That said, I’ve heard counterarguments from game company professionals to such specialized degrees, “just learn to program well and we’ll teach you the videogames business”.

Bonus thing: Draw a curve of your data for a number of years and see what it most closely correlates. Peculiar.

Predicting the Past

Inspired by Bing (a person, not a search engine) and by the acrobatics I saw tonight in Shanghai, time for a blog post.

So what’s up with graphics APIs? I’ve been working on a project for a fast 3D graphics system for Autodesk for about 4 years now; the base level (which hides the various flavors of DirectX and OpenGL) is used by Maya, Max, AutoCAD, Inventor, and other products. There are various higher-level optimizations we’ve added (and why Microsoft’s fxc effect compiler suddenly got a lot slower is a mystery), with some particularly nice work by one person here in the area of multithreading. Beyond these techniques, minimizing the raw number of calls to the API is the primary way to increase performance. Our rule of thumb is that you get about 1000-1500 calls a frame (CAD isn’t held to a 60 FPS rule, but we still need to be interactive). The usual tricks are to sort by state, and to shove as much geometry and processing as possible into a single draw call and so avoid the small batch problem. So, how silly is that? The best way to make your GPU run fast is to call it as little as possible? That’s an API with a problem.

This is old news, Tim Sweeney railed against API limitations 3 years ago (sadly, the article’s gone poof). I wrote about his ideas here and added my own two cents. So where are we since then? DirectX 11 has been out awhile, adding three more stages to the pipeline for efficient tessellation of higher-order surfaces. The pipeline’s feeling a bit unwieldy at this point, with a lot of (admittedly optional) stages. There are still some serious headaches for developers, like having to somehow manage to put lighting and material shading in the same pixel shader (one good argument for deferred lighting and similar techniques). Forget about optimization; the arcane API knowledge needed to get even a simple rendering on the screen is considerable.

I haven’t heard anything of a DirectX 12 in the works (except maybe this breathless posting, which I feel obligated to link to since I’m in China this month), nor can I imagine what they’d add of any significance. I expect there will be some minor XBox 72o (or whatever it will be called) -related tweaks specific to that architecture, if and when it exists. With the various CPU+GPU-on-a-chip products coming out – AMD’s Fusion family, NVIDIA’s Tegra 2, and similar from other companies (I think I counted 5, all totaled) – some access costs between the two processors become much cheaper and so change the rules. However, the API still looks to be the bottleneck.

Marketwise, and this is based entirely upon my work in scapulimancy, I see things shifting to mobile. If that isn’t at least the 247th time you’ve heard that, you haven’t been wasting enough time on the internet. But, it has some implications: first, DirectX 12 becomes mostly irrelevant. The GPU pipeline is creaky and overburdened enough right now, PC games are an important niche but not the focus, and mobile (specifically, iPad and other tablets) is fine with the functionality defined thus far by existing APIs. OpenGL ES will continue to evolve, but I doubt we’ll see for a good long while any algorithmically (vs. data-slinging) new elements added to the API that the current OpenGL 4.x and DX11 APIs don’t offer.

Basically, API development feels stalled to me, and that’s how it should be: mobile’s more important, PCs are a (large but slowly evolving) niche, and the current API system feels warped from a programming standpoint, with peculiar constructs like feeding text strings to the API to specify GPU shader effects, and strange contortions performed to avoid calling the API in order to coax the GPU to run fast.

Is there a way out? I felt a glimmer while attending HPG 2011 this year. The paper “High-Performance Software Rasterization on GPUs” by Samuli Laine and Tero Karras was one of my (and many attendees’) favorites, talking about how to efficiently implement a basic rasterizer using CUDA (code’s open sourced). It’s not as fast as dedicated hardware (no surprise there), but it’s at least in the same ball-park, with hardware being anywhere from 1.5x to 8.1x faster for their test cases, median being 3.6x. What I find exciting is the idea that you could actually program the pipeline, vs. it being locked away. They discuss ideas for optimization such as loosening the “first in, first out” rule for triangles currently enforced by all APIs. With its “yet another language” dependency, I can’t say I hope GPGPU is the future (and certainly CUDA isn’t, since it cuts out non-NVIDIA hardware vendors, but from all reports it’s currently the best way to experiment with GPGPU). Still, it’s nice to see that the fixed-function bits of the GPU, while important, are not an insurmountable limit in considering more flexible and general interactive rasterization programming models. Or, ray tracing – always have to stick that in there.

So it’s “forward to the past”, looking at traditional algorithms like rasterization and ray tracing and how to gain efficiency (both in raw speed and in development time) on various modern architectures. That’s ultimately what it’s about for me, at least: spending lots of time fighting the API, gluing together strings to make shaders, and all the other craziness is a distraction and a time-waster. That said, there’s a cost/benefit calculation implicit in all of this. For example, using C# or Java is way more productive than C++, I’d say about 2x, mostly because you’re not tracking down memory problems like leaks and access uninitialized or non-existent values. But, there’s so much legacy C++ code around that it’s still the language of graphics, as previously discussed here. Which means I expect none of the API weirdness to change for a solid decade, at the minimum. Please do go ahead and prove me wrong – I’d be thrilled!

Oh, and acrobatics? Hover your cursor over the image. BTW, the ERA show in Shanghai is wonderful, unlike current APIs.

Advances in RTR Course Notes up

I’m finally back from a nice post-SIGGRAPH vacation in the Vancouver area. Both our computers broke early on in the trip, so it was a true vacation.

I hope to post on a bunch of stuff soon, but wanted to first mention something now available: the slides and videos presented in the popular SIGGRAPH course “Advances in Real-Time Rendering in 3D Graphics”. Find them here, and the page for previous years (well, currently just 2010) here. Hats off to Natalya Tatarchuk and all the speakers for quickly making this year’s presentations available.

A.K. Peters books at SIGGRAPH and beyond

OK, so I like the publisher A.K. Peters, for obvious reasons. They’re also kind/smart enough to send me review copies of upcoming graphics-related books. I’ve received two recently, with one of particular interest:

… and free to veterans and unemployed professionals

Mauricio Vives pointed out that the Autodesk program I mentioned yesterday, where students and educators can get Autodesk products and training for free, also applies to veterans and “displaced professionals.” See this page for the logic. The fine print on the registration page is:

An Autodesk Assistance Program participant is either a veteran or unemployed individual who has (a) previously worked in the architecture, engineering, design or manufacturing industries, has completed the online registration for the Autodesk Assistance Program, and upon request by Autodesk is able to provide proof of eligibility for that program.

This is a nice thing.

All Autodesk software free to students and educators, and betas for everyone

I think I need to pop my head out of my gopher-hole more often and see what my company’s doing. It turns out Autodesk software – including Maya, 3DS Max, Mudbox, AutoCAD, and everything else – is now free to students and educators. Just register and you’re good to go. Wow, this is a big change from the old system, and is definitely great to see.

There are also a number of betas from Autodesk free to anyone: one is 123D, a modeler that is aimed to help out the Maker crowd and 3d printing. I’ve installed this but haven’t played with it yet.

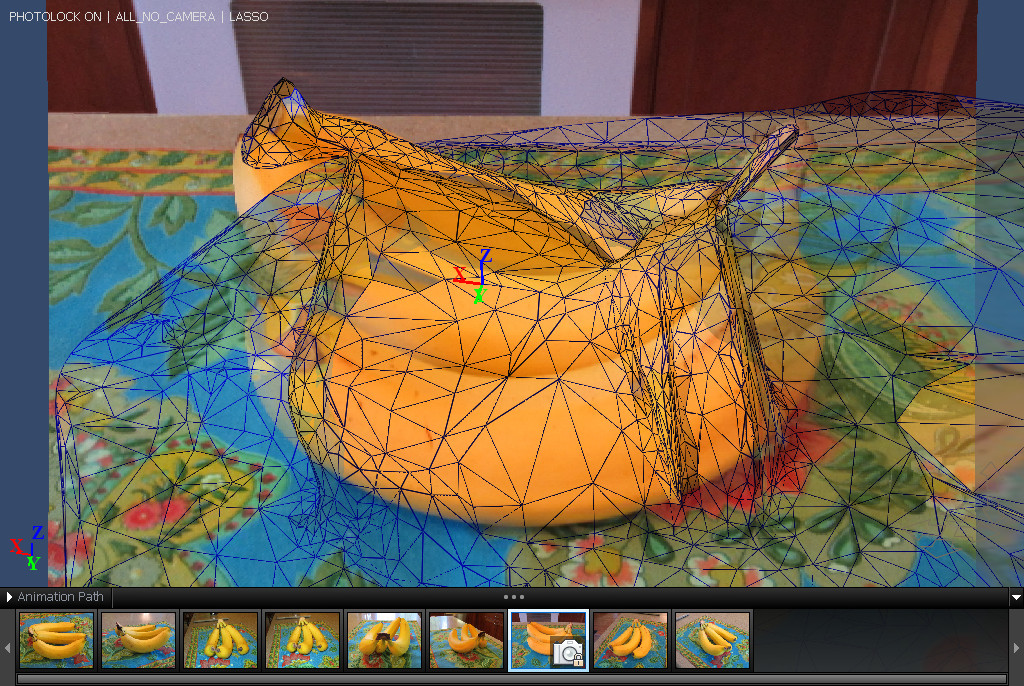

Another project is Photofly 2.0, where you upload a number of images and it makes a 3D model from the data (i.e., photogrammetry). This is similar to My3dScanner. I tried these two out on a set of photos of a bunch of bananas, some taken with a flash and some without, a hard test case. I definitely didn’t follow the guidelines. My3dScanner threw up its hands, Photosynth’s point cloud was incomprehensible, Photofly gave it a sporting chance, getting a cloud and making a mesh – no magic bullet yet, but fun to try. I’m now even tempted to RTFM, as results were better than I thought.

Photosynth (examine set of photos here):

Photofly’s cubist rendering – it did output an interesting Wavefront OBJ model:

Seven Things for July 26th, 2011

- First, if you’re going to HPG 2011, I’ll save you five minutes of searching for where it is: it’s at the Goldcorp Centre for the Arts, Google map here. Note also that things don’t start until 1:30 on Friday.

- SIGGRAPH parties? I know nothing, except that the official SIGGRAPH reception is 9 to 11 PM Monday at the convention center, and the ACM SIGGRAPH Chapters Party is 8:30 PM to 2 AM on, oh, Monday again. Odd scheduling.

- Timothy Lottes cannot be stopped: FXAA 3.11 is out (with improvements for thin lines), and 3.12 will soon appear. Note that the shader has a signature change, so your calling shader code will have to change, too.

- At the Motorola developer site there’s a quick summary of various image compression types used for mobile phones and PCs.

- Sebastien Hillaire implement the God Rays effect from GPU Gems 3, showing results and problems. Code and executable available for download.

- I’ve been enjoying some worthwhile articles on patents and copyrights lately, both new and old. Worth a mention: Myrhvold madness; a comic (a bit old but useful) on copyright – a good overview; The Public Domain, a free book by a law professor who helped establish Creative Commons; the July 2011 CACM (behind the paywall, though) had a nice article on why the U.S. dropped “opt-in” copyright back in 1989 (blame Europe). Best idea gleaned, from The Public Domain: the length of copyright is meant to motivate people to create works for payment, so a retroactive increase in the length of copyright (e.g., to protect Mickey Mouse) makes no sense – it creates no motivation for works already created.

- Polygon Pictures’ office corridor would be a bad place to be if you worked way too many hours. Otherwise, nice!

“OpenGL Insights” CFP Reminder

The call for participation for the “OpenGL Insights” book ends in a month. If you have a good tutorial or technique about OpenGL that you’d like to publish, please send on a proposal to them for consideration.

FXAA Rules, OK?

So there are those people out there that punch other people’s punchlines. Someone’s three quarters of the way through telling a joke, and a listener says, “oh, right, this one ends ‘to get to the other side'”. You don’t want to be that guy, but that’s a little bit how I feel writing about FXAA, given that there’s a whole course at SIGGRAPH next month about these sorts of antialiasing techniques. I blame Morgan McGuire’s Twitter feed, as he (and 17 others) retweeted Timothy Lottes’ posting that he had released shader code for FXAA. I’d seen FXAA mentioned before, NVIDIA put it in their DirectX 11 SDK. Which, frankly, is sadly misleading – the implication is that it works only on GTX 200-level hardware and above, when in fact it works on DirectX 9 shader model 3.0 hardware, GLSL 1.20, XBox 360, and Playstation 3, to name a few, and is optimized in various ways for newer GPUs. Anyway, seeing this shader code available, I was interested to try it out. Morgan mentioning that he liked it a lot got me a lot more interested. A few hours later…

So what the heck am I blathering about? To start, there are a number of these ??AA methods that are based on post-processing a color (and sometimes, also normal and depth) buffer. MLAA, morphological antialiasing, was the first used for 3D images, back in 2009. The basic idea is “find edges and smooth them”. The devil’s in the details, which is what the SIGGRAPH course will delve into (and I’ll certainly attend): how wide an area do you search to try to find a straight edge? how do you deal with curves and corners? how do you avoid oversmoothing thin edges, blurring them twice? how does it look frame to frame? and, most important if you want to use it interactively, how do you do this efficiently?

I’ve wanted an MLAA-like solution for two years, since before HPG 2009 when I noticed the MLAA paper on Ke-Sen’s pages and talked to Alexander Reshetov about it (who was very helpful and forthcoming). I even got a junior programmer to attempt to implement it in a shader, but the implementation was quite slow (due to a very wide search area) and ultimately flawed, and we didn’t have time to get back to it. Last year at SIGGRAPH there was a talk by a group in France, led by Venceslas Biri and Adrien Herubel, about implementing MLAA on the GPU, and they released source code. I spent a bit of time with their code, but it was developed on Linux and I had some problems getting it to work on Windows properly. My “I’ll just take a few hours and see where I get” time was gone, and still no easy solution. There were some other interesting bits out there, like the article in GPU Pro 2, Practical Morphological Anti-Aliasing, with even a github project, but there were different versions for DX9 and 10 (and not OpenGL), lots of files involved, and I didn’t want to get involved. Even Humus had a code sample, but I was still a bit shy to committing more time. (Also, his needs geometric information, and I wanted to antialias NPR edges formed by dilation, i.e., image processing, which have no underlying geometry).

Then the FXAA shader code was released: well-commented, with clear integration instructions, just needs a color buffer, and all in one shader file. FXAA is not the solution to all of life’s problems (or is it?), but for me, it’s wonderful. It took me all of an hour to fold into our system as a shader (and then another three debugging why the heck it wasn’t registering properly – our shader system turns out to be very particular about path names). The code runs on just about everything and has extensive comments. There are control knobs for the fiddlers out there, but I haven’t messed with these – it looks great out of the box to me.

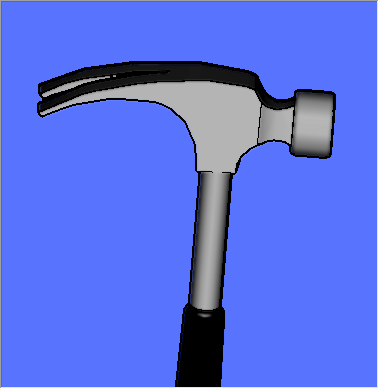

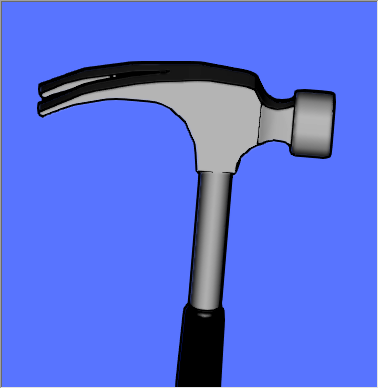

So, after all that breathless buildup, here’s the punchline:

On the left is your typical jaggy image, on the right is FXAA. Sure, it’s not perfect – nearly-vertical lines can look considerably better with a wider edge search area (as seen in MLAA), dropouts could be picked up by supersampling or MSAA, thin lines can have problems – but this shader gives a huge improvement with no extra samples, and just one pretty-quick pass (plus – full disclosure – a preprocess of computing the luminance/luma (grayscale) and shoving it in the alpha channel). Less than 1 millisecond cost per frame on a GTX 480? Works on sRGB and linear? Code’s in the public domain? Sign me up!

See lots more examples on Timoty Lottes’ page. Read his whitepaper for algorithm details and his newer posts for tweaks and improvements. An easy-to-use demo of an earlier version of his shader can be downloaded here – just hit the space bar to toggle FXAA on and off. Enjoy!