- I3D 2020 registration is open. It’s free, and registration lets you interact: ask questions, enter the virtual posters session space, speak up at the town hall, etc. Find the trailer here, whole schedule here, and keynotes here.

- Like clockwork, Stephen Hill’s SIGGRAPH 2020 Links page is up. SIGGRAPH itself provides an Open Access page to some sessions from this and past years.

- Speaking of open, if you’re not providing pre-prints of your work, you’re limiting your audience. The ACM has officially allowed pre-prints to also be posted to ArXiv now. Here’s the how-to guide.

- Here is a wonderful interactive article on photogrammetry, with 3D scenes contained within it. Just keep scrolling to see it all, or break off at various points to explore the environments along the way.

- Dave Eberly has a new book out, “Robust and Error-Free Geometric Computing.” My copy’s on order.

- This article discusses the history and goals of USD, the open source Universal Scene Description format that Pixar has developed and that is gaining traction in the film industry. Bonus: learn of the liver-peas rubric.

- This:

Author Archives: Eric

Seven things for July 30, 2020

Well, I have about 59 things, but here are the LIFO bits:

- Reverse Engineering the Rendering of Witcher 3, part 20 – Light Shafts: an incredibly detailed article about the beams of light glare effect. And it truly is part 20, see the index for the first 19. These will keep you busy for many hours.

- You’re back? OK, since it’s three weeks later, check out the Physically Based Shading in Theory and Practice course page, which I assume will have links to presentations once this SIGGRAPH course has been presented.

- This article on C++ compiler optimizations is incredible; I enjoyed it way more than I thought I would. There are some truly miraculous code substitutions that can take place under the hood. The article also provides ways to code to ensure the optimizer can do its magic.

- Need to make a video presentation? The grapevine notes that the free version of DaVinci Resolve is currently The One for video editing.

- WebGL not good enough for you? Want more control? WebGPU is for you.

- Shading languages too easy for you now? How about encoding shading instructions into textures? Bonus: no capitalization and poor punctuation.

- You can now boot a Windows 95 PC inside Minecraft and play Doom on it (and, yes, you can also play Minecraft on it). Though if you want to go old school, there’s a 64-bit redstone computer with a display that includes graphics acceleration, such as Bresenham’s line drawing and flood fill.

Cancel your SIGGRAPH hotel; attend EGSR, HPG, and I3D 2020 virtually, free

SIGGRAPH: don’t forget to cancel your hotel reservation if you have one. Physical SIGGRAPH is gone this year, but your reservation lives on – I just noticed mine was still in the system. If your reservation is like mine, you’ll want to cancel by July 6th or pay a night’s fee penalty. SIGGRAPH is of course virtual this year. August 24-28 is the week scheduled – beyond this, I do not know.

EGSR: It’s happening this week. I should have posted this a few days ago, but it’s all on YouTube, so you haven’t actually missed anything.

The 31st Eurographics Symposium on Rendering will be all virtual and free to attend this year: watch it here. Talks can be watched live or afterwards on YouTube. Full conference program: https://egsr2020.london/program/

Registration is optional, but gets you access to the chat system, where you can ask authors questions: https://egsr2020.london/. There are also two virtual mixers, Wednesday and Thursday: https://egsr2020.london/social-mixers/ – now to determine what 12:00 UTC is… (ah, I think that’s 8 AM U.S. Eastern Time).

HPG 2020: The High-Performance Graphics conference will happen virtually July 13-16. It is free, though there is a nominal fee if you want to attend the interactive Q&A sessions.

I3D 2020: The Symposium on Interactive 3D Graphics and Games is virtual and free to all this year. Dates are September 14-18. Details will be posted here as they become available.

As usual, publications and their related resources for these conferences are added by Ke-Sen Huang to his wonderful pages tracking them.

I’ll update this post as I learn more.

Ray Tracing Gems 2 updated deadlines

I’ll quote the tweet by Adam Marrs (and please do RT):

Due to the unprecedented worldwide events since announcing Ray Tracing Gems 2, we have decided to adjust submission dates for the book.

Author deadlines have been pushed out by five months. RTG2 will now publish at SIGGRAPH in August 2021, in Los Angeles.

More info here.

To save you a click, here are the key dates:

- Monday February 1st, 2021: first draft articles due

- Monday March 22nd, 2021: notification of conditionally and fully accepted articles

- Monday April 5th, 2021: final revised articles due

And if you’re wondering, SIGGRAPH 2021 starts August 1st, 2021.

The key thing in the CFP: “Articles will be primarily judged on practical utility. Though longer articles with novel results are welcome, short practical articles with battle-tested techniques are preferred and highly encouraged.”

It’s nice to see this focus on making the book more about “gems,” concise “here’s how to do this” articles. There are lots of little topics out there covered in (sometimes quite) older books and blogs; it would be nice to not have to read five different ones to learn best practices. So, please do go propose an article. Me, I’m fine if you want to mine The Ray Tracing News, Steve’s Computer Graphics Index, etc.

The Center of the Pixel is (0.5,0.5)

With ray tracing being done from the eye much more now, this is a lesson to be relearned: code’s better and life’s easier if the center of the pixel is the fraction (0.5, 0.5). If you are sure you’re doing this right, great; move on, nothing to see here. Enjoy this instead.

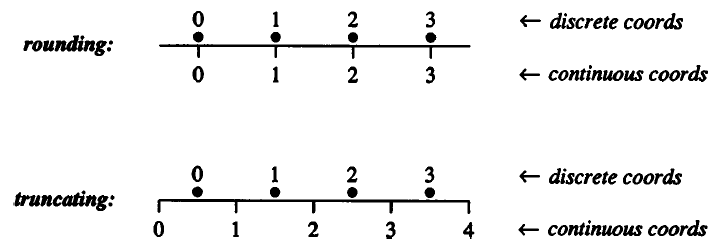

Mapping the pixel center to (0.5,0.5) is something first explained (at least first for me) in Paul Heckbert’s lovely little article “What Are the Coordinates of a Pixel?”, Graphics Gems, p. 246-248, 1990.

That article is hard to find nowadays, so here’s the gist. Say you have a screen width and height of 1000. Let’s just talk about the X axis. It might be tempting to say 0.0 is the center of the leftmost pixel in a row, 1.0 is the center of the pixel next to it, etc. You can even then use rounding, where floating-point coordinates of 73.6 and 74.4 both go to the center 74.0.

However, think again. Using this mapping gives -0.5 as the left edge, 999.5 as the right. This is unpleasant to work with. Worse yet, if various operators such as abs() or mod() get used on the pixel coordinate values, this mapping can lead to subtle errors along the edges.

Easier is the range 0.0 to 1000.0, meaning the center of each pixel is at the fraction 0.5. For example, integer pixel 43 then has the sensible range of 43.0 to 43.99999 for subpixel values within it. Here’s Paul’s visualization:

OpenGL has always considered the fraction (0.5,0.5) the pixel center. DirectX didn’t, at first, but eventually got with the program with DirectX 10.

The proper conversion from integer to float pixel coordinates is to add 0.5; from float to integer, use floor().
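As a minimal sketch of those two conversions (Python purely for illustration; the function names `pixel_center` and `pixel_index` are made up for this example and are not from Heckbert's article):

```python
import math

def pixel_center(i: int) -> float:
    """Integer pixel index -> continuous coordinate of that pixel's center."""
    return i + 0.5

def pixel_index(x: float) -> int:
    """Continuous coordinate -> index of the pixel that contains it."""
    return math.floor(x)

width = 1000  # same example resolution as in the text

# Continuous coordinates span 0.0 to width; pixel i covers [i, i + 1).
assert pixel_center(0) == 0.5
assert pixel_center(width - 1) == 999.5
assert pixel_index(43.0) == 43
assert pixel_index(43.99999) == 43

# Round trip: a pixel's center always maps back to that same pixel.
assert all(pixel_index(pixel_center(i)) == i for i in range(width))
```

With this convention the left edge of the row is exactly 0.0 and the right edge exactly 1000.0, so no pixel straddles zero.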

This is old news. Everyone does it this way, right? I bring it up because I’m starting to see in some ray tracing samples (pseudo)code like this for generating the direction for a perspective camera:

float3 ray_origin = camera->eye;

float2 d = 2.0 *

( float2(idx.x, idx.y) /

float2(width, height) ) - 1.0;

float3 ray_direction =

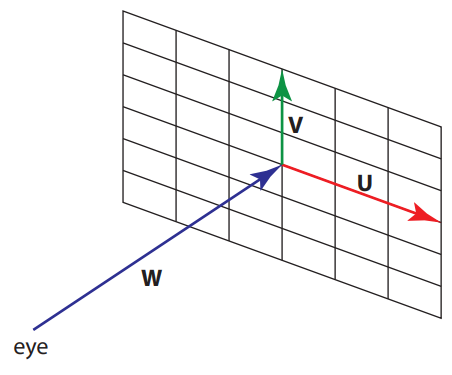

d.x*camera->U + d.y*camera->V + camera->W;

The vector idx is the integer location of the pixel, width and height the screen resolution. The vector d is computed and used to generate a world-space vector by multiplying it by two vectors, U and V. The W vector, the camera’s direction in world space, is added in. U and V represent the positive X and Y axes of a view plane at the distance of W from the eye. It all looks nice and symmetric in the code above, and it mostly is.

The vector d is supposed to represent a pair of values from -1.0 to 1.0 in Normalized Device Coordinates (NDC) for points on the screen. However, the code fails. Continuing our example, integer pixel location (0,0) goes to (-1.0,-1.0). That sounds good, right? But our highest integer pixel location is (999,999), which converts to (0.998,0.998). The total difference of 0.002 is because this bad mapping shifts the whole view over half a pixel. These pixel centers should be 0.001 away from the edge on each side.

The second line of code should be:

```
float2 d = 2.0 *
    ( ( float2(idx.x, idx.y) + float2(0.5,0.5) ) /
      float2(width, height) ) - 1.0;
```

This then gives the proper NDC range for the centers of pixels, -0.999 to 0.999. If we instead transform the floating-point corner values (0.0,0.0) and (1000.0,1000.0) through this transform (we don’t add the 0.5 since we’re already in floating point), we get the full NDC range, -1.0 to 1.0, edge to edge, proving the code correct.
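To see the difference numerically, here is a small sketch that runs both mappings on one axis of the 1000-pixel example. It is written in Python only for convenience, and the function names `ndc_no_offset` and `ndc_center` are hypothetical labels for the two versions of the line above:

```python
import math

def ndc_no_offset(i: int, size: int) -> float:
    """The shifted mapping: uses the integer index itself as the sample point."""
    return 2.0 * (i / size) - 1.0

def ndc_center(i: int, size: int) -> float:
    """The corrected mapping: samples the pixel center at i + 0.5."""
    return 2.0 * ((i + 0.5) / size) - 1.0

width = 1000

bad_lo, bad_hi = ndc_no_offset(0, width), ndc_no_offset(width - 1, width)
good_lo, good_hi = ndc_center(0, width), ndc_center(width - 1, width)

# Without the offset, the first and last samples are not symmetric about 0:
# they sum to about -0.002, one full pixel width in NDC.
assert math.isclose(bad_lo + bad_hi, -2.0 / width, abs_tol=1e-12)

# With the offset, the samples sit half a pixel (0.001) inside each edge.
assert math.isclose(good_lo + good_hi, 0.0, abs_tol=1e-12)
assert math.isclose(good_hi, 1.0 - 1.0 / width, abs_tol=1e-12)

print(bad_lo, bad_hi)
print(good_lo, good_hi)
```

The same check works for any resolution: the corrected samples should always sum to zero across a row, up to floating-point error.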

If the 0.5 annoys you and you miss symmetry, this formulation is elegant when generating random values inside a pixel, i.e., for when you’re antialiasing by shooting more rays at random through each pixel:

```
float2 d = 2.0 *
    ( ( float2(idx.x, idx.y) +
        float2( rand(seed), rand(seed) ) ) /
      float2(width, height) ) - 1.0;
```

You simply add a random number from the range [0.0,1.0) to each integer pixel location value. The average of this random value will be 0.5, at the center of the pixel.
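A quick way to convince yourself of this is to generate a batch of jittered samples for one pixel and check where they land. This is only a sketch: Python's `random.Random` stands in for whatever `rand(seed)` is in the pseudocode above, and `jittered_ndc` is a name invented for the example.

```python
import random

def jittered_ndc(i: int, size: int, rng: random.Random) -> float:
    """NDC coordinate of a random sample inside pixel i (one axis only)."""
    # rng.random() returns a float in [0.0, 1.0).
    return 2.0 * ((i + rng.random()) / size) - 1.0

width = 1000
pixel = 43
rng = random.Random(1234)  # fixed seed, only so the sketch is repeatable

samples = [jittered_ndc(pixel, width, rng) for _ in range(10000)]

# Every sample stays within this pixel's extent in NDC...
lo = 2.0 * (pixel / width) - 1.0
hi = 2.0 * ((pixel + 1) / width) - 1.0
assert all(lo <= s <= hi for s in samples)

# ...and the samples average out close to the pixel center at pixel + 0.5.
center = 2.0 * ((pixel + 0.5) / width) - 1.0
mean = sum(samples) / len(samples)
assert abs(mean - center) < 1e-4
```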

Long and short: beware. Get that half pixel right. In my experience, these half-pixel errors would occasionally crop up in various places (cameras, texture sampling, etc.) over the years when I worked on rasterizer-related code at Autodesk. They caused nothing but pain on down the line. They’ll appear again in ray tracers if we’re not careful.

Seven Things for April 17, 2020

Seven things, none of which have to do with actually playing videogames, unlike yesterday’s listing:

- Mesh shaders are A Big Deal, as they help generalize the rendering pipeline. If you don’t yet know about them, Shawn Hargreaves gives a nice introduction. Too long? At least listen to and watch the first minute of it to know what they’re about, or six minutes for the full introduction. For more more more, see Martin Fuller’s more advanced talk on the subject.

- I3D 2020 may be postponed, but its research papers are not. Ke-Sen Huang has done his usual wonderful work in listing and linking these.

- I mentioned in a previous seven things that the GDC 2020 content for graphics technical talks was underwhelming at that point. Happily, this has changed, e.g., with talks on Minecraft RTX, World of Tanks, Wolfenstein: Youngblood, Witcher 3, and much else – see the programming track.

- The Immersive Math interactive book is now on version 1.1. Me, I finally sat still long enough to read the Eigenvectors and Eigenvalues chapter (“This chapter has a value in itself”) and am a better person for it.

- Turner Whitted wrote a retrospective, “Origins of Global Illumination.” Paywalled, annoyingly, something I’ve written the Editor-in-Chief about – you can, too. Embrace being that cranky person writing letters to the editor.

- I talk about ray tracing effect eye candy a bit in this fifth talk in the series, along with the dangers of snow globes. I can neither confirm nor deny the veracity of the comment, “This whole series was created just so Eric Haines would have a decent reason to show off his cool glass sphere burn marks.” BTW, I’ll be doing a 40 minute webinar based on these talks come May 12th.

- John Horton Conway is gone, as we likely all know. The xkcd tribute was lovely, SMBC too. In reading about it, one resource I hadn’t known about was LifeWiki, with beautiful things such as this Turing machine.

Seven Things for April 16, 2020

Here are seven things, with a focus on videogames and related things this time around:

- Minecraft RTX is now out in beta, along with a tech talk about it. There’s also a FAQ and known issues list. There are custom worlds that show off effects, but yes, you can convert your Java worlds to Bedrock format. I tried it on our old world and made one on/off video and five separate location videos, 1, 2, 3, 4, 5. Fun! Free! If you have an RTX card and a child, you’ll be guaranteed to not be able to use your computer for a month. Oh, and two pro tips: “;” toggles RTX on/off, and if you have a great GPU, go to Advanced Video settings and crank the Ray Tracing Render Distance up (you’ll need to do this each time you play).

- No RTX or home schooling? Try Minecraft Hour of Code instead, for students in grades 2 and up.

- There’s now a minigame in Borderlands 3 where you solve little DNA alignment puzzles for in-game bonuses. The loot earned is absurdly good at higher levels. Gearbox finally explained, with particularly poorly chosen dark-gray-on-black link text colors, what (the heck) the game does for science. It seems players are generating training sets for deep learning algorithms, though I can’t say I truly grok it.

- Beat Saber with a staff is hypnotic. You can also use your skills outside to maintain social distancing.

- A few Grand Theft Auto V players now shoot bullets to make art. Artists have to be careful to not scare the NPCs while drawing with their guns, as any nearby injuries or deaths can affect the memory pool and so might erase the image being produced.

- Unreal Engine’s StageCraft tech was used to develop The Mandalorian. I’m amazed that a semicircular wall of LED displays could give realistic backgrounds at high enough resolution, range, and quality in real time. It has only 28 million pixels for a 270 degree display, according to the article – sounds like a lot, but note a single 4K display is 3840 * 2160 = 8.3 million pixels.

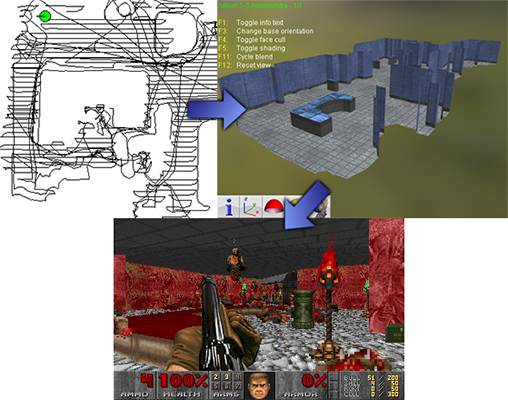

- Stuck inside and want to make your housing situation an infernal hellscape, or at least more of one? Doomba’s the solution. It takes your Roomba’s movement information and turns it into a level of classic Doom.

Made it this far? Bonus link, since it’s the day after U.S. taxes were due, but now deferred until July 15th: Fortnite virtual currency is not taxable.

Seven Things for March 31, 2020

Seven things, mainly because I want to bring attention to the first and last items:

- The ACM Digital Library is now free to everyone until June 30th. Get downloadin’!

- I3D 2020 is postponed until (at least) September, but the papers accepted have been announced and will be published in May.

- GDC 2020 didn’t happen in the flesh, but there are talks online. Sadly, though, there look to be none about computer graphics techniques.

- GTC 2020 this week was also virtual. It included a few graphics-related talks, and session content is starting to appear to watch. For example, there’s a quick demo of RTX in Blender’s Cycles renderer, a talk about RTX Minecraft, and a panel on the future of GPU ray tracing, among others. You have to register, but it’s free.

- Who knows the fate of SIGGRAPH this year, but I’m happy to see that the dynamic diffuse global illumination research presented at GDC and SIGGRAPH last year is now a free, open-source SDK.

- The free book Ray Tracing in One Weekend has been updated and its code base improved. If you’ve been “meaning to check it out someday,” today’s that day.

- AMD’s joined the party, announcing dedicated ray tracing support in their new RDNA 2 GPU line; video demo here.

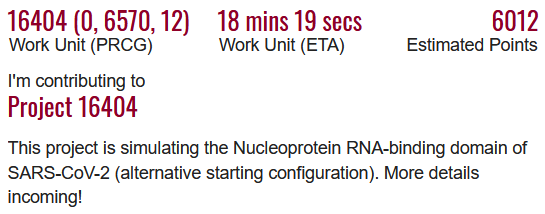

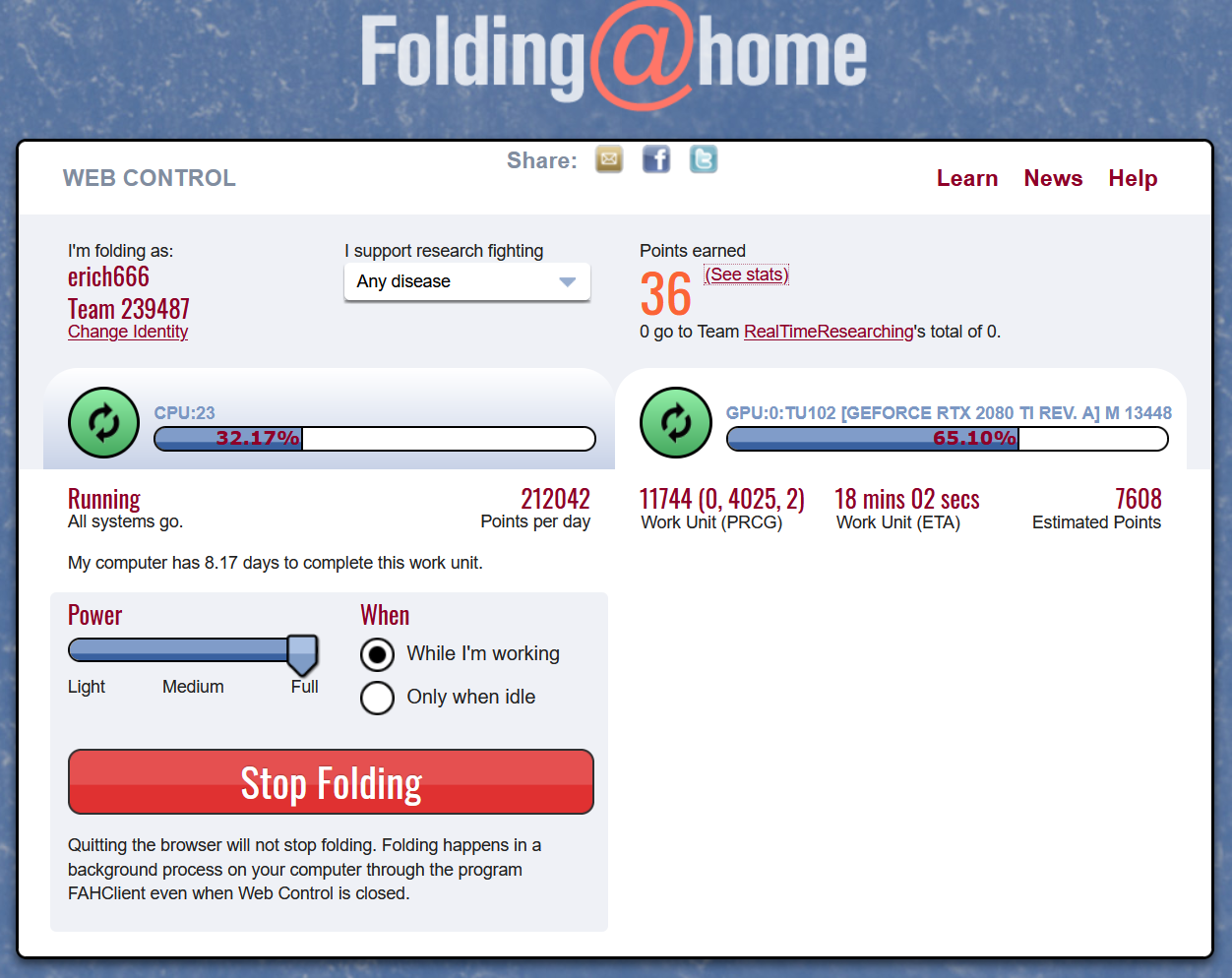

Previous post followup: I noticed today I got a work unit for coronavirus on Folding@Home. The psychology here is pretty odd, “oh, foo, my work unit is just helping cure cancer – better luck next time.” Oh, and join our team! We’re in 2326th place, but with your help we’ll get to 2325th in no time. In first place is CureCoin, crypto-currency meets protein folding, aka, “what does anything even mean any more?” We’re living in a William Gibson novel.

It Can’t Hurt: Folding@Home

Remember Folding@Home? I admit it fell off my radar. You, yes, you, have an overpowerful computer and barely use most of its resources most of the time. Folding@Home applies these resources to medical research. They’re now including exploration into COVID-19 as part of their system (update here).

Download it. Good installation instructions here (though join our team, not theirs). FAQ here. I invite you to join my “I created it 10 minutes ago” team: RealTimeResearching, #239487 (update: and thanks to the people who’ve joined – it’s comforting to me knowing you’re doing this, too. 35+ strong and counting.)

More Folding@Home COVID-19 related information here. Folding@Home is now more powerful than the top seven supercomputers in the world, combined.

Update: word has spread like, well, a virus. They’re currently getting so much home support that they don’t have enough work units to go around! Keep your client running. Want updates? Follow Greg Bowman.

Attending (or wanting to attend) I3D 2020?

Registration will go up in a few weeks for I3D 2020, May 5-7, at the ILM Theater, The Presidio, San Francisco. Before then, here are a few things you should know and take advantage of.

COVID-19 update: At this point I3D 2020 is proceeding on schedule for May 5-7. We continue to watch the situation and expect to make a final determination in early April. The advance program, registration, and more details will be available soon. Before then we recommend booking refundable elements, such as your hotel room.

First, are you a student? For the first time, we have a travel grant program in place, due to strong support from sponsors this year. The main thing you need is a PDF of a letter from your advisor. If your department cannot fully support the cost of your attendance, you should apply for it. The deadline was March 1 and has now passed.

Next, the posters deadline is March 13th.

Third, and important to all, reserve your lodging now. Really. The reason is that lodging might get tight; I noticed that IBM’s Think conference, at Moscone Center, is the same week. In the past, over 30,000 people attended that event. We are currently looking into a hotel discount, but that seems unlikely for the Marina District. If we do get one, you can always switch.

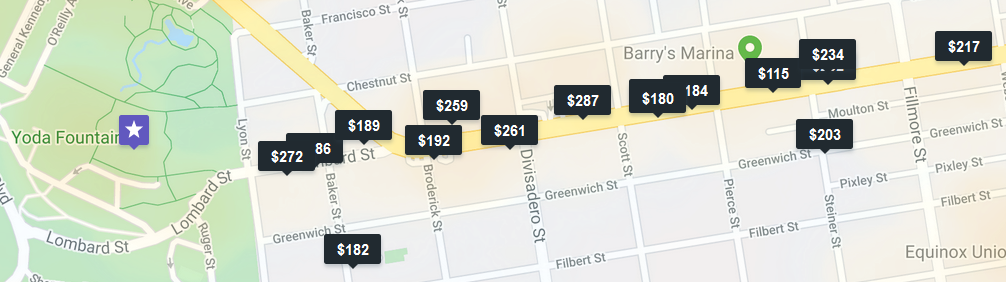

Here’s a search for lodging, using Kayak, which gave the most options of the searchers I tried. You’ll want to zoom into the Presidio area (shame on Kayak for not recording my current map view), shown below, if you want to be able to walk to the conference. I capped this search at $308/day – change that value and the dates at the top of that search page as you wish. Pay attention to cancellation details. If you are sharing lodging with a few people, move that cost slider up, as there are some property rentals for larger groups.

Other area searches: AirBNB and TripAdvisor. Me, I’m at the Days Inn – Lombard. It’s a bed, which is all I need, it’s on NVIDIA’s approved lodging list, and it’s a 15 minute walk to the Yoda Fountain. You want something a little nicer and much closer? This Travelodge is a 4 minute walk from the fountain (the $272 on the left):

If you want to take a look down Lombard and scope it out, starting at the gate to the Presidio at Lyon Street, click here. If you’d like to see too many photos of the I3D 2020 venue (spoiler alert!), you can see my fact-finding album, with a mix of beautiful and boring.

The number of submissions for I3D this year continues to be high:

Total reviewed submissions: 60

Conference paper acceptance: 17

PACM journal acceptance: 9

Acceptance rate: 43%

The schedule will also be up soon. In the meantime, our keynote speakers will be great, with one more speaker from Unreal to be announced.

Hope to see you there!