I saw this work at the New Orleans Museum of Art – très ray tracing. See if you can figure out why it looks how it does, from these images and film clip. Last image in album is the reveal.

The influx of registration spam (fake accounts registering) is just too much for me to keep up with.

Sorry.

Time to clear the link collection before GDC/GTC – download some web pages for the plane ride.

OK, even I’m getting a bit tired of writing about Ray Tracing Gems (not to mention Real-Time Rendering) goings-on. But, a few things:

Really, all this info except the Real-Time Rendering signing is covered on http://raytracinggems.com

Book signing (in yellow) map for GDC, right next to the restrooms – classy 🙂

On November 1st of every year, the Finnish government publishes everyone’s salary. It’s a nice leveler of the playing field for workers, as they can see if they’re paid fairly and what to expect at another company.

In the U.S. we generally know elected officials’ salaries (president $400,000, senator $174,000, etc.) but not much else. Except for non-profits, who have to file public tax returns. Update: Angelo Pesce pointed out this useful site.

I’m doing my taxes this weekend. To avoid that time-sucking task – one that takes only five minutes in the Netherlands – I decided to go look up the ACM’s and IEEE’s forms. It takes a bit of searching, but it’s interesting to see where the money goes. To start, here are some ACM salaries from their 2015 return (actual return here):

Half a million for the outgoing Chief Executive Director – not bad. I’ve asked around, and on one level this amount is a bit shocking, but it’s evidently (for good or ill) the norm for non-profits of this size.

Putting this info on the blog makes me feel a bit embarrassed; it’s breaking a social norm here, revealing a salary. But, it’s public knowledge! We’re now used to services such as Zillow to see someone’s property value – something we would have had to work hard to do back in 2005 (e.g., go to some government office and look up the deed). However, in the U.S., knowing someone’s salary is usually not something you can look up and is pretty taboo. So, cheap thrills: for at least a few people, it’s now easy to do.

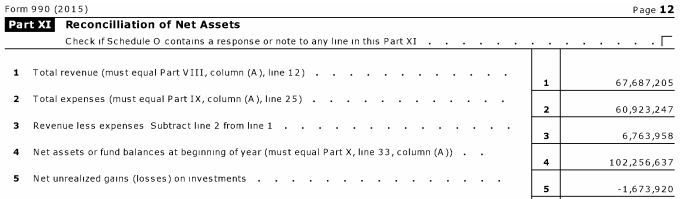

Wading through the rest of the return turns up various tidbits. For example, the ACM’s overall budget:

So they added about $6.7 million to their total assets in 2015, and ended the year with assets of:

I find these documents worth poking at, just to get a sense of what’s important. For example, the ACM makes its revenue as follows (see Part VIII for details):

Expenses, in Part IX, go on and on, with a large portion of the $60 million going to conferences:

Conferences raise $29 million each year (the revenue snippet), so I conclude that conferences netted $5 million for the ACM in 2015. I’m glad to hear that, rather than the opposite. Me, I’m curious how much the ACM Digital Library costs and how much revenue it raises from individuals and institutions, but these numbers are not found here. I asked once back in 2012; the ACM doesn’t split out the DL income and costs from its other publication efforts.

There are lots of other tidbits in the return, but take a look for yourself.

Let’s go visit the IEEE – hmmm, wait, there are two of them. But both have small budgets, less than $7 million, so that’s not them. Searching a bit (quick, what does “IEEE” stand for?), I found them:

The Assistant Secretary and Executive Director (one person) gets $1.2 million – OK. The actual Director & Secretary doesn’t get paid at all, which I find entertaining somehow:

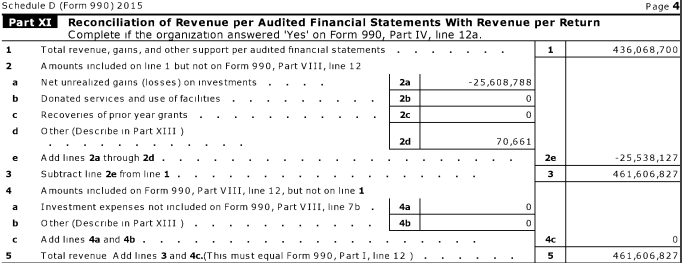

Now, the IEEE’s budget is a lot higher than the ACM’s, $436 million vs. $60 million:

It’s a different report format, so it’s not clear to me what assets they have.

It’s fun to poke around, e.g.:

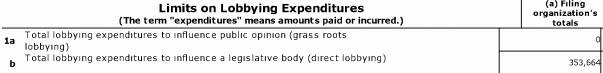

ACM doesn’t seem to have any lobbying expenses. I wonder what the IEEE lobbies for – they’re really not spending much, but it’s more than zero. Better electricity? Lobbying is not in and of itself bad (I’m just fine with the AMA lobbying against tobacco use), but it’s interesting to see and great for forming unwarranted conspiracy theories.

OK, enough goofing off, this took way more time than I expected; back to my own taxes.

Tomas and I turned over all our final files for Ray Tracing Gems to the publisher on January 2, and we’re gathering edits from the authors. The Table of Contents for the 32 articles is now public. The publisher’s webpage is up. There’s an Amazon page in progress (BTW, the after-the-colon title, “High-Quality and Real-Time Rendering with DXR and Other APIs,” was requested by the publisher to help search engines find the book).

The hardback book should be available at GDC and GTC, with a free electronic version(s) available sometime before or around then, along with a source code repository. Also, the book is open access, under this CC license. This means that the authors, or anyone else, can redistribute these articles as they wish, as long as it’s a non-commercial use and they credit the book as the source.

Here’s the cover, which should be on the other sites soon.

By the way, if you want to read an article about ray tracing actual gems, this one is a good place to start. I happened upon it by accident, and it’s educational, approachable, and not dumbed down. The design criteria for a good gem cut are fun to read about: maximize reflected light as well as contrast, take into account that the viewer’s head will block off light, and so on. If you need a more serious paper from graphics people, there’s this article. Surprisingly, though fairly old, it is newer than any of the articles cited by the first, much newer article.
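A bit of the basic physics behind "maximize reflected light": light inside a gem that strikes a back facet at more than the critical angle, θc = arcsin(n_outside / n_inside), is totally internally reflected back toward the viewer instead of leaking out. The minimal Python sketch below computes θc, assuming typical refractive indices of about 2.42 for diamond and 1.5 for glass (my values, not taken from either article); it is not the cut-design method those articles describe.

```python
import math

def critical_angle_deg(n_inside: float, n_outside: float = 1.0) -> float:
    """Incidence angle (from the facet normal) beyond which light travelling
    inside a medium of index n_inside is totally internally reflected."""
    if n_inside <= n_outside:
        raise ValueError("total internal reflection needs n_inside > n_outside")
    return math.degrees(math.asin(n_outside / n_inside))

# Typical indices, assumed for illustration: diamond ~2.42, glass ~1.5.
for name, n in (("diamond", 2.42), ("glass", 1.5)):
    print(f"{name}: critical angle ~ {critical_angle_deg(n):.1f} degrees")
```

Diamond comes out near 24 degrees versus about 42 for glass, so a much wider range of internal rays bounce off diamond's back facets, which is part of why cut geometry matters so much for it.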

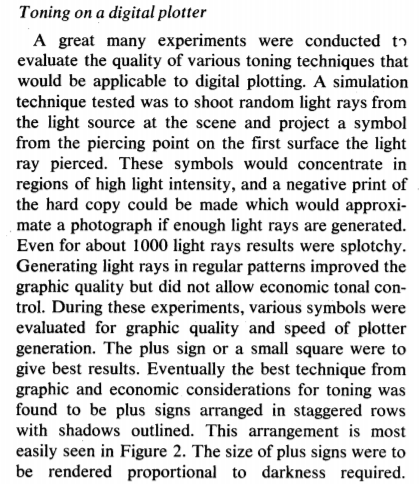

Well, not exactly Atomic Age, which I think of as late ’40s through mid ’60s, but close – what would you call it when you’re rendering on a plotter and drawing “+” signs for shading?

Arthur Appel’s paper from 1968 is considered the first use of ray casting for rendering, for eye rays and shadow rays.

One surprising part is that the paper includes the idea of shooting rays from the light and depositing the results on the surfaces – early photon mapping, or radiance caching, or something (details aren’t clear).
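To make the eye-ray/shadow-ray idea concrete, here is a minimal sketch in Python: for each character cell, cast an eye ray, find the nearest surface, then cast a shadow ray toward the light to decide whether the point is lit. The scene (one sphere on a ground plane, one point light), the character ramp, and every name here are hypothetical. This is a generic modern ray caster, not a reconstruction of Appel’s 1968 program; printing characters is only a nod to his plotter shading.

```python
from __future__ import annotations
import math

# Hypothetical scene (not from Appel's paper): a unit sphere resting on the
# ground plane y = 0, lit by one point light, viewed from an eye at EYE.
Vec = tuple[float, float, float]
SPHERE_C: Vec = (0.0, 1.0, 5.0)
SPHERE_R = 1.0
LIGHT: Vec = (-4.0, 6.0, 2.0)
EYE: Vec = (0.0, 1.5, 0.0)
EPS = 1e-4  # offset so shadow rays don't re-hit their own surface

def add(a: Vec, b: Vec) -> Vec: return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a: Vec, b: Vec) -> Vec: return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a: Vec, s: float) -> Vec: return (a[0] * s, a[1] * s, a[2] * s)
def dot(a: Vec, b: Vec) -> float: return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a: Vec) -> Vec: return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(o: Vec, d: Vec) -> float | None:
    """Nearest t > EPS where o + t*d meets the sphere (d must be unit length)."""
    oc = sub(o, SPHERE_C)
    b = dot(oc, d)
    disc = b * b - (dot(oc, oc) - SPHERE_R * SPHERE_R)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > EPS:
            return t
    return None

def hit_plane(o: Vec, d: Vec) -> float | None:
    """t > EPS where o + t*d meets the ground plane y = 0."""
    if abs(d[1]) < 1e-9:
        return None
    t = -o[1] / d[1]
    return t if t > EPS else None

def trace(o: Vec, d: Vec) -> tuple[float, Vec] | None:
    """Closest hit as (distance, surface normal), or None if the ray escapes."""
    ts, tp = hit_sphere(o, d), hit_plane(o, d)
    if ts is not None and (tp is None or ts < tp):
        return ts, normalize(sub(add(o, scale(d, ts)), SPHERE_C))
    if tp is not None:
        return tp, (0.0, 1.0, 0.0)
    return None

def shade(d: Vec) -> str:
    """Eye ray, then a shadow ray toward the light; returns one character."""
    hit = trace(EYE, d)
    if hit is None:
        return " "                                 # background
    t, n = hit
    p = add(EYE, scale(d, t))
    to_light = sub(LIGHT, p)
    dist = math.sqrt(dot(to_light, to_light))
    l = scale(to_light, 1.0 / dist)
    blocker = trace(add(p, scale(n, EPS)), l)      # the shadow ray
    if blocker is not None and blocker[0] < dist:
        return "."                                 # in shadow
    lambert = max(0.0, dot(n, l))                  # simple diffuse term
    return "-" if lambert < 0.33 else "+" if lambert < 0.66 else "#"

def render(width: int = 48, height: int = 20, fov: float = 0.5) -> list[str]:
    """One eye ray per character cell; cells are assumed ~2x taller than wide."""
    rows = []
    for j in range(height):
        y = fov * (1.0 - 2.0 * (j + 0.5) / height)
        row = ""
        for i in range(width):
            x = fov * (2.0 * (i + 0.5) / width - 1.0) * (width / height) * 0.5
            row += shade(normalize((x, y, 1.0)))
        rows.append(row)
    return rows

if __name__ == "__main__":
    print("\n".join(render()))
```

Everything beyond one eye ray and one shadow ray per pixel (reflection, refraction, multiple lights) came later; this is about as far as the eye-ray/shadow-ray idea goes on its own.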

Update: an anonymous source let me know, “When I worked briefly at the IBM TJ Watson lab, I made a point of seeking out Art Appel. He was friendly and nice. As I recall, he said that he was working as technical support at the time of the ray tracing work. As he described it, most of his day was spent hanging around, waiting to answer the phone and help people with computer issues (this may have been a self-effacing description of a very different or important job, for all I know). He said that he had lots of free time during the day, and he was interested in using the computer to make images, so his ray tracing work was basically a hobby!”

No, I’m not kidding; yes, it’s the 3rd edition. See the announcement (an interesting read – I loved the first cover proposal), or the front page, or jump right to the table of contents. It’s HTML only for now (there’s also an ancient first draft available), but free is pretty great.

First, the links you need:

Beyond all the deep ray learning tracing, which I’ve noted in other tweets and posts, the one technology on the show floor that got “you should go check it out” buzz was the Looking Glass Kickstarter, a good-looking and semi-affordable (starting in the $600 range, not thousands or higher) “holographic” display. 60 FPS color, 4 and 8 megapixel versions, but those pixels are divided up among the 45 views that must be generated each frame. Still, it looked lovely, and vaguely magical (and has Sketchfab support).
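For a sense of scale, here is the arithmetic behind "those pixels are divided up among the 45 views," sketched in Python. It assumes a megapixel means 1,000,000 pixels and that every view gets an equal share; the 4/8 MP, 45-view, and 60 FPS figures come from the paragraph above.

```python
# Figures from the text: 4 and 8 megapixel versions, 45 views, 60 FPS.
# Assumptions: 1 megapixel = 1,000,000 pixels; each view gets an equal share.
VIEWS = 45
FPS = 60

def pixels_per_view(total_megapixels: float, views: int = VIEWS) -> float:
    return total_megapixels * 1_000_000 / views

for mp in (4, 8):
    print(f"{mp} MP: ~{pixels_per_view(mp):,.0f} pixels per view")
print(f"Views rendered per second: {VIEWS * FPS:,}")
```

So each view of the 4 MP version gets roughly 89,000 pixels (about a 300×300 image’s worth), and the renderer must produce 2,700 views every second.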

I mostly went to courses and talked with people. Here are a few tidbits of general interest:

Finally, this, a clever photo booth at NVIDIA. The “glass” sphere looks rasterized, since it shows little refraction. Though, making it solid, or even filling it with water, would have made it massively heavy and unworkable. Sadly, gases don’t have much of a refractive index, though maybe that’s just as well – a chloroform-filled sphere might not be the safest thing. Anyway, it’s best as-is: people want to see their undistorted faces through the sphere. If you want realism, fix it in post.
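A rough check on "little refraction": a thin glass shell bends rays in and then almost straight back out, so it acts like hardly any lens at all, while a solid or liquid-filled sphere is a strong lens. The Python sketch below evaluates the standard paraxial ball-lens focal length, f = nR / (2(n - 1)), measured from the sphere’s center. Assumptions: the indices are typical textbook values, the surrounding medium has index 1, and the glass shell itself is ignored.

```python
def ball_lens_focal_length(n: float, radius: float = 1.0) -> float:
    """Paraxial effective focal length of a solid sphere of index n in a
    medium of index 1, measured from the sphere's center: f = n*R / (2*(n - 1))."""
    if n <= 1.0:
        raise ValueError("needs n > 1 to focus")
    return n * radius / (2.0 * (n - 1.0))

# Approximate refractive indices (typical values, assumed for illustration).
media = {"gas": 1.000293, "water": 1.333, "chloroform": 1.446, "glass": 1.5}
for name, n in media.items():
    print(f"{name:10s} n = {n:<9} f = {ball_lens_focal_length(n):8.1f} R")
```

Water, chloroform, and glass all focus within about two radii of the center, so faces seen through such a sphere would be strongly distorted (distant scenes appear inverted, as in crystal-ball photography). For a gas the focal length is over a thousand radii, meaning essentially no bending.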

OK, everything happened today, so I’m starting to believe the concept of time is no longer meaningful.

First, NVIDIA announced the consumer versions of its RTX ray tracing GPUs, which should come as a shock to no one after last week’s Ray Tracing Monday at SIGGRAPH. My favorite “show off the ray tracing” demo was for Battlefield V.

Then, this:

https://twitter.com/Peter_shirley/status/1029342221139509249

I love free. To get up to speed on ray tracing, go get the books here (just in case you can’t click on Pete’s link above), or here (our site, which shows related links, reviews, etc.). Then go to the SIGGRAPH DXR ray tracing course site – there’s even an implementation of the example that’s the cover of Pete’s first book.

Up to speed already? Start writing an article for Ray Tracing Gems. At SIGGRAPH we found that a few people thought they had missed the proposals deadline. There is no proposals deadline. The first real deadline is October 15th, for completed articles. We will judge submissions, and may reject some, but our goal is to work with any interested authors before then, to make sure they’re writing something we’ll accept. So, feel free to write to us informally and bounce ideas off us. We skipped the “proposals” step to give people more time to write and submit their ideas, large and small.

BTW, as far as free goes, we’re aiming to make the e-book version of Ray Tracing Gems free, and to have the authors retain reprint rights for their works.

All for now. Day’s not over yet.