The Graphics Codex is a little $3.99 Apple app developed by Morgan McGuire, a noted researcher and practitioner in graphics, especially interactive graphics. He’s written numerous research papers and a number of books on videogame development, consults for NVIDIA, teaches at Williams College, and has worked on games such as Titan Quest (recently named #65 in PC Gamer’s top 100 games of all time). From talking with him, I gather that the Graphics Codex is basically his reference notebook. It holds the compact nuggets of knowledge he wants to have instantly available at his fingertips (literally, since it’s an iPad/iPhone app; it also runs on iPods running iOS 5.x).

The Graphics Codex has been available for a few weeks, but this new version, 1.2, has faster scrolling and display, among other features. Morgan felt this was an important improvement, so I’ve been holding off blogging about the app. Upgrades are free and simple, as with most apps. Morgan says he’s working on version 1.3, which will focus on iOS 5.1 support, color theory, and diagrams useful for explaining computer graphics topics.

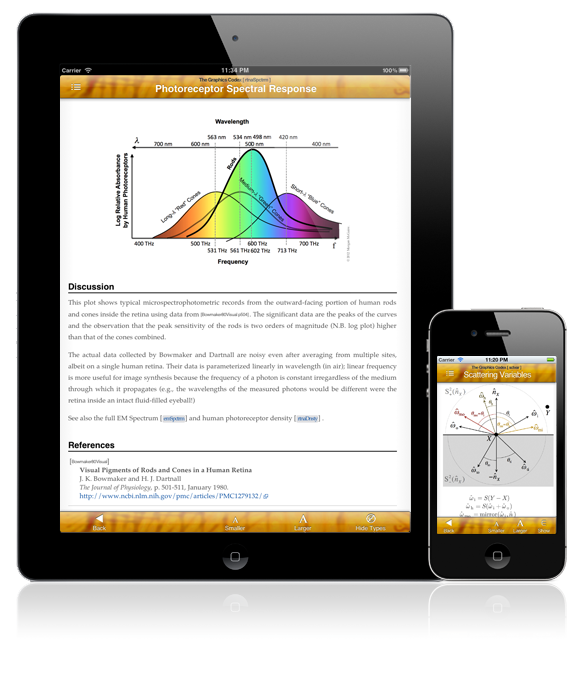

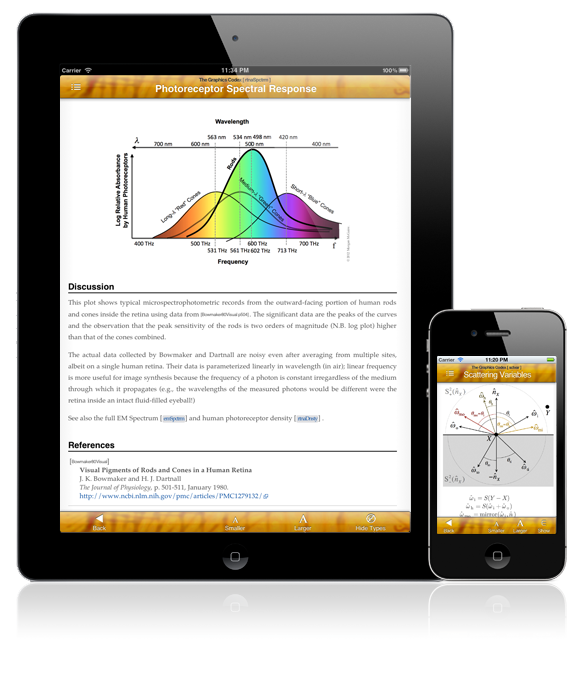

So, what is it? Well, let’s start with pictures:

We all have our own favorite pieces of information we like to see included. This codex fits me pretty well: I see the reflection and refraction formulas, and various matrix types described (perspective, rotation, scale, translation, skew, determinants, etc.). I see handy things like the formatting for printf, and the LaTeX and HTML codes for Greek letters and for math symbols. I see pseudocode for object/object intersection and distance formulas, as well as various common sorting algorithms. I see raster and 3D file formats (nicely linking to original documents on the web, when available). That’s just for starters.
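To give a feel for the sort of "nugget" I mean, here is a minimal sketch of the standard reflection and refraction formulas. This is my own illustration in Python, not code or text taken from the Codex; the function names, the tuple-based vectors, and the convention that the incoming direction `d` points toward the surface are all my assumptions.

```python
import math

def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))

def reflect(d, n):
    """Mirror unit direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def refract(d, n, eta):
    """Refract unit direction d through a surface with unit normal n.

    eta is the ratio n1 / n2 of refractive indices (incident side over
    transmitted side). Returns None on total internal reflection.
    """
    cos_i = -dot(d, n)                       # cosine of the incident angle
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)  # Snell's law, squared
    if sin2_t > 1.0:
        return None                          # total internal reflection
    k = eta * cos_i - math.sqrt(1.0 - sin2_t)
    return tuple(eta * di + k * ni for di, ni in zip(d, n))
```

For example, `reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0))` flips the y component, and a ray hitting the surface head-on passes straight through `refract` unchanged in direction. This is exactly the kind of thing I half-remember and want to look up rather than rederive.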

There are a lot of topics included, and you can see the whole list before purchasing. In the app, topics are listed alphabetically, index-style, but that’s fine, as the normal way to access this work is to search the index. Some topics I don’t know a thing about, which is great – knowing what you don’t know is important. Seeing some of these concepts inspires me to learn about them (elsewhere – like I say, this app is a reference, not a textbook). Some topics I may rarely or never look at, such as the examples shown in the images above, but I like knowing they’re there. Given that the author is a professor and consultant, I understand why they exist: these are teaching aids, information you can easily pull up and show a student, client, or other developer to help explain a concept or algorithm.

That said, there are some minor gaps. Things that came to mind for me to test but that I didn’t find: compositing (“over”, etc.), sampling and filtering (e.g. sinc and Gaussian curves), dithering (but when did I last use that?), and regular expressions (which admittedly sometimes have variations between computer languages). There’s other stuff I wouldn’t mind having: all HTML letter codes, a decimal/hexadecimal table, etc., but these are trivial to find & bookmark from sites on the web. Some domain-specific things like the various architectural projections (e.g., the various axonometric projections) would be nice, but that’s very specific to me and Wikipedia mostly fills the gap. Someday I imagine there could be a framework for such codex apps to allow you to add your own index entries, similar to how you can make your own reference work on Wikipedia (update: Morgan notes that this feature exists for his app, it’s called “email him and ask for a topic to be added”). The difference is that this app gives you the core, relevant ideas and algorithms of computer graphics in a usable form, with a consistent style, and cross-referenced to only directly relevant articles. A single author and editor, focused on a single area, adds considerable value.

This is not the first time someone has collected such reference entries. The most direct “competitor” that comes to mind is Phil Dutre’s great Global Illumination Compendium. This is a free PDF, go get it. Its focus is indeed global illumination, and it’s quite an extensive reference in this area. I would say there’s about a 25% overlap with the Graphics Codex. Another resource that comes to mind is Steve Hollasch’s collection of USENET articles, free on the web. This collection is a bit ancient, but math and physical formulas don’t change quickly. It’s a pretty shotgun-scattered set of articles, more like Graphics Gemlets, but an interesting place to wander through and try for information.

Back to the Graphics Codex. Each index entry is nicely formatted and readable, and every page (except the Bibliography, which I have reported as a bug) can be made larger or smaller. This larger/smaller functionality works well, reformatting the entry to be fully visible side-to-side, vs. typical PDF zoom, where the page can become wider than the display.
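The difference between reflowing and plain zooming is easy to see in a toy model. The sketch below is only my illustration of the idea, not how the app is implemented: it assumes a fixed-width font whose characters are half as wide as the font size (`char_aspect`), and the function names are made up.

```python
import textwrap

def reflow(text, display_width_px, font_px, char_aspect=0.5):
    """Re-wrap text so every line fits the display at the given font size.

    Assumes a fixed-width font where each character is
    font_px * char_aspect pixels wide (an assumption for this sketch).
    """
    chars_per_line = max(1, int(display_width_px // (font_px * char_aspect)))
    return textwrap.wrap(text, width=chars_per_line)

def line_width_px(line, font_px, char_aspect=0.5):
    """Rendered width of one line under the same fixed-width assumption."""
    return len(line) * font_px * char_aspect

text = ("The reflection direction mirrors the incoming direction "
        "about the surface normal.")

small = reflow(text, display_width_px=320, font_px=16)
large = reflow(text, display_width_px=320, font_px=32)

# Reflow: the larger font yields more, shorter lines that still fit.
widest_reflowed = max(line_width_px(line, 32) for line in large)

# PDF-style zoom: the 16 px layout is simply scaled 2x, so lines overflow.
widest_zoomed = max(line_width_px(line, 16) for line in small) * 2
```

With these numbers the reflowed text never exceeds the 320-pixel display, while the zoomed layout ends up wider than the screen and forces side-to-side scrolling.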

All the entries are meant as reference material: hard information that you basically understand but for which you want the precise formula or code. This is information you could eventually find on your bookshelf or on the web, but here it is quickly available by simply searching the index. You can’t actually search the entries themselves, and the bibliography doesn’t have back links, i.e., “which Codex entries reference this article?” These are minor niggles: entries use cross-references to other entries, and most entries have a reference to related books or papers, sometimes with a link directly to the reference, if online. Reference back-links are more useful in a textbook; for this reference, they’d probably mostly be clutter.

Summary

Negatives:

- Can’t copy and paste, unlike a computer-viewable version. (There might be an app for that…?)

- Doesn’t have everything I personally might need.

- Entries themselves are not searchable.

Positives:

- Searchable index makes finding things a snap.

- Nicely formatted, color illustrations and pseudocode snippets.

- Cross-referencing and original source references, with links.

- Weighs much less than the related pile of books.

- Has many to most things I like to have handy.

All in all, worth $3.99 to me.

The above are my own impressions, before reading the email Morgan sent me about the app. Here’s what Morgan said:

What I’m doing with the app is converting all of my course notes and the professional notes that I take with me consulting into easily searchable topics. This way I always have the reference material with me, without having to carry all of my graphics books between my home, office, lab, and remote sites. I usually cite not only the paper and book that material comes from, but the exact page, so that I can quickly find more information when I am in the same place as my books. DirectX, OpenGL, Unity, Mitsuba, G3D, and JOGL entry points link to their official documentation on the web. Unlike a PDF or Apple iBook, the Graphics Codex does all typesetting live so it reflows for the orientation and size of your mobile device, and zooming in recomputes the text rather than scrolling it off the side of the page.

I’m prioritizing topics that people e-mail me about and vote for on the website…and anything that I look up in my regular work immediately goes into the next version. Version 1.2 not only adds a bunch of new topics from convolution to quaternions but an all-new UI and the ability to show the types and units of subexpressions.