The inestimable Ke-Sen Huang has again done us all a service: the HPG 2010 paper list is up. Just a few authors’ papers are visible at this point, but undoubtedly more will appear as the weeks roll by.

7 Things for May 2

7 things, with images for each as some quick eye candy – is it worth my adding these images?

- Here’s a nice rundown of much of the graphical goodness (and badness, e.g. temporal antialiasing) of the Halo: Reach beta. It’s worth a skim just to get a sense of the state of the art in a wide range of areas. The motion blur video appears to not be available currently. (thanks, Mauricio)

- Unlimited Detail Technology is a voxel-based renderer with an interesting history: it was developed by a self-taught hobbyist who once ran a supermarket chain. There’s been interest in voxels for a while, e.g. Jon Olick’s SIGGRAPH presentation in 2008 (slides here). Voxel rendering reminds me of the CPU-side heightfield renderer used in Novalogic’s Comanche and Delta Force game series from 1992 on. Novalogic’s was a 2.5D system using contour following, while the Unlimited Detail system is full 3D voxels. Looking at UD’s presentations, it seems like a form of 3D clipmapping, where the level of detail of the voxels needed is determined by distance. The look reminds me of dribble sand castles. The coolest part: no GPU needed, it’s all CPU. I can imagine 18 limitations to this system: animation/deformation, sharp edges not possible, shading models have limitations, transparency doesn’t work, textures are difficult to apply, fuzzy objects can’t be rendered, etc. Still, fun to see and a fascinating option. (another thanks, Mauricio)

- The Ruin Island demo was created by some students in France. Parallax occlusion mapping, depth of field, NPR toon rendering, motion blur, glow and bloom, and more – it’s a grab-bag of effects in OpenGL. What’s nice is that the source code is provided. (Geeks3D)

- Norbert Nopper has a small set of standalone OpenGL 3.2 and GLSL 1.5 tutorial programs with code for various effects. (Morgan McGuire)

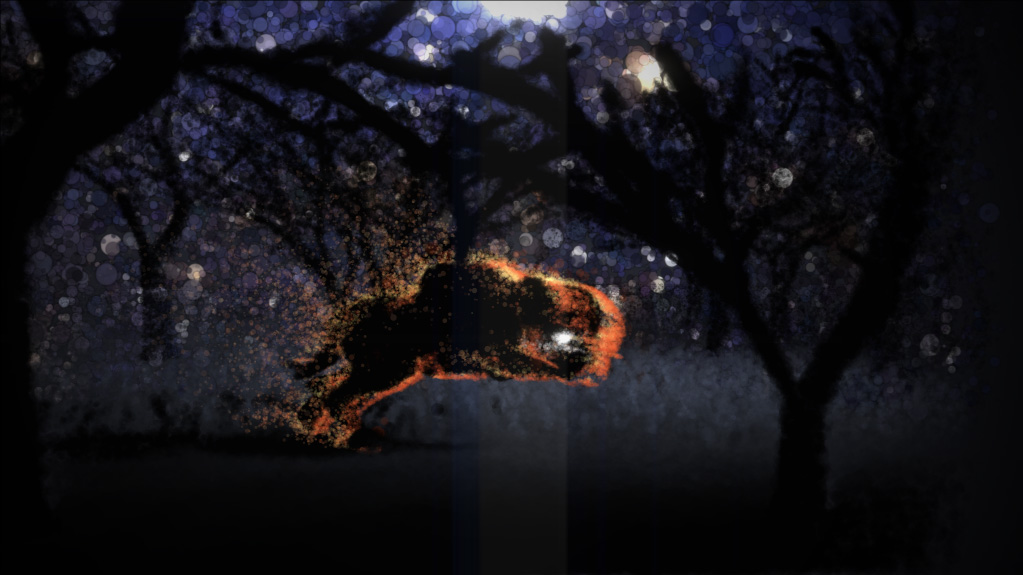

- The demoscene demo agenda circling forth uses particle clouds for a beautiful look. Note that the links for the video and demo are just under the image at the top of the page.

- The photorealistic Octane Renderer uses CUDA for acceleration. To try it out you’ll need a fairly up-to-date NVIDIA driver, the demosuite, and the executable. It’s actually pretty cool to see the frameless rendering in action; it’s quite interactive for their simple scenes. It uses golden thread rendering: the longer you sit, the better the image gets. (Geeks3D)

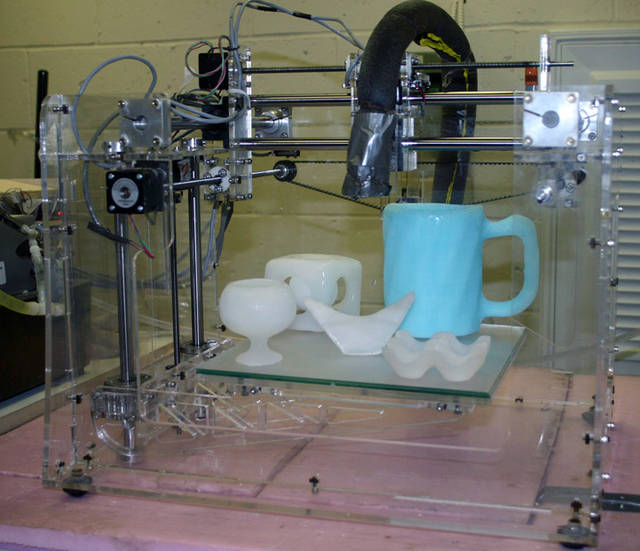

- 3D printing with ice. (BoingBoing)

Halo: Reach motion blur:

Unlimited Detail voxel image:

Ruin Island demo:

OpenGL 3.2 Nopper demo image:

agenda circling forth:

Octane Rendering, after 2 merged frames (interactive update) and after 5685 frames (a few minutes):

3D ice printing:

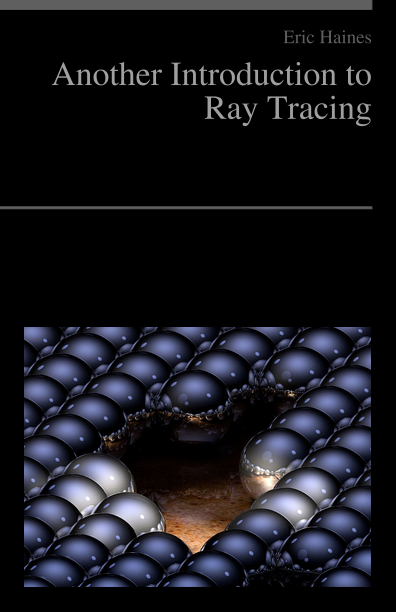

Another Introduction to Ray Tracing

I was waiting around a bit for my younger son’s doctor’s appointment this morning, so I decided to edit a book. I finished it just now, it’s called Another Introduction to Ray Tracing. It’s 471 pages in book form. You can download it for free, or order a paperback copy from PediaPress for $22.84 plus shipping. I won’t earn a dime from it, but since it took me less than two hours to make, no problem.

So what’s happening here? Due to investigating Alphascript and Betascript publishing a month ago, reporting it on Slashdot, and following up on a lot of great comments, I learnt a number of interesting tidbits. Here’s a rundown.

First, VDM Publishing itself is sort of a vanity press, but with no cost to the author. It seeks out authors of PhD theses and similar, asking for permission to publish. This is not all that unreasonable: because the works are only published on demand, the authors do not have to pay anything, they even get a few hardcopies for free. Here’s an example from our field that I reported on in February. That said, it’s mostly a win for VDM Publishing, who charge steep prices for the resulting works. Such not-quite-books mix in with other books on Amazon. It takes a bit of searching to realize that the work is a thesis and likely could be downloaded for free. A bit misleading, perhaps, but not all that horrifying. Caveat Emptor.

VDM Publishing also has an imprint called LAP, Lambert Academic Publishing, which does the same thing, publishing theses such as this one by Nasim Sedaghat. With a little Googling you can find Nasim, and then find the related paper for free.

VDM’s imprints Alphascript and Betascript Publishing I’ve already described, they’re little more than random repackagers of Wikipedia articles. Here’s an example book. I posted one-star reviews for a few of these books on Amazon; what’s funny is that the owner of the firm actually responded to my criticism (with a one-size-fits-all response in slightly broken English).

Four weeks ago Alphascript had 38,909 and Betascript 18,289 books listed on Amazon. To my surprise they now have 39,817 and 18,295 books, a total increase of only (only!) 914 new books – looks like they’re slowing down. They’ll have to work hard to catch up with Philip M. Parker’s 107,182 books or his publishing firm ICON Group International, with 473,668 books. The New York Times has an interesting article about this guy.

Betascript Publishing has two books found on Amazon related to ray tracing: Ray Tracing (Graphics) and Rasterization (which includes a section on ray tracing). The ray tracing book is 88 pages long and $46, more than 50 cents a page. My book, at $22.84 for 471 pages, is less than a nickel a page. So my new book’s better per pound. I actually worked a little compiling my book, making logical groupings, picking relevant articles, creating chapter headings, the whole nine yards (never did figure out how to make a cover from an existing Wikipedia image, though). The exercise showed me the limits of Wikipedia as a book-making resource: the individual articles are fine for what they are, some are wonderful, and editing them in a somewhat logical flow has some merit. However, there’s no coherence to the final product and there are large gaps between one article and the next. How to generate rays for a given camera? Sorry, not in my book.

Still, it was great to learn of PediaPress and the ability to make my own Wikipedia book for free. Poking around their site, I even found a book on 3D computer graphics, called 3D Computer Graphics (catchy, neh?). Seeing others making books, I decided to share my own, so now it’s official. Mind you, I haven’t actually read through my book, nor even really checked the flow of articles – no time for that. I mostly grouped by subject and title after identifying likely pages. That said, I do like having a PDF file of all these articles that I can search through.

Obviously authors are not about to be replaced by Betascript books any time soon. If you want to read a real introduction to the topic, a book like Ray Tracing from the Ground Up might serve you better, even if it is a whole dime a page. This cost/benefit ratio for a good book is something I’ll never get over, that books are sold at prices that are equivalent to the cost for just an hour or two for a computer programmer’s time and yet yield so much in the right hands.

SIGGRAPH 2010 papers up

See what was accepted at Ke-Sen’s site, which undoubtedly will grow in the number of paper links over the ensuing weeks as authors put their preprints up for download. So far, this is the one that’s #1 in the “just plain fun” category.

Thought for the Day

From here, no idea who made it; I’d like to shake his hand. Found here, but the poster found it via StumbleUpon.

Having seen way too much bloom on the Atacama Desert map for the Russian pilots in Battlefield: Bad Company 2 (my current addiction, e.g. this and this), I can relate.

Edit: please do read the comments for why both pairs of images are wrong. I’m so used to HDR == tone mapping that I pretty much forgot the top pair is also technically incorrect (HDR is the range of the data, tone mapping can take such data and map it nicely to a displayable 8-bit image without banding) – thank you, Jaakko.

Best Book Title Ever, Period

I’ll get back to actual informational posts realsoonnow when I have some time, but I had to put this up immediately.

Amazon sent this one on to me, a book recommendation entitled Polygon Mesh: Unstructured Grid, 3D Computer Graphics, Solid Modeling, Convex Polygon, Rendering, Vertices, Computational Geometry. I am a bit sad there’s no cover image nor “Look Inside!” feature; it’s these little touches that no doubt would have convinced me to lay out $47 for such a fine-sounding volume, even though it’s only 88 pages long. The book Rasterisation: Vector Graphics, Raster Graphics, Pixel, Rendering, 3D Computer Graphics, Persistence of Vision, Ray Tracing by the same editor has a nice cover (though no “Look Inside!”), but at $62 is just a dollar too much for me.

The first of three editors for both books, Lambert M. Surhone, has 18,247 books that he’s worked on personally. Miriam T. Timpledon and Susan F. Marseken are also pretty productive, with 17,697 titles each. If they would only take a chance to break out and edit their own books, they could overtake Lambert in no time, I’m sure.

Welcome to Betascript Publishing. The idea is to grab (possibly) related Wikipedia pages, print them out, and put them in a book. More about this here. I don’t know who would buy such books, but I guess you need just 100 customers to net you perhaps $5000 or more. Peculiar. I expect with 18,247 titles, there are likely to be a hundred that sound like real books. The part that is sad to me is to see such books listed on foreign bookseller pages. I guess the good news is that the system works only once per customer, though I would guess the next step is to make 18,247 imprint names with 18,247 different editor names.

I thought the editors’ names were perhaps anagrams. Using the Internet Anagram Server, the first combination for each name is:

Lambert M. Surhone gives Blather Summoner

Miriam T. Timpledon gives Immolated Imprint

Susan F. Marseken gives Frankness Amuse

Probably just a coincidence. Anyway, I am frankly unamused by the idea of books automatically being produced, then automatically being recommended by Amazon, given that some people will undoubtedly pay for something they could get for nothing.
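The three anagram pairs above are easy to double-check by comparing letter counts. Here is a minimal sketch; the helper names `letters` and `is_anagram` are made up for illustration, and this only verifies the pairs, it does not search for anagrams the way the Internet Anagram Server does.

```python
from collections import Counter


def letters(name: str) -> Counter:
    """Count letters only, ignoring case, spaces, and punctuation."""
    return Counter(ch for ch in name.lower() if ch.isalpha())


def is_anagram(a: str, b: str) -> bool:
    """True when both strings use exactly the same letters."""
    return letters(a) == letters(b)


# The editor names and anagrams quoted in the post.
PAIRS = [
    ("Lambert M. Surhone", "Blather Summoner"),
    ("Miriam T. Timpledon", "Immolated Imprint"),
    ("Susan F. Marseken", "Frankness Amuse"),
]

for name, phrase in PAIRS:
    print(f"{name!r} <-> {phrase!r}: {is_anagram(name, phrase)}")
```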

Now I just need an AI that will automatically buy these with robo-dollars and the cycle will be complete. Really, better yet would be to write a script that would automatically post a review for each one and note the content is free on Wikipedia. That would be the best automation of all.

Update: I wrote Amazon to complain. They reply (among other boilerplate sentences), “As a retailer, our goal is to provide customers with the broadest selection possible so they can find, discover, and buy any item they might be seeking.” They forgot the words “and pay us.” No one in their right mind seeks to pay for information they could get for free. It turns out Betascript is just one of three imprints under VDM Publishing – reading the Wikipedia article on VDM Publishing is fascinating, especially the discussion section. Amazon currently lists 38,909 Alphascript and 18,289 Betascript books, plus 321 books in German by Fastbook Publishing.

If you’re as disgusted by Amazon’s behavior as I am, I suggest two strategies: write and complain (and get a boilerplate response, but enough complaints might add up) by going here and clicking on Contact Us in the right column, and post 1-star reviews for any of these you run across, e.g., mine here – something to do while waiting for your code to compile.

Update: as of January 24th, 2011, Alphascript is up to 112,420 titles and Betascript has 230,460 titles. What a crock – shame on you, Amazon. Sadly, Miriam and Susan never caught up to Lambert: he has 230,535 titles to his name, while they each have only 69,395.

One more update: see my followup article here.

CFP for “Game Development Tools” book

Marwan Ansari has put out a call for participation (i.e., articles) for an upcoming book, “Game Development Tools”. The CFP is short, so I’ve included it below. Please pass on the URL http://gamedevelopmenttools.com/ for the book’s website to anyone you think would be interested.

To me, the things I most appreciate from Marwan are his two articles in ShaderX 2: Tips & Tricks (free for download). These are still relevant today: “Advanced Image Processing with DirectX® 9 Pixel Shaders” (written with Jason Mitchell, now at Valve, and Evan Hart) and “Image Effects with DirectX® 9 Pixel Shaders”. He’s worked for a number of games companies since his ATI days.

The CFP:

We invite you to submit a proposal for an innovative article to be included in a forthcoming book, Game Development Tools, which will be edited by Marwan Y. Ansari and published by A. K. Peters. We expect to publish the volume in time for GDC 2011.

We are open to any tools articles that you feel would make a valuable contribution to this book.

Some topics that would be of interest include:

- Content Pipeline tools (creation, streamlining, management)

- Graphics/Rendering tools

- Profiling tools

- Collada import/export/inspection

- Sound tools

- In-Game debugging tools

- Memory management & analysis

- Console tools (single and cross platform)

This list is not meant to be exclusive and other topics are welcome.

The schedule for the book is as follows:

June 30th – All proposals in.

July 15th – Final list of accepted authors are informed and begin articles.

August 15th – First draft in to editor

September 15th – Drafts sent to other book authors for peer review

October 15th – Final articles in to editor

November 30th – Final articles to publisher (A K Peters)

GDC 2011 – Book is released.

Please send proposals to marwan at gamedevelopmenttools dot com.

Just Cause 2 makes 3

A demo of the game Just Cause 2 is available on Steam today. What’s interesting is that this is the third DirectX 10-only game to be released. There have been any number of DirectX 10 enhanced games, but until a few months ago there was just one DirectX 10-only game release, Stormrise, a mediocre game released in March 2009. Shattered Horizon then came out in November from Futuremark, who are known more for their graphics benchmarks. Just Cause 2 is a sequel, and distributed by a well-known publisher. Humus describes the logic in going DirectX 10-only.

I’m looking forward to seeing how DirectX 11’s DirectCompute gets used in commercial applications. Perhaps the day there’s a DirectX 11-only game of any significance is the day we need to start writing a fourth edition. Let’s see: DirectX 10 was released November 2006 with Vista, so it took about three and a quarter years for an anticipated game to be released that was DirectX 10-only (and even now it’s considered dangerous by many to do so). DirectX 11 was released in October 2009, so if the same rule holds, then we’ll need to start writing in February 2013. Pre-order today!
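The back-of-the-envelope projection above can be reproduced with a few lines of month arithmetic. This is only a sketch: the two DirectX release months come from the post, while March 2010 for the first anticipated DirectX 10-only game is an assumption (the post gives no date), and `months_between` and `add_months` are hypothetical helpers.

```python
def months_between(start, end):
    """Whole months from a (year, month) pair to a later (year, month) pair."""
    return (end[0] - start[0]) * 12 + (end[1] - start[1])


def add_months(start, months):
    """Shift a (year, month) pair forward by a number of months."""
    index = start[0] * 12 + (start[1] - 1) + months
    return index // 12, index % 12 + 1


DX10_RELEASE = (2006, 11)        # from the post
DX11_RELEASE = (2009, 10)        # from the post
FIRST_BIG_DX10_ONLY = (2010, 3)  # assumption: not stated in the post

lag = months_between(DX10_RELEASE, FIRST_BIG_DX10_ONLY)
projected = add_months(DX11_RELEASE, lag)
print(f"lag: {lag} months, projected DX11-only date: {projected}")
```

Under that assumption the lag is 40 months, a bit over three and a quarter years, and the same lag applied to DirectX 11 lands on February 2013.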

Even now, 13% of Steam gamers have only SM 2.0. Games like World of Warcraft and Left 4 Dead 2 don’t require more, for example. So what’s the magic percentage where the AAA games decide to set the minimum level to the next shader model? I don’t recall it being much of a deal between shader model 2.0 and 3.0 games; there was a little hype, but I think this was because going from SM 2.0 to 3.0 involved just a card upgrade, vs. an OS upgrade. Which is funny, in that an OS upgrade is usually cheaper than a new GPU, but I think it’s also because it’s more critical, like a heart transplant vs. a cornea transplant.

Poking around, I found the interesting graphs below. I’m sure games have been left off, and some are miscategorized, e.g. Cryostasis is the only one under SM 4.0, and it doesn’t require DirectX 10. But, let’s assume this data is semi-reasonable; I’m guessing the games are categorized more by a “recommended configuration” than a minimum. So Shader Model 1.x game releases (and remember, 1.x was pretty darn limited) peaked in 2006, 2.0 peaked in 2007 but outnumbered 3.0 until 2009. SM 3.0 hasn’t peaked yet, I’d say (ignore 2010 and 2011 graph values at this point, of course). Remember that SM 2.0 hardware came out around 2002, so it peaked 5-6 years later and still was strong 7 years later (and perhaps longer, we’ll see). SM 3.0 came out in 2004, and seems likely to continue to be strong through 2010 and into 2011. 4.0 came out in 2006, so I’d go with it peaking in 2011-2012 from just staring at these charts. Which entirely ignores the swirl of other data – Vista and Windows 7, Xbox trends, GPU trends, blah-di-blah – but it’ll be interesting to see if this prediction is about right. (Click on a graph for the lists of games for that shader model.)

I3D 2010 Report

From Mauricio Vives, our first guest blogger; I thank him for this valuable detailed report.

Written February 26, 2010.

This past weekend I attended the 2010 Symposium on Interactive 3D Graphics and Games, known more simply as “I3D.” It is sponsored by ACM SIGGRAPH, and was held this year in Bethesda, Maryland, just outside Washington. Disclaimer: I work for Autodesk, so much of this report comes from the perspective of a design software developer, but any opinions expressed are my own.

Overview

I3D is a small conference of about 100 people that covers computer graphics and interaction research, principally as it applies to games. I also attended the conference in 2008 near San Francisco, when it was co-chaired by my colleague at Autodesk, Eric Haines.

About half of the attendees are students or professors from universities all over the world, and the rest are from industry, typically game developers. As far as I could tell, I was the only attendee from the design software industry. NVIDIA was well represented both in attendees and presentations, and the other company with significant representation was Firaxis, a local game developer best known for the Civilization series.

The program has a single track, with all presentations given in the same room. Unlike SIGGRAPH, this means that you can literally see everything the conference has to offer, though it is necessarily more focused. As you will see below, I was impressed with the quality and quantity of material presented.

Since this conference is mostly about games, all of the presented research has a focus on a real-time implementation, often for games running at 60 frames per second. Games have a very low tolerance for low frame rates, but they often have static environments and constrained movement which allows for precomputation and hence high performance and convincing results. Conversely, customers of design software like Autodesk’s products produce arbitrary and changing data, and want the most accurate possible results, so precomputation and approximations are less useful, though a frame rate as low as 5 or 10 fps is often tolerable.

However, an emerging trend in graphics research for games is to remove limitations while maintaining performance, and that was very evident at I3D. The papers and posters generally made a point to remove limitations, in particular so that geometry, lighting, and viewpoints can be fully dynamic, without lengthy precomputation. This is great news for leveraging these techniques beyond games.

In terms of technology, this is almost all about doing work on GPUs, preferably with parallel algorithms. NVIDIA’s CUDA was very well-represented for “GPGPU” techniques that could not use the normal graphics pipeline. With the wide availability of CUDA, a theme in problem-solving is to express as much as possible with uniform grids and throw a lot of threads at it! As far as I could tell, Larrabee was entirely absent from the conference. Direct3D 11 was mentioned only in passing; almost all of the papers used D3D9, D3D10, or OpenGL for rendering.

And a random statistic: a bit more than half of the conference budget was spent on food!

Links

The conference web site, which includes a list of papers and posters, is here.

The Real-Time Rendering blog has a recent post by Naty Hoffman that discusses many of the papers and has links to the relevant author web sites.

Photos from the conference are available at Flickr here. I also took photos at I3D 2008, held at the Redwood City campus of Electronic Arts, which you can find here.

Papers

The bulk of the conference program consisted of paper presentations, divided into a few sessions with particular themes. I have some comments on each paper below, with more on the ones of greater personal interest.

Physics Simulation

Fast Continuous Collision Detection using Deforming Non-Penetration Filters

There is discrete collision detection, where CD is evaluated at various time intervals, and continuous CD, where an exact, analytic result is computed. This paper is about quickly computing continuous CD using some simple expressions that vastly reduce the number of tests between primitives.
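To make the discrete/continuous distinction concrete, here is a toy 1D sketch: a point moving at constant velocity toward a thin wall. It is not the paper's non-penetration filter, just an illustration of why sampling at time intervals can miss a contact that an analytic solve catches. All function names and numbers are made up for the example.

```python
def discrete_hit(x0, v, dt, wall_min, wall_max):
    """Discrete CD: test only the position at the end of the time step."""
    x1 = x0 + v * dt
    return wall_min <= x1 <= wall_max


def continuous_hit(x0, v, dt, wall_min, wall_max):
    """Continuous CD: solve x0 + v*t = face for the first contact time.

    Returns the time of impact in [0, dt], or None if there is no contact.
    """
    if wall_min <= x0 <= wall_max:
        return 0.0  # already touching
    if v == 0:
        return None  # not moving, never reaches the wall
    # The face the point would reach first, given its direction of travel.
    face = wall_min if v > 0 else wall_max
    t = (face - x0) / v
    return t if 0.0 <= t <= dt else None


# A fast point "tunnels" through a thin wall within a single step.
x0, v, dt = 0.0, 100.0, 0.1
wall = (4.0, 4.5)
print(discrete_hit(x0, v, dt, *wall))    # end position is 10.0, past the wall
print(continuous_hit(x0, v, dt, *wall))  # analytic time of first contact
```

The discrete test reports no collision because the point ends the step well past the wall, while the continuous test finds the contact partway through the step.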

Interactive Fluid-Particle Simulation using Translating Eulerian Grids

This was authored by NVIDIA researchers. The goal is a fluid simulation that looks better as processors get more and faster cores, i.e., scalable physics. This is actually a combination of techniques implemented primarily with CUDA, and rendered with a particle system. It allows for very dense and detailed results, and uses a simple trick to have the results continue outside the simulation “box.”

Character Animation

Here there was definitely a theme of making it easier for artists to prepare and animate characters.

Learning Skeletons for Shape and Pose

This is about creating skeletons (bones and weights) automatically from a few starting poses and shapes. The author noted that this was likely the only paper developed almost entirely with MATLAB (!).

Frankenrigs: Building Character Rigs From Multiple Sources

This paper has a similar goal: use existing artist-created character rigs to automatically create rigs for new characters, with some artist control to adjust the results. This relies on a database of rigged parts that an art team probably already has, thus it is a data-driven solution for the time-consuming tasks in character rigging.

Synthesis and Editing of Personalized Stylistic Human Motion

This is about taking a walk animation for a single character, and using that to generate new walk animations for the same character, or transfer them to new characters.

Fast Rendering Representations

Real-Time Multi-Agent Path Planning on Arbitrary Surfaces

Path finding in games is a huge problem, but it is normally constrained to a planar surface. This paper implements path planning on any surface, and does it interactively on both the CPU and GPU using CUDA.

Efficient Sparse Voxel Octrees

Is it time for voxel rendering to make a comeback? These researchers at NVIDIA think so. Here they want to represent a 3D scene using voxels with as little memory as possible, and render it efficiently with ray casting. In this case, the voxels contain slabs (they call them contours) that better define the surface. Ray casting through the generated octree is done using special coordinates and simple bit manipulation. LOD is pretty easy: voxels that are too small are skipped, or the smallest level is constrained, similar to MIP biasing.

This paper certainly had some of the most impressive results from the conference. The demo has a lot of detail, even for large environments, where you think voxels wouldn’t work that well. One of the statistics about storage was that the system uses 5-8 bytes per voxel, which means an area the size of a basketball court could be covered with 1 mm resolution on a high-end NVIDIA GPU. This comprises a lot of techniques that could be useful in other domains, like point cloud rendering. Anyway, I recommend looking at the demo video and if you want to know more, see the web site, which has code and the compiled demo.
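The basketball court claim is easy to sanity-check. A rough sketch, assuming a 28 m by 15 m court (the paper's exact dimensions are not given here) and a single layer of voxels covering the floor area:

```python
# Assumed court size in millimetres (28 m x 15 m); not taken from the paper.
COURT_LENGTH_MM = 28_000
COURT_WIDTH_MM = 15_000

# Storage range quoted in the talk.
BYTES_PER_VOXEL_LOW = 5
BYTES_PER_VOXEL_HIGH = 8

# One voxel per square millimetre of surface.
voxels = COURT_LENGTH_MM * COURT_WIDTH_MM
low_bytes = voxels * BYTES_PER_VOXEL_LOW
high_bytes = voxels * BYTES_PER_VOXEL_HIGH

GIB = 2 ** 30
print(f"{voxels:,} voxels")
print(f"{low_bytes / GIB:.2f} to {high_bytes / GIB:.2f} GiB")
```

Under these assumptions the total comes to roughly 2 to 3 GiB, which is in the range of the memory on a high-end GPU of the time.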

On-the-Fly Decompression and Rendering of Multiresolution Terrain

This paper targets GIS and sci-vis applications that want lossless compression, instead of more-common lossy compression. The technique offers variable rate compression, with 3-12x compression in practice. The decoding is done entirely on the GPU, which means no bus bottleneck, and there are no conditionals on decoding, so it can be very parallel. Also of interest is that decoding is done right in the rendering path, in the geometry shader (not in a separate CUDA kernel), and it is thus simple to perform lighting with dynamically generated normals. This is another paper that has useful ideas, even if you aren’t necessarily dealing with terrain.

GPU Architectures & Techniques

A Programmable, Parallel Rendering Architecture for Efficient Multi-Fragment Effects

The problem here is rendering effects that require access to multiple fragments, especially order-independent transparency, which the current hardware graphics pipeline does not handle well. The solution is impressive: build an entirely new rendering pipeline using CUDA, including transforms, culling, clipping, rasterization, etc. (This is the sort of thing Larrabee has promised as well, except the system described here runs on available hardware.)

This pipeline is used to implement a multi-layer depth buffer and color buffer (A-buffer), both fixed size, where fragments are inserted in depth-sorted order. Compared to depth peeling, this method saves on rendering passes, so is much faster and has very similar results. The downside is that it is slower than the normal pipeline for opaque rendering, and sorting is not efficient for scenes with high depth complexity. Overall, it is fast: the paper quoted frame rates in the several hundreds, but really they should make their benchmark conditions complex enough to measure below 100 fps, in order to make the results relevant.
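For readers unfamiliar with the idea, here is a minimal CPU-side sketch of a fixed-size, depth-sorted fragment list for a single pixel. It is not the paper's CUDA pipeline: the class name, the grayscale colors, and the overflow policy (drop the farthest fragment) are all assumptions made for illustration.

```python
import bisect


class PixelABuffer:
    """Fixed-capacity list of (depth, color, alpha) fragments for one pixel."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.frags = []  # kept sorted by depth, nearest first

    def insert(self, depth, color, alpha):
        """Insert in depth order, so fragments may arrive in any order."""
        bisect.insort(self.frags, (depth, color, alpha))
        if len(self.frags) > self.capacity:
            self.frags.pop()  # assumed overflow policy: drop the farthest

    def resolve(self, background):
        """Composite front to back with the standard 'over' operator."""
        result = 0.0
        transmittance = 1.0
        for _depth, color, alpha in self.frags:
            result += transmittance * alpha * color
            transmittance *= 1.0 - alpha
        return result + transmittance * background


buf = PixelABuffer(capacity=4)
buf.insert(0.5, 1.0, 0.5)  # far, white, half transparent
buf.insert(0.2, 0.0, 0.5)  # near, black, half transparent
print(buf.resolve(background=0.0))
```

Because the list is always sorted, the resolve step is a single pass per pixel, which is where the savings over depth peeling's repeated geometry passes come from.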

Parallel Banding Algorithm to Compute Exact Distance Transform with the GPU

The distance transform, used to build distance maps like Voronoi diagrams, is useful for a number of image processing and modeling tasks. This has already been computed approximately on GPUs, and exactly on CPUs. This claims to be the first exact solution that runs entirely on GPUs. The big idea, as you might expect, is to implement all phases of the solution in a parallel way, so that it uses all available GPU threads. This uses CUDA, and the results are quite fast, even faster than the existing approximate algorithms.
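As a reference point for what "exact" means here, the following brute-force sketch computes the exact Euclidean distance from every grid cell to its nearest site. It is emphatically not the Parallel Banding Algorithm; it is the slow definition that any exact method must match. The function name and grid layout are assumptions for illustration.

```python
import math


def exact_distance_transform(grid):
    """Brute force: for each cell, the distance to the nearest truthy cell.

    `grid` is a list of rows; truthy entries are sites. Cells get math.inf
    when the grid contains no sites at all.
    """
    sites = [
        (r, c)
        for r, row in enumerate(grid)
        for c, value in enumerate(row)
        if value
    ]
    return [
        [
            min((math.hypot(r - sr, c - sc) for sr, sc in sites),
                default=math.inf)
            for c in range(len(row))
        ]
        for r, row in enumerate(grid)
    ]


grid = [
    [1, 0, 0],
    [0, 0, 0],
    [0, 0, 0],
]
for row in exact_distance_transform(grid):
    print(["%.3f" % d for d in row])
```

The cost is proportional to cells times sites, which is why both the approximate GPU methods and the exact parallel one exist.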

Spatio-Temporal Upsampling on the GPU

The results of this paper are almost like magic, at least to my eyes. Upsampling is about rendering at a smaller resolution or fewer frames, and interpolating the in-between results somehow, because the original data is not available or slow to obtain. Commonly available 120 Hz / 240 Hz TVs now do this in the temporal space. There is a lot of existing research on leveraging temporal or spatial coherence, but this work uses both at once. It takes advantage of geometry correlation within images, e.g. using normals and depths, to generate the new useful information.

I didn’t follow all of the details, but the results were surprisingly free of artifacts, at least for the scenes demonstrated. This could be useful any place where you might want progressive rendering, e.g. real-time ray tracing, because rendering at full resolution is very expensive. This technique or some of the ones it references (like this one) could offer much better results than just rendering at a lower resolution and doing simple filtering, as is often done for progressive rendering.

Scattering and Light Propagation

Cascaded Light Propagation Volumes for Real-Time Indirect Illumination

This paper almost certainly had the most “street cred” by virtue of being developed by game developer Crytek. Simply put, this is a lattice-based technique for real-time indirect lighting. The most important features are that it is fully dynamic, scalable, and costs around 5 ms per frame. A very quick overview of how it works: render a reflective shadow map for each light, initialize the grid with this information to define many secondary light sources, then propagate light through the grid in 30 directions (faces) from each cell into the adjacent 6 cells, approximate the results with spherical harmonics, and render.

To manage performance and storage, this uses cascades (several levels of detail) relative to the viewer, hence the use of the term “cascaded” in the title. The same data and technique can be used to render secondary occlusion, multiple bounces, glossy reflections, participating media using ray marching… just a crazy amount of nice rendering stuff. The use of a lattice has some of its own quality limitations, which they discuss, but nothing too bad for a game. This was a lot to take in, and I did not follow all of the details, but the results were very inspiring. Apparently this will appear in the next version of their game engine, which means consumers will soon come to expect this. Crytek apparently also discussed this at SIGGRAPH last year.

Interactive Volume Caustics in Single-Scattering Media

Caustics is basically “light focusing,” and scattering media is basically “fog / smoke / water,” so this is about rendering them together interactively, e.g. stage lights at a concert with a fog machine, or sunlight under water. It is fully dynamic, and offers surprisingly good quality under a variety of conditions. It is perhaps too slow for games, but would be fine for design software or a hardware renderer which can take a few seconds to render.

Epipolar Sampling for Shadows and Crepuscular Rays in Participating Media with Single Scattering

This paper has a really long title, but what it is trying to do is simple: render rays of light, a.k.a. “god rays.” Normally this is done with ray marching, but this is still too slow for reasonable images, and simple subsampling doesn’t represent the rays well. The authors observed that radiance along the ray “lines” doesn’t change much, except for occlusions, which leads to the very clever idea of the paper: construct the (epipolar) lines in 2D around the light source, and sparsely sample along the lines, adding more samples at depth changes. The sampling data is stored as a 2D texture, one row per line, with samples in columns. It’s fast, and looks great.
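The sampling idea can be illustrated along a single line in 1D. This is a loose sketch under stated assumptions, not the authors' implementation: `choose_samples` and `reconstruct` are hypothetical helpers, the stride and depth threshold are arbitrary, and linear interpolation stands in for whatever reconstruction the paper uses.

```python
def choose_samples(depths, stride, depth_threshold):
    """Pick sparse sample indices along one line, plus both sides of every
    depth discontinuity."""
    n = len(depths)
    picked = set(range(0, n, stride))
    picked.add(n - 1)
    for i in range(1, n):
        if abs(depths[i] - depths[i - 1]) > depth_threshold:
            picked.add(i - 1)
            picked.add(i)
    return sorted(picked)


def reconstruct(indices, values, n):
    """Linearly interpolate the expensive values between sampled indices."""
    out = [0.0] * n
    for i, v in zip(indices, values):
        out[i] = v
    for (i0, v0), (i1, v1) in zip(zip(indices, values),
                                  zip(indices[1:], values[1:])):
        for i in range(i0 + 1, i1):
            t = (i - i0) / (i1 - i0)
            out[i] = v0 * (1.0 - t) + v1 * t
    return out


# A line with one occlusion edge: depth jumps between index 7 and 8.
depths = [1.0] * 8 + [5.0] * 8
# Stand-in for the expensive ray-marched radiance at each position.
truth = [1.0 if d == 1.0 else 0.2 for d in depths]

idx = choose_samples(depths, stride=8, depth_threshold=0.5)
approx = reconstruct(idx, [truth[i] for i in idx], len(depths))
print(idx)
```

In this toy case only four of sixteen positions are evaluated, and the extra samples at the depth jump keep the edge from being smeared by interpolation.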

NPR and Surface Enhancement

Interactive Painterly Stylization of Images, Videos and 3D Animations

This is another title that directly expresses its goal. Here the “painterly” results are built by a pipeline for stroke generation, with many thousands of strokes per image, which also leverages temporal coherence for animations. It can be used on videos or 3D models, and runs entirely on the GPU. If you are working with NPR, you should definitely look at their site, the demo video, and the referenced papers.

Simple Data-Driven Modeling of Brushes

A lot of drawing programs have 2D brushes, but real 3D brushes can represent and replace a large number of 2D brushes. However, geometrically modeling the brush directly can lead to bending extremes that you (as an artist) usually want to avoid. In this paper from Microsoft Research, the modeling is data-driven, based on measuring how real brushes deform in two key directions. The brush is geometrically modeled with only a few spines having a variable number of segments as bones.

This has some offline precomputation, but most of the implementation is computed at run time. This was one of the few papers with a live demo, using a Wacom tablet, and it was made available for attendees to play with. See an example from an attendee at the Flickr gallery here.

Radiance Scaling for Versatile Surface Enhancement

This is about rendering geometry in such a way that the surface contours are not obscured by shading. This is the problem that techniques like the “Gooch” style try to solve. However, the technique in this paper does it without changing the perceived material, sort of like an advanced sharpening filter for 3D models.

It describes a scaling function based on curvature, reflectance, and some user controls, which is then trivially multiplied with the normally rendered image. The curvature part is from a previous paper by the authors, and reflectance is based on BRDF, where you can enhance BRDF components independently. You should definitely have a quick look at the results here.

Shadows and Transparency

Volumetric Obscurance

This is yet another screen-space ambient occlusion (SSAO) technique. Instead of point sampling, it samples lines (or beams of area) to estimate the volume of sample spheres that are obscured by surrounding geometry. It claims to get smoother results than point sampling, without requiring expensive blurring, and with performance equal to (or even better than) that of point sampling. You can see some results at the author’s site here. While it has a few interesting ideas, this may or may not be much better than an existing SSAO implementation you may already have. I found the AO technique in one of the posters (see below) more compelling.

Stochastic Transparency

This was selected as the best paper of the conference. Like one of the earlier papers, this tries to deal with order-independent transparency, but it does it very differently. The author described it as “using random numbers to approximate order-independent transparency.” It has a nice overview of existing techniques (sorting, depth peeling, A-buffer). The new technique does away with any kind of sorting, is fast, and requires fixed memory, but is only approximate. It was demonstrated interactively on some very challenging scenes, e.g. thousands of transparent strands of hair and blades of grass.

The idea is to collect rough statistics about pixels, similar to variance shadow maps, using a combination of screen-door transparency, multisampling (MSAA), and random masks per fragment (with D3D 10.1). This can generate a lot of noise, so much of the presentation was devoted to mitigating that, such as using per-primitive random number seeding to look OK in motion. This is also extended to shadow maps for transparent shadows. Since this takes advantage of MSAA and is parallel, quality and performance will increase with normal trends in hardware. It was described as not quite fast enough for games (yet), but (again) it might be fast enough for other applications.
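To make the “random numbers instead of sorting” idea concrete, here is a minimal Python sketch for a single grayscale pixel. It is a naive version under stated assumptions: each fragment is a hypothetical `(depth, alpha, gray)` tuple, and coverage is decided with an independent random draw per sub-sample rather than the MSAA coverage masks and noise-reduction tricks described in the talk.

```python
import random

def exact_over(fragments, background):
    """Reference result: sort back to front and composite with 'over'."""
    color = background
    for depth, alpha, gray in sorted(fragments, key=lambda f: -f[0]):
        color = alpha * gray + (1.0 - alpha) * color
    return color

def stochastic(fragments, background, samples=8, rng=random):
    """Unsorted approximation using screen-door coverage per sub-sample."""
    total = 0.0
    for _ in range(samples):
        nearest_depth = float("inf")
        color = background
        for depth, alpha, gray in fragments:   # any submission order works
            # The fragment covers this sub-sample with probability alpha;
            # an ordinary depth test then keeps the nearest covering one.
            if rng.random() < alpha and depth < nearest_depth:
                nearest_depth = depth
                color = gray
        total += color
    return total / samples                     # resolve: average sub-samples

fragments = [(2.0, 0.5, 1.0), (1.0, 0.25, 0.2), (3.0, 0.8, 0.6)]
print(exact_over(fragments, 0.0), stochastic(fragments, 0.0, samples=8))
```

A fragment ends up visible in a sub-sample only if it is covered and every nearer fragment is not, so the expected value of the average equals the sorted result. With only a handful of sub-samples the estimate is noisy, which is why so much of the presentation was about noise.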

Fourier Opacity Mapping

The goal of this work is to add self-shadowing to smoke effects, but it needs to be simple to integrate, scalable, and execute in just a few milliseconds. The technique is based on opacity shadow mapping (2001), which stores a transmittance function per texel, but has significant visual artifacts. Here a Fourier basis is used to encode the function, and you can adjust the number of coefficients (samples) to determine the quality / performance tradeoff. Using just a few coefficients results in “ringing” of the function, but it turns out that this is OK for smoke and hair. The technique was apparently implemented successfully in last year’s Batman: Arkham Asylum.
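The encoding can be sketched in a few lines of Python for one texel. This is a minimal sketch, assuming light-space depth is normalized to [0, 1], each particle is a hypothetical `(depth, alpha)` pair with alpha below 1, and the absorption function is expanded in a standard Fourier series that is then integrated analytically; the function names are illustrative only.

```python
import math

def accumulate(particles, n_coeffs):
    """Sum each particle's contribution to the Fourier coefficients.

    The sums are order independent, so particles need no sorting.
    """
    a = [0.0] * n_coeffs
    b = [0.0] * n_coeffs
    for depth, alpha in particles:
        tau = -math.log(1.0 - alpha)           # optical depth of one particle
        for k in range(n_coeffs):
            w = 2.0 * math.pi * k
            a[k] += 2.0 * tau * math.cos(w * depth)
            b[k] += 2.0 * tau * math.sin(w * depth)
    return a, b

def transmittance(a, b, d):
    """Fraction of light reaching depth d, from the integrated series."""
    optical_depth = 0.5 * a[0] * d
    for k in range(1, len(a)):
        w = 2.0 * math.pi * k
        optical_depth += a[k] / w * math.sin(w * d)
        optical_depth += b[k] / w * (1.0 - math.cos(w * d))
    return math.exp(-optical_depth)

particles = [(0.3, 0.2), (0.5, 0.4), (0.7, 0.1)]
for n in (4, 16, 64):
    a, b = accumulate(particles, n)
    print(n, round(transmittance(a, b, 0.6), 3))
```

With few coefficients the reconstructed curve wobbles around the true step-like transmittance (the “ringing” mentioned above); adding coefficients tightens it at a higher storage and bandwidth cost.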

Normals and Textures

Assisted Texture Assignment

This paper is about making it much easier and faster for artists to assign textures to game environments (levels). It is an ambiguous problem, with limited input to make decisions. The solution relies on adjacency and shape similarities, e.g. two surfaces that are parallel are likely to have the same texture. The artist picks a surface, and related surfaces are automatically chosen. After a few textures are assigned, the system produces a list of candidate textures based on previous choices. There is some preprocessing that has to be performed, but once ready, the system seems to work great. Ultimately this is not about textures; rather, this is an advanced selection system.

LEAN Mapping

“LEAN” is a long acronym for what is essentially antialiasing of bump maps. Without proper filtering, minified bump maps provide incorrect specular highlights: the highlights change intensity and shape as the bump map gets small in screen space. The paper implements a technique for filtering bump maps using some additional data on the distribution of bumped normals that can be filtered like color textures. The math to derive this is not trivial, but the implementation is simple and inexpensive.

The results look great in motion, at glancing angles, minified, magnified, and with layered maps. It also has the distinctive property of turning grooves into anisotropy under minification, something I have never seen before.
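The core bookkeeping can be sketched in Python. This is a simplified illustration, assuming each texel stores the normal’s slopes plus their second moments, and that averaging a list of texels stands in for mipmapping; it omits the base roughness term and the shading itself, and the function names are hypothetical.

```python
def lean_texel(normal):
    """Per-texel data: slopes (bx, by) and their second moments."""
    nx, ny, nz = normal
    bx, by = nx / nz, ny / nz
    return (bx, by, bx * bx, by * by, bx * by)

def average(texels):
    """Plain linear filtering, the same operation a mipmap chain performs."""
    n = len(texels)
    return tuple(sum(t[i] for t in texels) / n for i in range(5))

def covariance(filtered):
    """Recover the slope variance that filtering would otherwise lose."""
    bx, by, mxx, myy, mxy = filtered
    return (mxx - bx * bx, myy - by * by, mxy - bx * by)

# Grooves running along y: normals tilt left and right in x only.
grooves = [lean_texel((0.6, 0.0, 0.8)), lean_texel((-0.6, 0.0, 0.8))]
var_x, var_y, cov_xy = covariance(average(grooves))
print(var_x, var_y, cov_xy)
```

After averaging, the mean slope is flat, but the variance is large in x and zero in y. A highlight shaped by that covariance is stretched in one direction only, which is how minified grooves turn into anisotropy.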

Efficient Irradiance Normal Mapping

There are a few well-known techniques in games for combining light mapping and normal mapping, but they are very rough approximations of the “ground truth” results. This paper introduces an extension based on spherical harmonics, but only over a hemisphere, that significantly improves the quality of irradiance normal mapping. Strangely, no mention was made of performance, so I would have to assume that it runs as fast as the existing techniques, just with different math.

Posters

The posters session was preceded by a brief “fast forward” presentation with each author having a minute to describe their work. There were about 20 posters total, and I have comments on a few of them.

Ambient Occlusion Volumes (link)

This is a geometric solution to the problem of rendering convincing ambient occlusion, compared to the screen-space (SSAO) techniques which are faster, but less accurate. The results are very close to ray-traced results, and while it appears to be too slow for games right now (about 30 ms to render), that will change with faster hardware.

Real Time Ray Tracing of Point-based Models (link)

The title says it all. I didn’t look into this too much, but I wanted to highlight it because it is getting cheaper to get point cloud data, and it would be great to be able to render that data with better materials and lighting.

Asynchronous Rendering

This poster has an awfully generic name, but it is really about splitting rendering work between a server and a low-spec client, like a mobile phone. In this case, the author demonstrated precomputed radiance transfer (PRT) for high-quality global illumination, where the heavy processing was done on the server, while still allowing the client (here it was an iPhone) to render the results and allow for interactive lighting adjustments. For me the idea alone was interesting: instead of just having the server or client do all the work, split it in a way that leverages the strengths of each.

Speakers

A few academic and industry speakers were invited to give 90-minute presentations.

Biomechanical and Artificial Life Simulation of Humans for Computer Animation and Games

The keynote address was given by Demetri Terzopoulos of UCLA. I was not previously familiar with his work, but apparently he has a very long resume of work in computer graphics, including one of the most cited papers ever. The talk was an overview of his research from the last 15 years on modeling human geometry, motion, and behavior. He started with the face, then the neck, and then the entire body, each modeled in extensive detail. His most recent model has 75 bones, 846 muscles, and 354,000 soft tissue elements.

The more recent work is in developing intelligent agents in urban settings, each with a set of social behaviors and goals, though with necessarily simple physical models. The eventual and very long-term goal is to have a full-detail physical model coupled with convincing and fully autonomous behavior.

Interactive Realism: A Call to Arms

The dinner talk was given by Peter Shirley of NVIDIA. This was the “motivational” talk, with his intended goal of having computer graphics that are both pleasing and predictive. Some may think that we have already reached the point of graphics that are “good enough,” but he disagrees. He referenced recent games and research to point out the areas that he feels need the most work. From his slides, these are:

- Volume lighting / shadowing

- Indoor-outdoor algorithms

- Coarse / fine lighting

- Artist / designer-in-the-loop

- Motion blur and defocus blur

- Material models

- Polarization

- Tone mapping

He concluded with some action items for the attendees, which include reforming the way computer graphics research is done, and lobbying for more funding. From the talk and subsequent Q&A, it looks like a lot of people are not happy with the way SIGGRAPH handles papers, a world I know very little about.

The Evolution of Precomputed Lighting for Games

The capstone address was given by Peter-Pike Sloan of Disney Interactive Studios. He presented essentially a history of precomputed lighting for games from Quake, to Halo 3, and beyond. Such lighting trades off flexibility for quality and performance, i.e. you can get very convincing and fast lighting with some important restrictions. This turned out to be a surprisingly large topic, split mostly between techniques for static and dynamic elements, like environments and characters, respectively.

You may wonder why this is relevant beyond a history lesson, since the trend in research is toward techniques that do not require precomputation, and that includes lighting. But precomputed lighting is still relevant for low-end hardware, like mobile devices, and for cases where artist control is more important than automated results.

Wrap It Up!

Thanks for making it this far. As you can see, it was a very busy weekend! Like the 2008 conference, this was a great opportunity to see the state-of-the-art in computer graphics and interaction research in a more intimate setting. I hope this was useful, and please reply here if you have any comments.

One that fooled me

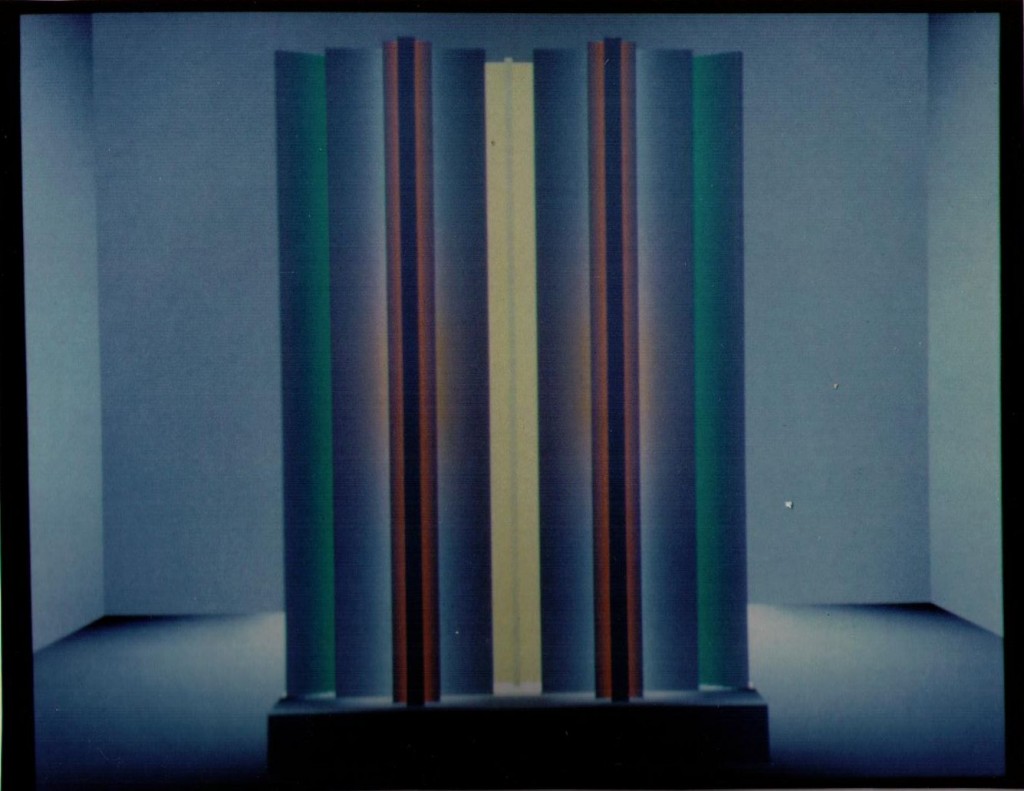

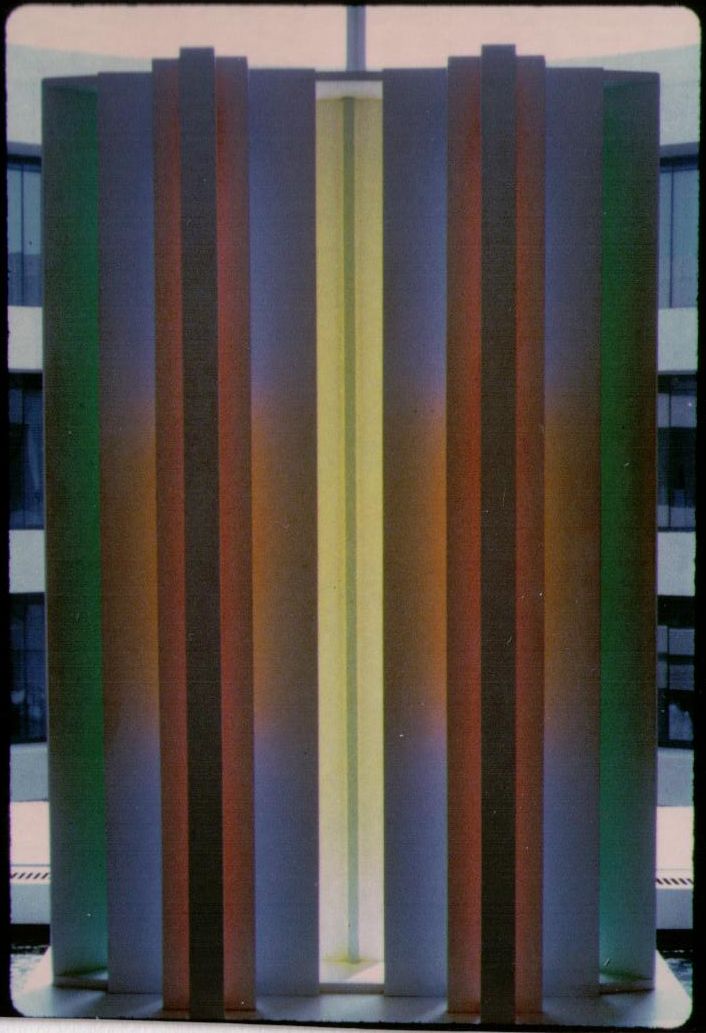

In my post about Ken Torrance I mentioned seeing in 1984 a synthesized image that looked real. The soft shading of radiosity was a new look, not at all like the sharp-edged shadows and reflections seen with classical ray tracing. Cindy Goral kindly scanned in the images from her thesis (they’re also reprinted in Cohen and Wallace’s book on radiosity and Roy Hall’s book on illumination and color), as I wanted to put them up, for old times’ sake.

The object is a sculpture by John Ferren, made in 1968, entitled Construction in Wood, a Daylight Experiment. I was happy to find this sculpture is still sometimes rotated into display at the Hirshhorn in Washington, DC, where Cindy saw it back around 1984. To quote this page, from 2007: “It is set up in front of an unshaded window, so that sunlight reflects the fluorescent paint on the back sides of the wood slats onto the white paint on the front sides, tricking your eye into imagining that the light comes from fluorescent bulbs.” Also, through the miracle of Google, Cohen and Wallace’s description of the sculpture is easily accessible; see that page for an overhead view. No Google Image or Flickr of it, though, that I could find.

What’s most interesting about this sculpture from a CG standpoint is how it was practically designed as a worst-case for ray tracing and best-case for radiosity. All the surfaces facing the viewer are white, so it is only through indirect diffuse-diffuse interreflection, a.k.a. color bleeding, that you see any color. A classical ray trace comes out black, as no light directly illuminates any of the surfaces. Here’s a modern-day version with Maya, at the bottom of the page (update: shucks, dead link and archive.org doesn’t have it. But, there’s a SketchUp model here to download, and a free SKP importer for Blender).

The sculpture, simulation:

and reality (photo by Jerry Scharf):