“Done puttering.” Ha, I’m a liar. Here’s a follow-up to the first article, a follow-up which just about no one wants. Short version: you can compute such groups of colors other ways. They all start to look a bit the same after a while. Plus, important information on what color is named “lime.”

So, I received some feedback from some readers. (Thanks, all!)

Peter-Pike Sloan gave my technique the proper name: Farthest First Traversal. Great! “Low discrepancy sequences” didn’t really feel right, as I associate that technique more with quasirandom sampling. He writes: “I think it is generally called farthest point sampling, it is common for clustering, but best with small K (or sub-sampling in some fashion).”

Alan Wolfe said, “You are nearly doing Mitchell’s best candidate for blue noise points :). For MBC, instead of looking through all triplets, you generate N*k of them randomly & keep the one with the best score. N is the number of points you have already. k is a constant (I use 1 for bn points).” – He nailed it, that’s in fact the inspiration for the method I used. But I of course just look through all the triplets, since the time to test them all is reasonable and I just need to do so once. Or more than once; read on.
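To make the difference between the two approaches concrete, here is a minimal Python sketch, not the Perl program from the original post. The function names, the coarse 5x5x5 grid, and the plain RGB distance are all assumptions chosen so it runs instantly. `farthest_first` tests every grid triplet each round, while `best_candidate` follows Alan's description and scores only N*k random triplets.

```python
import itertools
import math
import random


def min_dist(p, chosen):
    """Distance from p to its nearest already-chosen point."""
    return min(math.dist(p, q) for q in chosen)


def farthest_first(candidates, first, count):
    """Exhaustive version: score every candidate triplet each round."""
    chosen = [first]
    while len(chosen) < count:
        chosen.append(max(candidates, key=lambda p: min_dist(p, chosen)))
    return chosen


def best_candidate(first, count, k=1, rng=None):
    """Mitchell's best candidate: score only N*k random triplets each round."""
    rng = rng or random.Random(0)  # fixed seed is an assumption, for repeatability
    chosen = [first]
    while len(chosen) < count:
        n = len(chosen) * k  # N is the number of points picked so far
        trial = [(rng.random(), rng.random(), rng.random()) for _ in range(n)]
        chosen.append(max(trial, key=lambda p: min_dist(p, chosen)))
    return chosen


# Coarse 5x5x5 grid (hypothetical resolution) so the exhaustive search is instant.
levels = [i / 4 for i in range(5)]
grid = list(itertools.product(levels, repeat=3))

exhaustive = farthest_first(grid, (1.0, 1.0, 1.0), 8)
sampled = best_candidate((1.0, 1.0, 1.0), 8)
```

Starting from white on this grid, the exhaustive search picks black second, as you would expect with a plain RGB distance. The random version trades that guarantee for speed.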

Matt Pharr says he uses a low discrepancy 3D Halton sequence of points in a cube:

I should have thought of trying those, it makes sense! My naive algorithm’s a bit different and doesn’t have the nice feature that adjacent colors are noticeably different, if that’s important. If I had had this sequence in hand, I would never have delved. But then I would never have learned about the supposed popularity of lime.
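For anyone who wants to try the idea, a 3D Halton sequence is only a few lines. This is a sketch under my own assumptions, not Matt's code: the bases 2, 3, and 5, the direct mapping of the three coordinates to red, green, and blue, and the function names are all choices made for illustration.

```python
def radical_inverse(index, base):
    """Mirror the base-`base` digits of index around the radix point."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def halton_color(index):
    """Map a 1-based index to an (r, g, b) triplet in [0, 1), bases 2, 3, 5."""
    return tuple(radical_inverse(index, base) for base in (2, 3, 5))


# First eight colors of the sequence; index 0 is skipped since it maps to black.
palette = [halton_color(i) for i in range(1, 9)]
```

Because each coordinate jumps around its own axis, consecutive entries tend to land far apart, which is where the "adjacent colors differ" property comes from.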

Bart Wronski points out that you could use low-discrepancy normalized spherical surface coordinates:

Since they’re on a sphere, you get only those colors at a “constant” distance from the center of the color cube. These, similarly, have the nice “neighbors differ” feature. He used this sequence, noting there’s an improved R2 sequence (this page is worth a visit, for the animations alone!), which he suspects won’t make much difference.
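Here is one way the spherical idea could look in Python, using the R2 sequence mentioned above rather than the sequence Bart actually used. The equal-area mapping from the unit square to the sphere, the radius of 0.5 around the cube center, and the function names are my assumptions, so treat this as a sketch of the concept only.

```python
import math

PLASTIC = 1.32471795724474602596  # real root of x**3 = x + 1
A1, A2 = 1.0 / PLASTIC, 1.0 / PLASTIC ** 2


def r2_point(n):
    """n-th point of the 2D R2 low-discrepancy sequence in the unit square."""
    return ((0.5 + A1 * n) % 1.0, (0.5 + A2 * n) % 1.0)


def sphere_color(n):
    """Map the n-th R2 point onto a sphere of radius 0.5 inside the RGB cube."""
    u, v = r2_point(n)
    z = 2.0 * u - 1.0  # uniform in z gives an equal-area mapping
    radius = math.sqrt(max(0.0, 1.0 - z * z))
    angle = 2.0 * math.pi * v
    direction = (radius * math.cos(angle), radius * math.sin(angle), z)
    # Center the unit direction at (0.5, 0.5, 0.5) and shrink it to fit the cube.
    return tuple(0.5 + 0.5 * d for d in direction)


palette = [sphere_color(n) for n in range(1, 17)]
```

Every color produced this way sits exactly 0.5 from the cube's center, so you never get white, black, or the fully saturated corners.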

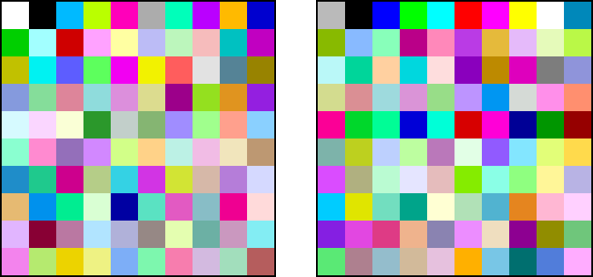

Veedrac wrote: “Here’s a quicker version if you don’t want to wait all day.” He implemented the whole shebang in the last 24 hours! It’s in Python using numpy, includes skipping dark colors and grays, plus a control to adjust for blues looking dark. So, if you want to experiment with Python code, go get his. It takes 129 seconds to generate a sequence of 256 colors. Maybe there’s something to this Python stuff after all. I also like that he does a clever output trick: he writes swatch colors to SVG, instead of laboriously filling in an image, like my program does. Here’s his pattern, starting with gray (the only gray), with these constraints:
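The SVG trick is easy to borrow. The following is my own minimal sketch of the idea, not Veedrac's code; the function name, the grid layout, and the default sizes are assumptions.

```python
def swatches_svg(colors, columns=16, size=32):
    """Return an SVG document with one square per (r, g, b) color, 0-255 each."""
    rows = (len(colors) + columns - 1) // columns  # round up to whole rows
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{columns * size}" height="{rows * size}">'
    ]
    for i, (r, g, b) in enumerate(colors):
        x, y = (i % columns) * size, (i // columns) * size
        parts.append(
            f'<rect x="{x}" y="{y}" width="{size}" height="{size}" '
            f'fill="#{r:02x}{g:02x}{b:02x}"/>'
        )
    parts.append("</svg>")
    return "\n".join(parts)


svg_text = swatches_svg([(255, 0, 0), (0, 128, 0), (0, 0, 255)], columns=3)
```

Write `svg_text` to a file ending in `.svg` and any browser will display it, with no image library needed.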

Towaki Takikawa also made a compact python/numpy version of my original color-cube tester, one that also properly converts from sRGB instead of my old-school gamma==2.2. It runs on my machine in 19 seconds, vs. my original running overnight. The results are about the same as mine, just differing towards the end of the sequence. This cheers me up – I don’t have to feel too guilty about my quick gamma hack. I’ve put his code here for download.
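To see why the gamma hack does little harm, you can compare the two decoding curves directly. This sketch uses the standard piecewise sRGB formula; it is not taken from Towaki's code, and the function names are mine.

```python
def srgb_to_linear(c):
    """Piecewise sRGB decoding for a channel value c in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def gamma22_to_linear(c):
    """The old-school approximation: a plain power curve."""
    return c ** 2.2


# Largest gap between the two curves over all 8-bit channel values.
worst = max(
    abs(srgb_to_linear(i / 255) - gamma22_to_linear(i / 255)) for i in range(256)
)
```

The two curves stay close over the whole 8-bit range, which fits with the sequences only diverging near the end, where the remaining candidates are nearly tied.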

Andrew Helmer wrote: “I had a very similar idea using Faure (0,3)-sequences rather than maximizing neighbor distances! This has really nice progressive ‘stratification’ properties.”

John Kaniarz wrote: “When I was reading your post on color sequences it reminded me of an on the fly solution I read years ago. I hunted it down only to discover that it only solved the problem in one dimension and the post has been updated to recommend a technique similar to yours. However, it’s still a neat trick you may be interested in. The algorithm is nextHue = (hue + 1/phi) % 1.0; (for hue in the range 0 to 1). It never repeats the same color twice and slowly fills in the space fairly evenly. Perhaps if instead of hue it looped over a 3-D space filling curve (Morton perhaps?), it could generate increasingly large palettes. Aras has a good post on gradients that use the Oklab perceptual color space that may also be useful to your original solution.”
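John's one-liner expands into a small Python sketch like this. The update rule is his; the fixed saturation and value, the HSV conversion, and the function names are assumptions I added to turn hues into displayable colors.

```python
import colorsys

INV_PHI = 2.0 / (1.0 + 5.0 ** 0.5)  # 1/phi, about 0.618


def golden_hues(count, start=0.0):
    """Hues in [0, 1) generated by nextHue = (hue + 1/phi) % 1.0."""
    hues, hue = [], start
    for _ in range(count):
        hues.append(hue)
        hue = (hue + INV_PHI) % 1.0
    return hues


def hue_palette(count, saturation=0.8, value=0.95):
    """Convert the hue sequence to RGB triplets (saturation/value are arbitrary)."""
    return [colorsys.hsv_to_rgb(h, saturation, value) for h in golden_hues(count)]


palette = hue_palette(12)
```

Since only hue varies, this covers one dimension of the color space, which is the limitation John mentions.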

Looking at that StackOverflow post John notes, the second answer down has some nice tidbits in it. The link in that post to “Paint Inspired Color Compositing” is dead, but you can find that paper here, though I disagree that this paper is relevant to the question. But, there’s a cool tool that post points at: I Want Hue. It’s got a slick interface, with all sorts of things you can vary (including optimized for color blindness) and lots of output formats. However, it doesn’t give an optimized sequence, just an optimized palette for a fixed number of colors. And, to be honest, I’m not loving the palettes it produces, I’m not sure why. Which speaks to how this whole area is a fun puzzle: tastes definitely vary, so there’s no one right answer.

Josef Spjut noted this related article, which has a number of alternate manual approaches to choosing colors, discussing reasons for picking and avoiding colors and some ways to pick a quasirandom order.

Nicolas Bonneel wrote: “You can generate LDS sequences with arbitrary constraints on projection with our sampler :P” and pointed to their SIGGRAPH 2022 paper. Cool, and correct, except for the “you” part ;). I’m joking, but I don’t plan to make a third post here to complete the trilogy. If anyone wants to further experiment, comment, or read more comments, please do! Just respond to my original Twitter post.

Pontus Andersson pointed out this colour-science Python library for converting to a more perceptually uniform colorspace. He notes that CAM16-UCS is one of the most recent but that the original perceptually uniform colorspace, CIELAB, though less accurate, is an easier option to implement. There are several other options in between those two as well, where increased accuracy often requires more advanced models. Once in a perceptually uniform colorspace, you can estimate the perceived distance between colors by computing the Euclidean distances between them.

Andrew Glassner asked the same, “why not run in a perceptual color space like Lab?” Andrew Helmer did, too, noting the Oklab colorspace. Three, maybe four people said to try a perceptual color space? I of course then had to try it out.

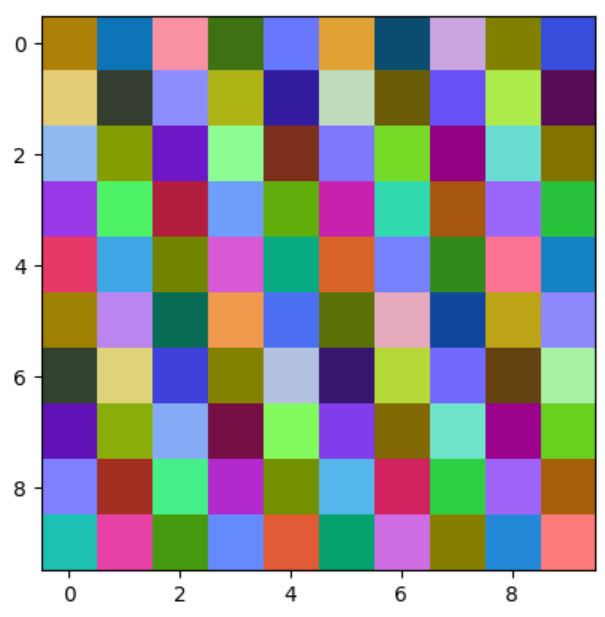

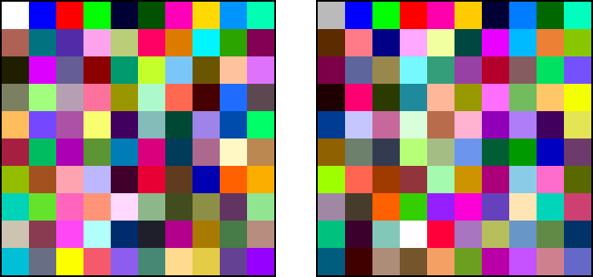

Tomas Akenine-Möller pointed me at this code for converting from sRGB to CIELab. It’s now yet another option in my (now updated) perl program. Here’s using 100 divisions (i.e., 0.00, 0.01, 0.02…, 1.00 – 101 levels on each color axis) of the color cube, since this doesn’t take all night to run – just an hour or two – and I truly want to be done messing with this stuff. Here’s CIELab starting with white as the first color, then gray as the first:

Get the data files here. Notice the second color in both is blue, not black. If you’re paying attention, you’ll now exclaim, “What?!” Yes, blue (0,0,255) is farther away from white (255,255,255) than black (0,52,0) is from white, according to CIELab metrics. And, if you read that last sentence carefully, you’ll note that I listed the black as (0,52,0), not (0,0,0). That’s what the CIELab metric said is farthest from the colors that precede it, vs. full black (0,0,0).

I thought I had screwed up their CIELab conversion code, but I think this is how it truly is. I asked, and Tomas replied, “Euclidean distance is ‘correct’ only for smaller distances.” He also pointed out that, in CIELab, green (0,255,0) and blue (0,0,255) are the most distant colors from one another! So, it’s all a bit suspect to use CIELab at this scale. I should also note there are other CIELab conversion code bits out there, like this site’s. It was pretty similar to the XYZ->CIELab code Tomas points at (not sure why there are differences), so, wheels within wheels? Here’s my stop; I’m getting off the tilt-a-whirl at this point.
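If you want to check these distance claims yourself, here is a compact sRGB-to-CIELab sketch in Python. It uses the textbook formulas with a D65 white point, which is an assumption on my part; it is not the code Tomas pointed at, and other conversions may differ slightly in their constants.

```python
import math

WHITE_D65 = (0.95047, 1.0, 1.08883)  # assumed reference white


def srgb_to_linear(c):
    """Piecewise sRGB decoding for a channel value c in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def rgb_to_lab(rgb):
    """Convert an 8-bit sRGB triplet to CIELab (L, a, b)."""
    r, g, b = (srgb_to_linear(v / 255.0) for v in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t):
        # Cube root above the CIE threshold, linear segment below it.
        return t ** (1 / 3) if t > 216 / 24389 else (24389 / 27 * t + 16) / 116

    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), WHITE_D65))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def lab_distance(a, b):
    """Euclidean distance between two sRGB colors measured in CIELab."""
    return math.dist(rgb_to_lab(a), rgb_to_lab(b))


white, black = (255, 255, 255), (0, 0, 0)
green, blue = (0, 255, 0), (0, 0, 255)
blue_beats_black = lab_distance(white, blue) > lab_distance(white, black)
```

With these constants, `blue_beats_black` comes out true, and green to blue is a longer hop than white to black, matching what Tomas described.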

Here are the original RGB distance “white” and “gray” sequences, for comparison (data files here):

Interesting that the RGB sets look brighter overall than the CIELab results. Might be a bug, but I don’t think so. Bart Wronski’s tweet and Aras’s post, “Gradients in linear space are not better,” mentioned earlier, may apply. Must… resist… urge to simply interpolate in sRGB. Well, actually, that’s how I started out, in the original post, and convinced myself that linear should be better. There are other oddities, like how the black swatches in the CIELab are actually (0,52,0) not (0,0,0). Why? Well…

At this point I go, “any of these look fine to me, as I would like my life back now.” Honestly, it’s been educational, and CIELab seems perhaps a bit better, but after a certain number of colors I just want “these are different enough, not exactly the same.” I was pretty happy with what I posted yesterday, so am sticking with those for now.

Tomas also noted that color vision deficiency is another thing that could be factored in, and Pontus pointed to the matrices and related publication here. I truly will leave that for someone else who wants to experiment.

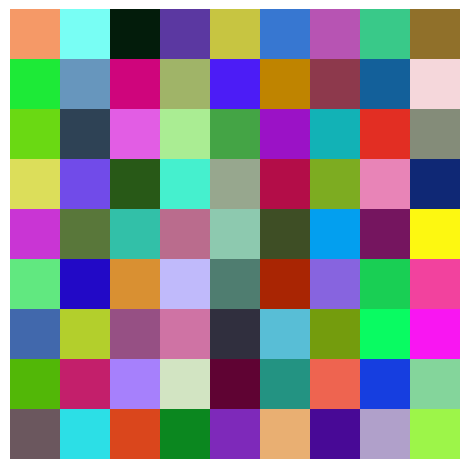

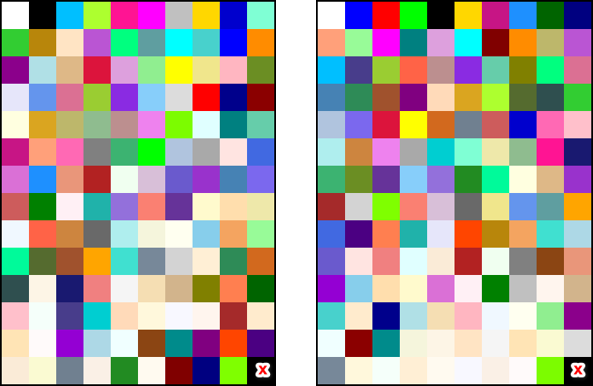

Mark Kilgard had an interesting idea of using the CSS Color Module Level 4 names and making a sequence using just them. That way, you could use the “official” color name when talking about it. This of course lured me into spending too much time trying this out. The program’s almost instantaneous to run, since there are only 139 different colors to choose from, vs. 16.7 million. Here’s the ordered name list computed using RGB and CIELab distances:

Ignore the lower right corner – there are 139 colors, which doesn’t divide nicely (it’s prime). Clearly there are a lot of beiges in the CSS list, and in both solutions these get shoved to the bottom of the list, though CIELab feels like it shoves these further down – look at the bottom two rows on the right. Code is here.

The two closest colors on the whole list are, in both cases, chartreuse (127, 255, 0) and lawngreen (124, 252, 0) – quite similar! RGB chose chartreuse last; CIELab chose lawngreen last. I guess picking one over the other depends on whether you prefer liqueurs or mowing.
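Finding the closest pair is a brute-force check over all pairs, which is trivial at this size. The sketch below uses only a hand-picked subset of the CSS names (the subset and the function name are my choices) and plain RGB distance, so it illustrates the test rather than reproducing the full 139-color run.

```python
import itertools
import math

# A small, hand-picked subset of the CSS named colors (not the full list).
CSS_SUBSET = {
    "red": (255, 0, 0),
    "green": (0, 128, 0),
    "lime": (0, 255, 0),
    "blue": (0, 0, 255),
    "chartreuse": (127, 255, 0),
    "lawngreen": (124, 252, 0),
    "rebeccapurple": (102, 51, 153),
    "beige": (245, 245, 220),
}


def closest_pair(named):
    """Return the two names whose RGB triplets are nearest each other."""
    return min(
        itertools.combinations(sorted(named), 2),
        key=lambda pair: math.dist(named[pair[0]], named[pair[1]]),
    )


pair = closest_pair(CSS_SUBSET)
```

On this subset the winner is chartreuse and lawngreen, which differ by just 3 in red and 3 in green.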

Looking at these color names, I noticed one new color was added going from version 3 to 4: Rebecca Purple, which has a sad origin story.

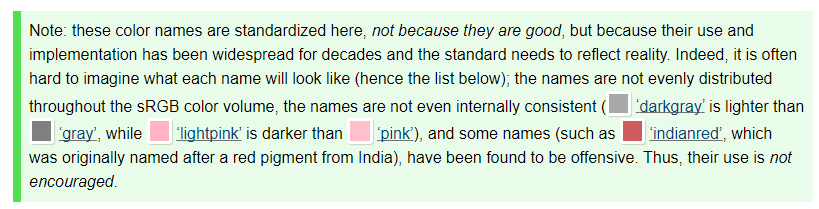

Since you made it this far, here’s some bonus trivia on color names. In the CSS names, there is a “red,” “green,” and “blue.” Red is as you might guess: (255,0,0). Blue is, too: (0,0,255). Green is, well, (0,128,0). What name is used for (0,255,0)? “Lime.”

In their defense, they say these names are pretty bad. Here’s their whole bit, with other fun facts:

My response: “Lime?! Who the heck has been using ‘lime’ for (0,255,0) for decades?” I suspect the spec writers had too much lime (and rum) in the coconut when they named these things. Follow-up: Michael Chock responds, “Paul Heckbert.”