Here it is – you should recognize the third author’s name for sure. I’ve only skimmed a bit, but wow, very interesting. Many of the figures are indeed interactive, which is magical. I also like the idea that you can move the mouse over a term and a pop-up shows more information about it. Seriously, go check out a section right now.

Seven Things for August 22, 2015

Last collection of links for a while – I’m pretty much caught up. Here’s a rundown of things that are more physical:

- Where’s Waldo in the real world; specifically, Seattle. Info. Some of the Easter Eggs are truly great.

- Pixelated hair. I collect anything where “X is used as pixels”; link collection here (and send me more).

- I’m impressed by Google Cardboard. A local architecture firm has been using it to give clients a much better sense of their designs. The fact that you can pre-render at very high quality I consider a large advantage over GPU-based VR. Also, it seems like many firms overbuilt, so these viewers are now dirt cheap, e.g. less than $3 with free shipping.

- Surroundings:

- The Ricoh Theta gives surprisingly nice instant IBLs in a relatively cheap ($300) compact camera – gallery, review.

- Matterport looks like a pretty nice room capture device.

- Photosynth 3 is strangely compelling at times. On one level it’s a low-frame-count video you can scrub through, but scenes often have a surreal feel as interpolations are shown.

- Intel Thunderbolt 3 demos, showing a laptop driving an external GPU. Annoying ad will play, but then the chewy bit of the video plays. Too much info about USB & Thunderbolt here.

- If you have lots of old business cards, two words: Menger Sponge.

- This Is Colossal covers lots of interesting artistic and well-crafted works. Mostly real-world stuff (I liked this mirror work), and also great things such as Bees & Bombs (example below).

Seven Things for August 21, 2015

I’ve burnt through most of my SIGGRAPH tidbits. Now to start running through a few worthwhile articles, resources, and sites I’ve found the past months:

- Colors and words article – a must-read. Teaser: “So he raised his daughter while being careful to never describe the color of the sky to her, and then one day asked her what color she saw when she looked up.”

- IKEA has been using V-Ray for much of its catalog for years. Favorite quote: “But the real turning point for us was when, in 2009, they called us and said, ‘You have to stop using CG. I’ve got 200 product images and they’re just terrible. You guys need to practise [sic] more.’ So we looked at all the images they said weren’t good enough and the two or three they said were great, and the ones they didn’t like were photography and the good ones were all CG!”

- Cambridge, Mass. (which I live next to) as a 3D map in your browser. Background info here. WebGL is great.

- Slightly spooky 3D program, done in CSS (that’s right – no WebGL here). Other fun experiments by the author here.

- Languages: I hadn’t heard of a few of these C++ tools. The Swift language, which I’ve heard nice things about, is going to be open-sourced by Apple (surprising, for Apple). Michael Gleicher mentioned liking the free book Javascript in 10 Minutes.

- Tools: For home use only, Glary Utilities is a bunch of free utilities – two minutes to clean off various types of sludge from your PC. Everything is a simple super-fast file and folder name searcher for Windows. I’ve added these to the bottom section of the portal page.

- Ray tracing using armor stands in Minecraft. Things just keep getting weirder.

Seven Things for August 20, 2015

Still more things, bits of info worth knowing (at least to me – now I know where I’ve written it all down):

- glTF is an up-and-coming format for transmitting 3D models, tailored for WebGL and OpenGL – they like to think of it as a 3D model codec. There’s three.js and Node.js support, as well as a Collada converter and a separate FBX converter. There’s more explanation of glTF in the presentation at the WebGL BOF. Compression progress here, discussion here. (Thanks to Patrick Cozzi for these links.)

- I mentioned Shadertoy two days ago. I’ll mention it again! I’ve heard Iñigo Quilez’s YouTube channel has good tutorials on programming for Shadertoy, or just watch great demos (with no chance of locking up your GPU). Also, check this great Shadertoy illusion. My theory is every blog post should have a reference to Shadertoy, at least in my perfect world.

- The code for Epic’s Unreal Engine 4 is all open-sourced now. Best story for me at SIGGRAPH was of a guy who looked like a gang member coming to an Educator’s meeting and getting the signatures of some of the UE4 programmers, as he wanted to thank them for changing his life due to their code being accessible.

- Unity 5 is also free (including royalty free) for personal use (though not open source). Old news from March and GDC, but I realized I had only tweeted it, not blogged it.

- 3D printing. Yeah, it’s not graphics, but it’s close enough for me. The Computational Tools for 3D Printing course had a good introduction to the major types of 3D print processes, along with a useful walk down the software pipeline. BTW, I made a little page of links to 3D printing resources for beginners with a URL I can remember, bit.ly/info3dp

- I was surprised to learn that cross-site scripting attacks are 80% (by some measure) of all website security problems. A form of this type of attack was found and fixed back in summer 2011 for WebGL in Chrome and Firefox, with the concern that private textures from other sites could be read and copied by WebGL programs.

- Sketchfab has been adding cool new features, such as animation and object annotation (click horizontal arrows in lower right), as well as Oculus Rift support: just put “/embed?oculus=2” at the end of any model URL.

Going for a walk by Yann on Sketchfab

Seven Things for August 19, 2015

More stuff:

- New interactive 3D graphics books at SIGGRAPH 2015: WebGL Insights, GPU Pro 6 (Kindle right now, hardcover in September). Let me know if I missed anything (see full list here, which also includes links to Google Books previews for these new books).

- Updated book: 7th edition of the OpenGL SuperBible. I would guess that, with Vulkan coming down the pike, and Apple going with Metal and no longer developing OpenGL (in Mavericks it’s stuck at version 4.1, which dates back to 2010), this will be the final edition. Future students having to learn Vulkan or DirectX 12, well, that won’t be much fun at all…

- I mentioned yesterday how you can download the SIGGRAPH 2015 Proceedings for free this week. There’s more, in theory. Some of the links there have nothing as of right now. The Posters are worth a skim, especially since I didn’t see them at SIGGRAPH. I also liked the Studio PDF. It starts with a bunch of single-page talks that are fun to snack on, followed by a few random slidesets. Emerging Tech also has longer descriptions than on the ETech page (which has more pics and videos, however). If you gotta catch ’em all, there’s also a PDF for Panels.

- There have been many news articles recently about not viewing screens at bedtime. Right, sure. Michael Herf (former CTO at Picasa) is the president at f.lux, one company that makes screens vary in overall spectra during the day to ameliorate the problem. He pointed me at a useful-to-researchers bit: their fluxometer site, with spectra for many different displays, all downloadable.

- Oh, and related, a tip from Michael: Pantone stickers with differing colors (using metameric failure) under different temperature lights, so you can ensure you’re showing work under consistent lighting conditions.

- I was impressed by Halide, an MIT-licensed open source project for writing high performance image processing code (including GPU versions) from scratch. Most impressive is their case study for local Laplacian filters (p. 28), showing great performance with considerably less code and time coding vs. Adobe Photoshop’s efforts. Google and others use it extensively (p. 32).

- Path tracing is all the rage for the film industry; the Arnold renderer started it (AFAIK) and others have followed suit. Here’s an entertaining path trace of interior lighting for a Minecraft scene using the free Chunky path tracer. SPP is samples per pixel:

Seven Things for August 18, 2015

Seven things:

- Stephen Hill’s great collection of SIGGRAPH 2015 links.

- As he and others have noted, the entire SIGGRAPH 2015 proceedings is available to all for free download until the end of this week. Grab it now if you’re not a SIGGRAPH member. SIGGRAPH members always have Digital Library access to SIGGRAPH-sponsored conferences, even if not Digital Library subscribers, e.g. here’s the SIGGRAPH 2014 proceedings.

- New term: froxel – frustum voxel. Alex Evans mentioned it in his fascinating talk in the Advances in RTR course; on page 83 he notes, “The term originated at the Sony WWS ATG group, I believe.” Diagram. He’s semi-right that Shadertoy programs do ray marching through froxels at their simplest; a speedup for Shadertoy is using the minimum distance-field distance found to any object as a minimum step size (e.g., line 126 of this demo, most of which they live-coded during the wonderful Shadertoy studio workshop).

- Evidence that patents appear to not spur research and innovation, even for big pharma. I like The Economist, as it tries to weigh the evidence for & against some idea, vs. knee-jerking it one way or the other.

- Folklore 1: Jim Blinn confirmed that the teapot model was scaled down vertically because it looked nicer that way, not because the pixels were non-square (incorrectly propagated here and here). Jim & 3D printed teapot.

- Which reminds me: here’s my random set of pics from SIGGRAPH 2015, with captions. I like, “Hundreds of beautiful designs, and only one or two that suck.” Update: more photos from Mauricio Vives, along with WebGL specific shots. Need more? Everyone’s.

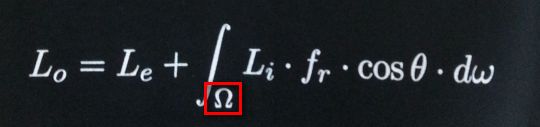

- Folklore 2: (Updated and corrected) Rendering equation: Kajiya used S as a subscript; in PoDIS Glassner used an omega symbol because it looks like a hemisphere, since that’s what was being integrated over. Wikipedia uses it.
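For the curious, here is a minimal sketch of the distance-field stepping mentioned in the froxel item above. It is not taken from the linked demo: the two-sphere scene, the function names, and the tolerances are all made up for illustration, and it is written in Python rather than GLSL to keep it self-contained. It steps along the ray by the minimum distance to any object, which is the usual sphere-tracing formulation of the idea.

```python
import math


def sphere_sdf(p, center, radius):
    """Signed distance from point p to a sphere's surface (negative inside)."""
    return math.dist(p, center) - radius


def scene_sdf(p):
    """Minimum distance from p to any object in a hypothetical two-sphere scene."""
    return min(
        sphere_sdf(p, (0.0, 0.0, 5.0), 1.0),
        sphere_sdf(p, (2.0, 0.0, 8.0), 1.5),
    )


def ray_march(origin, direction, max_steps=128, max_dist=50.0, eps=1e-4):
    """Return the distance along the ray to the first hit, or None on a miss.

    direction is assumed to be a unit vector.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = scene_sdf(p)
        if dist < eps:
            return t  # close enough to a surface to call it a hit
        # No object is closer than dist, so stepping that far cannot skip one.
        t += dist
        if t > max_dist:
            return None
    return None


hit = ray_march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
miss = ray_march((0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

The payoff is that empty space gets crossed in a few big steps, with small steps only near surfaces.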

Seven more tomorrow.

SIGGRAPH 2015: Calendars and Unlisted Events

Get them: http://skitten.org/2015/07/siggraph-2015-google-calendars

As of this moment it’s missing our own event Sunday, but you’re all coming to that anyway, right? I also believe there are one or two parties not listed, such as the Chapters Party.

Oh, and there’s an informal WebGL meetup Saturday night (tonight!) at the bar by the pool at the Figueroa.

Time to get on the plane – see you there!

Freezing Time at SIGGRAPH

Andrew Glassner and I are running a fun little workshop called “Freezing Time” this Sunday, as part of Making @ SIGGRAPH. Details: 12:15-1:45 PM, South Hall G – Studio Workstation Area

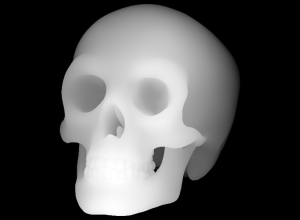

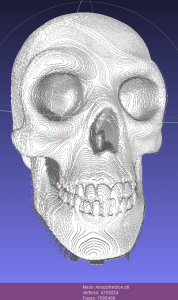

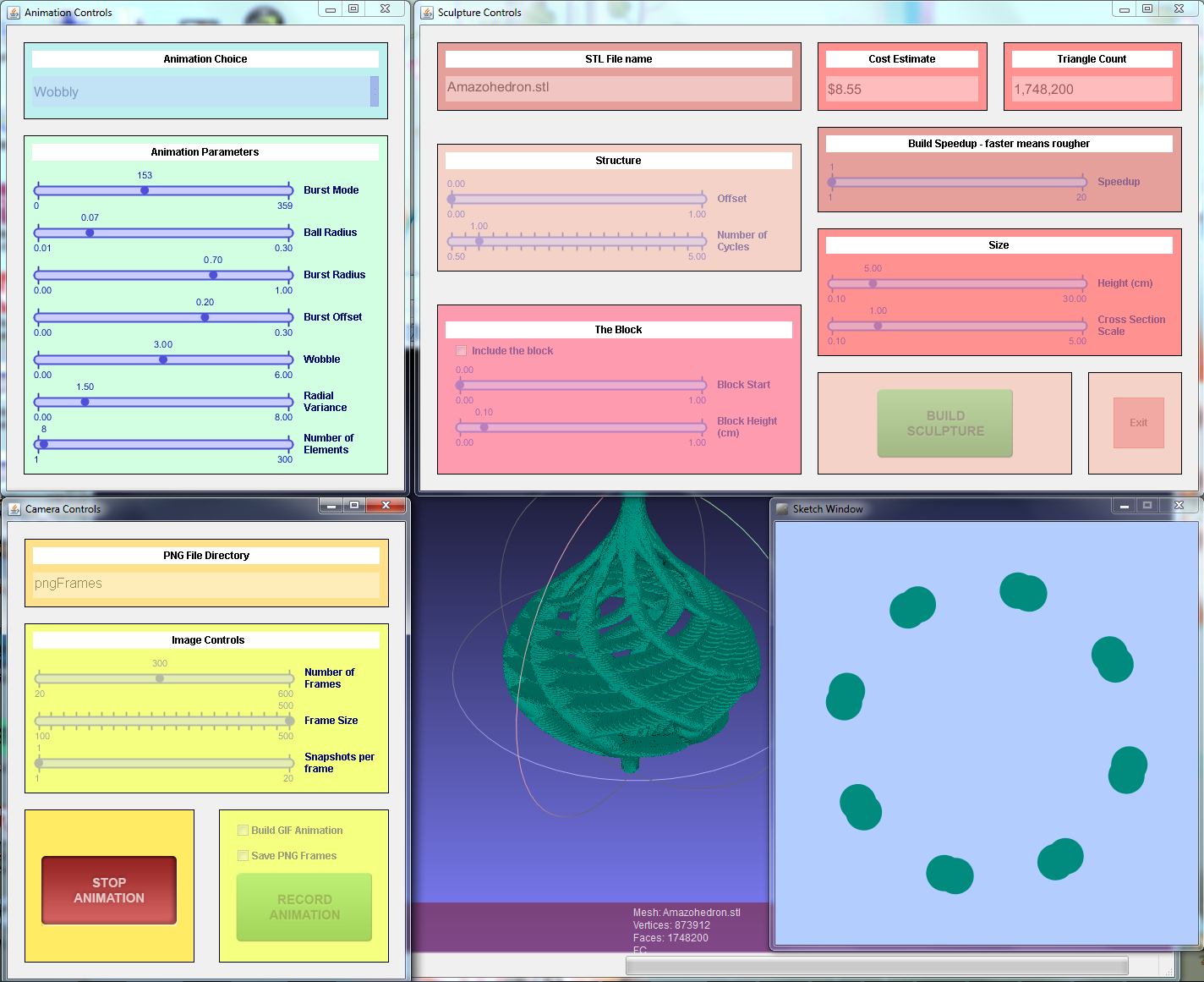

We’ll be teaching how to use T2Z, “Time To Z”, a program that lets you generate a 2D animation and then turn it into a 3D printable sculpture. Participants will be provided workstations, and there will be high-speed 3D printers available after the workshop. Can’t make it? Read on… Can make it? Get the code now and have fun on the plane ride to Los Angeles.

T2Z takes the frames of your animation and stacks them to form a 3D sculpture. This three.js program shows the transition for a number of animations – use the mouse to change the camera’s view. It’ll also get your cooling fan cranking, if your GPU is like mine. Turning “cycles” down to 1 keeps it sane.

Here’s a simple example. This animation:

gives this sculpture when you stack the frames:

The (self-imposed) challenge is to create an interesting, looping animation that also creates a visually-pleasing and printable sculpture. This example is pretty good, though the animation doesn’t quite perfectly loop. It would be easy enough to make it loop, but then we lose the base it can sit on. Tricky! If you want to hack on this code, it’s the Wobbly animation in T2Z.

There are many more examples in our gallery. I’ve been playing with the idea of data translation in general; you’ll see some experiments there. It’s been a great excuse for me to learn to use various tools at the local makerspace, Artisan’s Asylum, though I’ve not worked up the courage to actually use the plasma cutter yet. There are also plenty of fun & free tools for data manipulation, such as 123D Make, third-generation Photosynth, Sketchfab, 123D Catch, and on and on.

Even if you can’t attend the workshop, you can easily do this sort of experimentation at home. The T2Z code is free and open source, and well-documented. Companies such as Shapeways give you the ability to print high-quality models. We have lots of little animations in Animations.pde – go mess with them! There are also super-hacky “animations” at the end of this file: AnimatedGifReader turns a GIF into an STL 3D print file, FolderOfFramesReader does the same for a set of PNGs, and HeightField takes a grayscale image and uses the gray as a measure of height, e.g.

Processing is entirely fun to hack on (Debugger? We don’t need no stinkin’ debugger, println() is our only friend). It’s Java plus stuff to make graphics easy. I like the fact that to run the program you are faced with the code – the system invites you to start poking at the program from the outset. Andrew wrote most of the code, being a Processing pro (he wrote a book and teaches a course in it; the first half of his course is free). Me, I translated the Marching Cubes code to Processing: each pixel of each image is treated as a voxel, and the 3D model is the isosurface formed between the objects and the background.
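To make the pixel-as-voxel idea concrete, here is a rough sketch in Python (not Processing, and not the T2Z code). It assumes each frame is already a binary image stored as a list of rows of 0/1, and all the function names are hypothetical. Instead of running Marching Cubes it just counts the voxel faces that separate object from background, which is where the isosurface would pass.

```python
def stack_frames(frames):
    """Stack 2D binary frames (lists of rows of 0/1) into volume[z][y][x]."""
    height, width = len(frames[0]), len(frames[0][0])
    for frame in frames:
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all frames must be the same size")
    # Frame index becomes the z coordinate: time turns into height.
    return [[row[:] for row in frame] for frame in frames]


def is_filled(volume, x, y, z):
    """Voxel lookup that treats everything outside the volume as background."""
    if 0 <= z < len(volume) and 0 <= y < len(volume[0]) and 0 <= x < len(volume[0][0]):
        return volume[z][y][x] == 1
    return False


def count_surface_faces(volume):
    """Count voxel faces that lie between an object voxel and background."""
    neighbors = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    faces = 0
    for z, frame in enumerate(volume):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                if value != 1:
                    continue
                for dx, dy, dz in neighbors:
                    if not is_filled(volume, x + dx, y + dy, z + dz):
                        faces += 1
    return faces


# A one-pixel "animation": the dot stays put for two frames, giving a 1x1x2 column.
column = stack_frames([[[1]], [[1]]])
column_faces = count_surface_faces(column)
```

A real pipeline would hand the volume to Marching Cubes to get a smooth triangle mesh; the face count here is only a stand-in to show where the surface lives.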

We hope to see you on Sunday! Or better yet, online, where we hope to see you sending us animations for the gallery and pull requests for code you’ve added.

Where did all this come from? Last year around May Andrew started making a series of looping GIFs using Processing, taking after the Bees & Bombs Tumblr feed. His goal was to make animations worth posting. These can now be found on Andrew’s Tumblr feed. Steve Drucker and I were the critics, over more than half a year.

During this time I was attending and organizing 3D printer meetups in the Boston area. Mark Stock pointed out a fascinating way of modeling: instead of explicitly using union operations on 3D models, the traditional CAD approach, he instead deposited objects into a large voxel grid. It’s much simpler and faster to figure out if a voxel is inside some given primitive vs. performing a union or other constructive solid geometry operation on a set of models. For example, computing the union of thousands upon thousands of spheres will bring most CAD modelers to their knees. Voxel in/out functions are trivial to compute for spheres, and Marching Cubes then guarantees a watertight, well-formed model with no geometric singularities, precision problems, etc. 3D printers themselves have limits to precision, so using voxels is a good match. Here’s an example of Mark’s work:

So, for me, these two things combined: animations could be used to define voxels, and Marching Cubes used to generate 3D representations. I made an exceedingly slow GIF to STL converter in Perl and ran a bunch of Andrew’s GIFs through it. A few interesting forms turned up and that got me started on playing with what I call “323,” converting from some three-dimensional form of data (an animation being 2D plus time) to another (a sculpture).
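Here is a small sketch of the voxel-deposit idea described above, in Python. It is my own illustration, not Mark’s code: the grid layout, the choice to test voxel centers, and the function names are all assumptions. The union of any number of spheres falls out for free, since each sphere just marks the voxels it covers.

```python
import math


def make_grid(size):
    """Create an empty size x size x size voxel grid, indexed grid[z][y][x]."""
    return [[[False] * size for _ in range(size)] for _ in range(size)]


def deposit_sphere(grid, center, radius):
    """Mark every voxel whose center lies inside the sphere.

    Voxel (x, y, z) is assumed to have its center at (x+0.5, y+0.5, z+0.5).
    """
    size = len(grid)
    cx, cy, cz = center
    r2 = radius * radius

    def span(c):
        # Clamp the sphere's bounding box to the grid along one axis.
        return range(max(0, math.floor(c - radius)),
                     min(size - 1, math.ceil(c + radius)) + 1)

    for z in span(cz):
        for y in span(cy):
            for x in span(cx):
                dx, dy, dz = x + 0.5 - cx, y + 0.5 - cy, z + 0.5 - cz
                if dx * dx + dy * dy + dz * dz <= r2:
                    grid[z][y][x] = True  # union is just "set and forget"


def count_filled(grid):
    """Number of voxels marked as inside some primitive."""
    return sum(cell for plane in grid for row in plane for cell in row)


grid = make_grid(8)
deposit_sphere(grid, (4.0, 4.0, 4.0), 2.0)
one_sphere = count_filled(grid)
deposit_sphere(grid, (5.0, 4.0, 4.0), 2.0)  # overlaps the first sphere
two_spheres = count_filled(grid)
```

Overlaps need no special handling: a voxel that two spheres both cover is simply set twice. The filled grid is then what you would feed to Marching Cubes.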

Seeing the call for Making @ SIGGRAPH, we decided to go further and give a workshop on the process. The T2Z program that resulted is massively faster than my original Perl program, generating sculptures in a few seconds. It’s also much more usable, allowing you to make your own animations, hook up sliders to variables, and easily export them as GIFs, a set of PNGs, or a 3D STL model. Programming all this sucked up way more time than expected, and of course was highly addictive. Andrew made this Processing program do things that Nature did not intend (e.g., binary STL output and multi-window UI).

Personally, I find this whole design process entertaining. In idle moments (or at the dentist) I imagine what might make both an interesting animation and a worthwhile sculpture. It’s a fun way to think about modeling and animation, and one where my intuition doesn’t always pay off. The more I play, the more I learn.

Here’s a screenshot, to whet your appetite – click it for the full-size readable version:

So download the thing, install Processing and three little libraries (easy!), and start sliding sliders, pushing buttons, and hacking code! And let us know what you find.

BTW, if you want just one link to bookmark, it’s this: http://bit.ly/t2zspot

Web Page Updates

To celebrate Kavita Bala becoming the new Editor-in-Chief of ACM Transactions on Graphics, I updated the ancient resource pages I put there long ago:

- Software Tools page

- Research Resources page

I think the links here are fairly useful, in part due to great suggestions from people on Twitter – get them while they’re fresh. Let me know what other cool things I’m missing.

I also updated our own site’s portal page and graphics books page for good measure. One cool new link on the portal page is for Shader School, for learning shader programming, which a few people have recommended. The shader compile error messages are unfortunately obscured on some platforms, but if all else fails you can check the answers.

WebGL MOOC this summer

Want to learn computer graphics using WebGL from a MOOC during the summer? Learn for free from a master, Ed Angel, at Coursera.