I gave a talk about ray tracing yesterday. For my entertainment, before the talk I asked ChatGPT what ray tracing is. Its full answer was pretty good overall. However, it stumbled at the start – I’ll let you figure it out (hint: Plato). There are some other small oddities (e.g., “follow similar paths”) and “I wouldn’t have put it exactly like that” bits. Here’s the question and its answer.

What is ray tracing?

ChatGPT:

Ray tracing is a rendering technique used in computer graphics and computer-generated imagery (CGI) to simulate the way light interacts with objects in a virtual environment. It is a method for generating highly realistic images by tracing the path of individual rays of light as they interact with the objects in a scene. Ray tracing can simulate complex optical effects, such as reflections, refractions, shadows, and global illumination, with a high degree of accuracy and realism.

Here’s a simplified explanation of how ray tracing works:

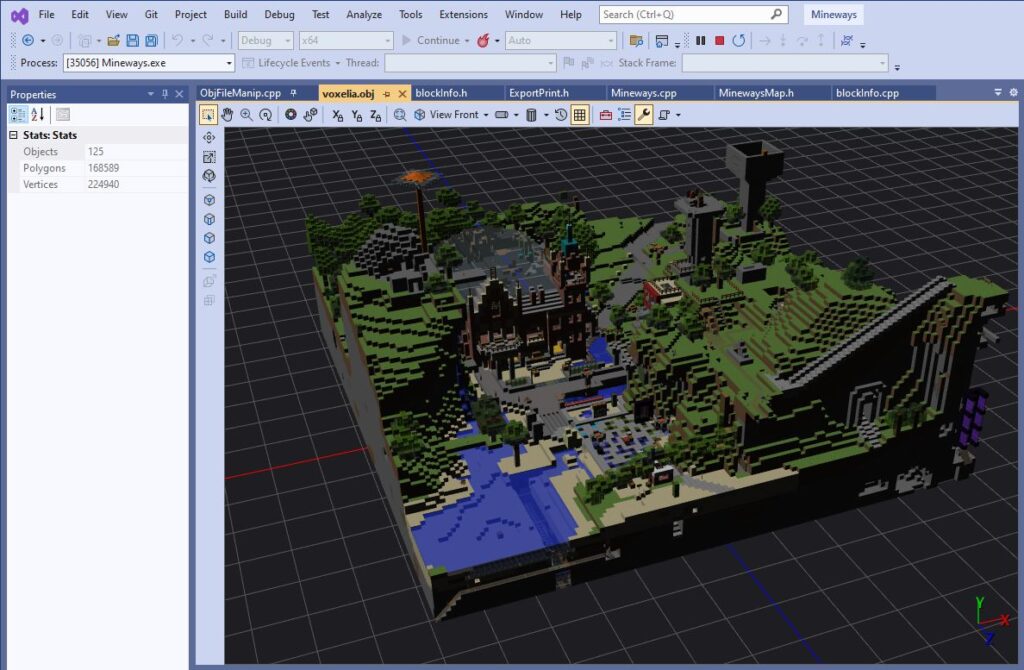

1. Ray Generation: In a ray tracing system, a virtual camera is placed within a 3D scene. Rays of light are generated from the camera’s viewpoint, with one ray typically cast for each pixel on the screen.

2. Ray Intersection: Each ray is traced into the scene, and its path is followed as it interacts with objects. When a ray encounters an object, the software calculates how it intersects with that object’s geometry.

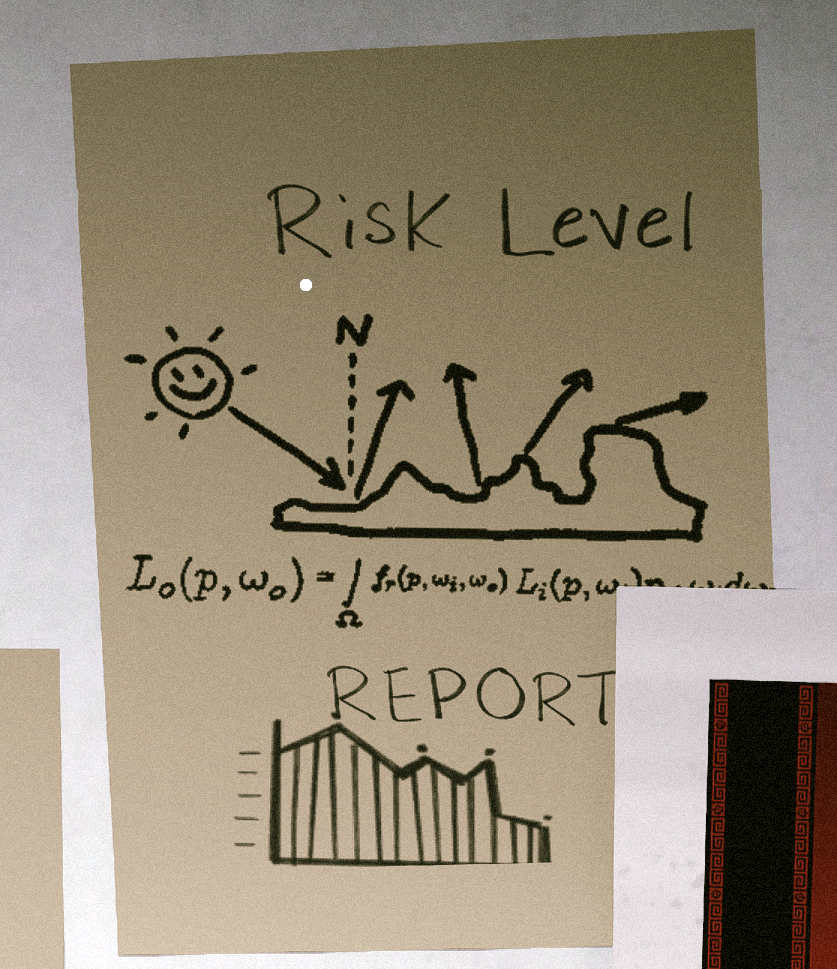

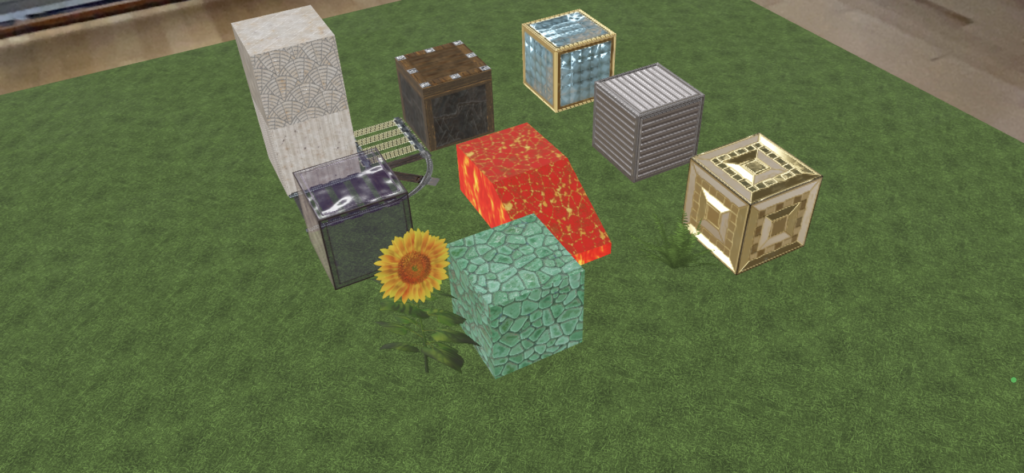

3. Shading and Lighting: After determining the intersection point, the renderer calculates how the object at that point interacts with light. This involves considering factors like material properties (e.g., reflectivity, transparency), surface normals, and lighting conditions (e.g., direct and indirect lighting).

4. Secondary Rays: In addition to primary rays cast from the camera, secondary rays may be generated to simulate effects like reflections, refractions, and shadows. These rays follow similar paths as primary rays but may be traced in different directions based on the materials and lighting in the scene.

5. Recursive Ray Tracing: To simulate complex lighting interactions, ray tracing often involves recursion. For example, when a ray hits a reflective surface, a new ray is generated to simulate the reflection, and this new ray undergoes the same process of intersection and shading. This recursion continues until a termination condition is met.

6. Image Formation: As the rays interact with objects and lights in the scene, they carry information about the color and intensity of light at each pixel. This information is used to generate the final image that is displayed on the screen.

Ray tracing is known for its ability to produce photorealistic images, making it a popular choice in fields like computer gaming, visual effects in film and television, architectural visualization, and more. However, it is computationally intensive and can require significant processing power, so real-time ray tracing in complex scenes is still a demanding task that often requires powerful hardware, such as high-end graphics cards. Advances in hardware and software continue to make ray tracing more accessible and practical for a wider range of applications.
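To make the six steps in the answer a bit more concrete, here is a minimal sketch in plain Python. None of it comes from ChatGPT's answer: the scene (`SPHERES`, `LIGHT_POS`, `BACKGROUND`), the function names, the pinhole camera at the origin, the single point light, and the simple diffuse-plus-mirror shading are all assumptions I picked to keep the example short. Treat it as an illustration of the structure, not as how a production renderer does it.

```python
import math

MAX_DEPTH = 3  # step 5: hypothetical termination condition for the recursion

def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

# Hypothetical scene: (center, radius, color, reflectivity)
SPHERES = [
    ((0.0, 0.0, -3.0), 1.0, (1.0, 0.2, 0.2), 0.3),
    ((0.0, -101.0, -3.0), 100.0, (0.8, 0.8, 0.8), 0.0),  # huge sphere as a floor
]
LIGHT_POS = (5.0, 5.0, 0.0)
BACKGROUND = (0.1, 0.1, 0.3)

def hit_sphere(origin, direction, center, radius):
    """Step 2: nearest distance t > 0 along a unit-length ray, or None on a miss."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-4:  # small epsilon avoids re-hitting the surface we start on
            return t
    return None

def nearest_hit(origin, direction):
    """Closest intersection over all spheres as (t, sphere), or None."""
    best = None
    for sphere in SPHERES:
        t = hit_sphere(origin, direction, sphere[0], sphere[1])
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return best

def trace(origin, direction, depth=0):
    """Steps 2-5: intersect, shade, spawn secondary rays, recurse."""
    hit = nearest_hit(origin, direction)
    if hit is None:
        return BACKGROUND
    t, (center, radius, color, reflectivity) = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))

    # Step 4: shadow ray toward the light
    to_light = sub(LIGHT_POS, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    light_dir = scale(to_light, 1.0 / light_dist)
    blocker = nearest_hit(point, light_dir)
    in_shadow = blocker is not None and blocker[0] < light_dist

    # Step 3: ambient term plus diffuse (cosine) term
    diffuse = 0.0 if in_shadow else max(0.0, dot(normal, light_dir))
    result = scale(color, 0.1 + 0.9 * diffuse)

    # Steps 4-5: mirror-reflection ray, traced recursively up to MAX_DEPTH
    if reflectivity > 0.0 and depth < MAX_DEPTH:
        refl_dir = sub(direction, scale(normal, 2.0 * dot(direction, normal)))
        reflected = trace(point, refl_dir, depth + 1)
        result = add(scale(result, 1.0 - reflectivity), scale(reflected, reflectivity))
    return result

def render(width, height):
    """Steps 1 and 6: one primary ray per pixel, collected into rows of RGB tuples."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel center onto an image plane at z = -1
            u = (2.0 * (x + 0.5) / width - 1.0) * (width / height)
            v = 1.0 - 2.0 * (y + 0.5) / height
            row.append(trace((0.0, 0.0, 0.0), normalize((u, v, -1.0))))
        image.append(row)
    return image
```

Calling `render(160, 120)` returns a list of rows of RGB tuples with channels in the range 0 to 1; writing them out as a PPM or PNG file is left out to keep the sketch short. Refraction and indirect lighting, which the answer also mentions, are not covered here.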