This report about I3D 2011 is from Mauricio Vives, a coworker at Autodesk; he also has photos from the symposium. The papers listing can be found at Ke-Sen Huang’s page and at the ACM Digital Library’s page (tabbed version here). The ACM site has some interesting info, by the way, such as acceptance rates and “most downloaded, most cited” for all I3D articles. I should also remind people: if you’re a member of ACM SIGGRAPH, you automatically have access to all SIGGRAPH-sponsored ACM Digital Library materials (which is almost all of their graphics papers).

In case you missed them, Naty’s reports (so far) about I3D are also on this blog: Keynote, Industry Session, and Banquet Talk.

Papers

Session: Filtering and Reconstruction

A Local Image Reconstruction Algorithm for Stochastic Rendering

In a stochastic rasterizer, effects like defocus (depth of field) and transparency need a very large number of samples (64 – 256) to remove noise and look decent. This paper describes a technique to remove the noise using far fewer samples, through sorting to selectively blur samples. The results look quite good, though it does have the disadvantage of blurring transparency.

Subpixel Reconstruction Antialiasing for Deferred Shading

The post-process MLAA antialiasing technique works to find sharp edges in an image and smooth them. It provides pretty good results. This technique (SRAA) is similar, but operates with supersampled depth and normal data to produce even better results with predictable performance. For example, it looks comparable to 16x SSAA (super-sampled antialiasing) in just 2 ms on a GTX 480 at HD resolution. As with MLAA, only single-sample shading is used, and it is compatible with deferred lighting. [The use of depth and normals means it is focused on geometric edge finding, so the technique is not applicable to shadow edges and NPR post-process thickened edges, for example. – Eric]

High Quality Elliptical Texture Filtering on GPU

Elliptical filtering is necessary for removing artifacts from some high-frequency textures, but is too slow for real-time applications. This is a technique for approximating true elliptical filtering using hardware anisotropic filtering. They claim it is easy to integrate, and they provide drop-in GLSL code to replace existing texture lookups.

Session: Lighting in Participating Media

Transmittance Function Mapping

This is about rendering light and shadow in participating media (e.g. fog, smoke) with single scattering. Two techniques are described: a faster one called volumetric shadow mapping for homogeneous media, and a slower one called transmittance function mapping for heterogeneous media. This is suitable for both offline rendering and interactive applications, but it is not quite real-time (> 30 fps) yet.

Real-Time Volumetric Shadows using 1D Min-Max Mipmaps

This is again about volumetric shadows, but with real-time results for homogeneous media, using only pixel shaders. A combination of epipolar warping on the shadow map, and a 1D min-max mipmap for storage, makes this possible. This is a pretty clever combination of techniques. [This paper was the “NVIDIA Best Paper Presentation”.]
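To make the min-max idea concrete, here is a minimal sketch in Python rather than a pixel shader. It assumes one row of shadow-map depths (as you would have after epipolar warping) and, as a deliberate simplification that is not from the paper, a ray at a single constant depth. The function names are hypothetical. The point is only that a (min, max) pair per span lets whole spans be classified as lit or shadowed without visiting every texel.

```python
def build_minmax_mipmap(depths):
    """Level 0 stores (d, d) per texel; each coarser level stores the
    (min, max) of up to two adjacent entries from the level below."""
    level = [(d, d) for d in depths]
    levels = [level]
    while len(level) > 1:
        coarser = []
        for i in range(0, len(level), 2):
            pair = level[i:i + 2]
            coarser.append((min(lo for lo, _ in pair),
                            max(hi for _, hi in pair)))
        levels.append(coarser)
        level = coarser
    return levels


def count_lit(levels, ray_depth, level=None, index=0):
    """Count texels whose stored depth lies beyond ray_depth (lit samples)."""
    if level is None:
        level = len(levels) - 1  # start at the single root entry
    lo, hi = levels[level][index]
    first = index << level                      # first texel this node covers
    span = min(1 << level, len(levels[0]) - first)
    if ray_depth < lo:
        return span  # nearer than every occluder in the span: all lit
    if ray_depth >= hi:
        return 0     # at or beyond every occluder: all shadowed
    # Mixed span: descend into the (up to two) children.
    total = 0
    for child in (2 * index, 2 * index + 1):
        if child < len(levels[level - 1]):
            total += count_lit(levels, ray_depth, level - 1, child)
    return total


row = [5, 7, 6, 8, 2, 3, 9, 1]
mipmap = build_minmax_mipmap(row)
print(count_lit(mipmap, 4))
```

In a real implementation the ray depth varies along the row, so the test against each (min, max) pair would use the ray's depth range over that span instead of one constant.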

Real-Time Volume Caustics with Adaptive Beam Tracing

This is an extension of previous beam-tracing work to allow for real-time caustics, with receiving surfaces and volumes handled separately. A coarse grid of beams is refined based on the geometry and viewer to put detail where it is needed most. This enhancement is something an effect like caustics certainly needs to look good.

Session: Collision & Sound

Sound Synthesis for Impact Sounds in Video Games

This paper was motivated by having very little memory on a game console to store physics-based sounds, e.g. for footsteps or colliding objects. This uses a combination of modal synthesis and spectral modeling synthesis to produce extremely small sound definitions that could be varied on demand to provide a variety of believable effects. I don’t know much about sound generation, but the audio results (from the only audio paper in the conference) were very good.
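For readers as unfamiliar with sound generation as I am, modal synthesis in its textbook form represents an impact as a sum of exponentially damped sinusoids, which is why the stored definition can be so small: a few numbers per mode instead of a recorded waveform. The sketch below shows only that textbook form, not the paper's method (which also involves spectral modeling synthesis). The function name and the mode values are made up for illustration.

```python
import math


def synthesize_impact(modes, duration=0.5, sample_rate=44100):
    """Render an impact sound as a sum of damped sinusoids.

    modes: list of (frequency_hz, damping_per_second, amplitude) tuples.
    Returns a list of float samples.
    """
    sample_count = int(duration * sample_rate)
    samples = []
    for i in range(sample_count):
        t = i / sample_rate
        value = sum(
            amplitude * math.exp(-damping * t) * math.sin(2.0 * math.pi * frequency * t)
            for frequency, damping, amplitude in modes
        )
        samples.append(value)
    return samples


# Hypothetical modes: three (frequency, damping, amplitude) triples are the
# entire "sound definition" here.
example_modes = [(440.0, 8.0, 1.0), (1230.0, 15.0, 0.5), (2710.0, 30.0, 0.25)]
impact = synthesize_impact(example_modes, duration=0.25, sample_rate=8000)
print(len(impact))
```

Varying the amplitudes or damping per impact is one plausible way to get the "varied on demand" behavior described above, though the report does not say how the paper does it.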

Collision-Streams: Fast GPU-based Collision Detection for Deformable Models

Fast Continuous Collision Detection using Parallel Filter in Subspace

Both of these papers address the same problem: how to quickly perform continuous collision detection, which works by interpolating motion between time steps. I am a novice when it comes to collision detection, so I didn’t take away much from these.
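As a novice-level illustration of what "continuous" means here, the sketch below finds the first time of contact between two spheres whose centers move linearly between two time steps. This is a far simpler case than the deformable triangle meshes the papers handle, and the function name is hypothetical. It does show why interpolating motion matters: a discrete overlap test at the start and end of the step would miss this collision entirely.

```python
import math


def first_contact_time(a_start, a_end, b_start, b_end, radius_sum):
    """Return the earliest t in [0, 1] at which two linearly moving spheres
    touch, or None if they do not touch during the step."""
    # Work in the frame of sphere B: relative position p and relative motion v.
    p = [a - b for a, b in zip(a_start, b_start)]
    v = [(a1 - a0) - (b1 - b0)
         for a0, a1, b0, b1 in zip(a_start, a_end, b_start, b_end)]
    # Solve |p + v t|^2 = radius_sum^2, a quadratic in t.
    qa = sum(c * c for c in v)
    qb = 2.0 * sum(pc * vc for pc, vc in zip(p, v))
    qc = sum(c * c for c in p) - radius_sum * radius_sum
    if qc <= 0.0:
        return 0.0   # already overlapping at the start of the step
    if qa == 0.0:
        return None  # no relative motion, so the gap never closes
    discriminant = qb * qb - 4.0 * qa * qc
    if discriminant < 0.0:
        return None  # the paths never come close enough
    t = (-qb - math.sqrt(discriminant)) / (2.0 * qa)
    return t if 0.0 <= t <= 1.0 else None


# Sphere A passes straight through stationary sphere B within one step.
print(first_contact_time((0, 0, 0), (10, 0, 0), (5, 0, 0), (5, 0, 0), 1.0))
```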

Session: Shadows

This is the session that was most interesting to me, as I work on shadow algorithms.

Shadow Caster Culling for Efficient Shadow Mapping

This paper has a simple idea: when rendering a shadow map, cull objects that don’t produce a visible shadow to improve performance. Here occlusion culling is used to determine which objects are visible from the light source, and then these are rendered to produce a receiver mask in the stencil buffer. The stencil occlusion query operation can then be used to skip irrelevant objects. There are a few methods to render the receiver mask, trading off complexity and accuracy. This was mostly tested with city scenes, where it offered the same images at about 5x speed.

Colored Stochastic Shadow Maps

This included a nice overview of the problem, terminology, and existing techniques: how to render colored shadows from translucent objects? This extends existing techniques for rendering stochastic transparency to also support colored transparent shadows. It has a filter that can be adjusted for performance and quality, being based on stochastic techniques. The paper has simple explanations of the algorithm, and it seems from the presentation that this is fairly easy to integrate in a system that already handles basic stochastic shadow maps.

Sample Distribution Shadow Maps

This was the paper I was most interested in seeing, since it is an extension to cascaded shadow maps (CSM), a popular shadowing technique. The idea here is quite simple: reduce perspective aliasing with shadow maps by analyzing the distribution of samples visible to the viewer. This can be used to determine tight near/far planes for CSM partitions, and for tightly fitting the light frustum to just the visible shadow receivers.

The analysis requires a reduction operation, which can be done with rasterization (D3D9/10) or compute shaders (D3D11). Using this algorithm can result in sub-pixel sampling in many cases using a 2K shadow map; see the example below.
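Here is a rough CPU-side sketch of the two steps, assuming the visible view-space depths are already available as a list. The min/max stands in for the GPU reduction. The logarithmic split is a common choice for CSM partitions and is my assumption here; this report does not say which partitioning scheme the paper uses. Function names are hypothetical.

```python
def tight_depth_range(visible_depths):
    """Reduction step: the nearest and farthest depth actually visible."""
    return min(visible_depths), max(visible_depths)


def logarithmic_splits(near, far, cascade_count):
    """Split [near, far] into cascade_count partitions with a constant
    far/near ratio per partition. Requires near > 0."""
    ratio = far / near
    return [near * ratio ** (i / cascade_count)
            for i in range(cascade_count + 1)]


# Hypothetical depths read back from the depth buffer.
near, far = tight_depth_range([4.0, 1.0, 16.0, 2.5])
print(logarithmic_splits(near, far, 4))
```

Compared with splitting the camera's full near/far range, fitting the splits to the visible range avoids spending shadow-map resolution on depth ranges that contain no visible samples.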

Session: Refraction & Global Illumination

Voxel-based Global Illumination

It wouldn’t be an interactive graphics conference without a paper about interactive global illumination, and here is one. The idea behind this technique is to create an atlas-based voxelization of the scene (every frame), and then perform fast ray casting into that grid. Direct lighting can be stored in the grid, or reflective shadow maps can be used; the latter seems to be preferred.

The technique is of course able to capture lighting and details that screen-space methods can’t. However, it can have artifacts that require a denser grid and a tuned offset to avoid self-intersections. Also, as noted earlier, it requires a texture atlas for generating the voxel grid. The results are interactive, if not quite real-time.

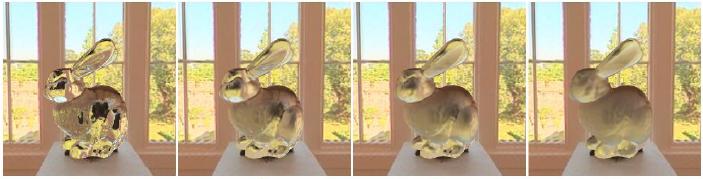

Real-Time Rough Refraction

Here the authors are solving the interesting, though somewhat specific, problem of rendering transparent materials (no scattering) with rough surfaces, which is different from translucent surfaces. Glossy reflection has been handled before; this is about glossy refraction. In this case, rays would be refracted twice, entering and exiting the surface, and this provides a fast way to perform what would otherwise be a double integration.

I wasn’t able to follow all of the math in this one, but the results are indeed real-time (> 30 fps), and comparable to what you would get out of a ray-tracer in dozens of seconds or minutes. There is also a cheap approximation for total internal reflection. This paper was selected as one of the best papers of the conference. An example with increasing roughness is shown below.

Screen-Space Bias Compensation for Interactive High-Quality Global Illumination with Virtual Point Lights

One of the problems with using virtual point lights (VPL) for global illumination is that you must clamp each light’s contribution above a certain amount of reflection to avoid pin-point light artifacts. This paper presents a fast way to compute the amount of light that was clamped away, which can then be added back to the VPL result. It is done in screen space, which can lead to some issues (as you might expect), but it is fully interactive and easy to integrate into other renderers that already have VPL.
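The sketch below shows where the clamped energy comes from, using the standard geometry term between a VPL and a receiving point. It only splits the term into the part a clamped renderer keeps and the residual that is lost. How the paper actually recovers that residual in screen space is not shown, and the function name and bound value are assumptions for illustration.

```python
def split_geometry_term(cos_at_light, cos_at_receiver, distance, bound):
    """Return (clamped, residual) for one VPL-to-receiver connection.

    The geometry term cos * cos / distance^2 grows without limit as the
    receiver approaches the VPL, which causes the bright pin-point artifacts.
    """
    geometry = (max(cos_at_light, 0.0) * max(cos_at_receiver, 0.0)
                / (distance * distance))
    clamped = min(geometry, bound)
    residual = geometry - clamped  # energy that clamping throws away
    return clamped, residual


# Near the VPL almost all of the term is clamped away; far away nothing is.
print(split_geometry_term(1.0, 1.0, 0.1, 10.0))
print(split_geometry_term(1.0, 1.0, 2.0, 10.0))
```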

Session: Human Animation

This is certainly not my area of expertise, so I only have a few comments about these papers.

Motion Rings for Interactive Gait Synthesis

This is human walking motion interpolation made more efficient, responding within a quarter-gait, which is necessary for interactive applications. It relies on a parameterized motion loop (called a “ring” here), and uneven terrain is handled with IK adjustments.

Realtime Human Motion Control with A Small Number of Inertial Sensors

This paper describes how to combine high-quality prerecorded motion capture data with live motion from just a few simple (noisy) sensors to enhance what would otherwise be very poor input. This is validated by comparing against the high-quality original data for motions like walking, boxing, golf swings, and jumping.

A Modular Framework for Adaptive Agent-Based Steering

Crowd simulations need hundreds of characters, but often lack local (per-person) intelligence. This paper presents a framework for dynamically choosing among several local steering strategies. This is able to handle fairly tight and deadlocked situations, such as two people walking toward each other down a narrow hall, though some of the resulting motion is awkward.

Session: Geometric and Procedural Modeling

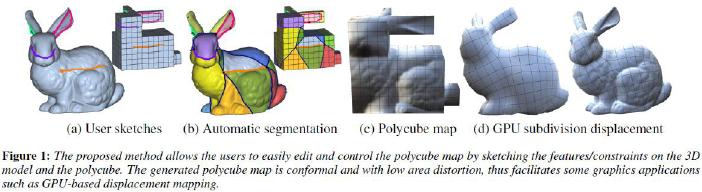

Editable Polycube Map for GPU-based Subdivision Surfaces

This is an extension of previous “polycube map” work to allow transferring geometric detail from a high-resolution triangle mesh to subdivision surfaces. A simple modeling system was presented where the user creates a very coarse polycube and sketches a handful of correspondences between it and the high-resolution mesh. The results are really quite remarkable, and you can see the process below.

GPU Curvature Estimation on Deformable Meshes

It is not unusual to perform vertex skinning or iso-surface extraction on the GPU now. However, for some effects like ambient occlusion or NPR edge extraction, it is useful to have the mathematical curvature of the new surface, but this is slow or impossible to read back from the GPU. This paper presents a method for estimating curvature on the GPU in real-time, even for very detailed models, much faster than could be done on the CPU. [This paper was a “Best Paper – Honorable Mention”.]

Urban Ecosystem Design

I have seen several papers at SIGGRAPH and elsewhere about procedurally generating urban environments: basically streets and buildings. This paper procedurally adds plants (mostly trees) to such urban layouts. City blocks are assigned a level of human “manageability” which determines how organized or wild the plants in that block will be. From there, growth and competition rules are applied. Only the city geometry is taken into account, so this can be used with systems where other information (like land use) is not available. It was implemented with CUDA, and as an example, it can simulate 70 years of growth for 250,000 plants in about two minutes.

Session: Interactivity and Interaction

Data Management for SSDs for Large-Scale Interactive Graphics Applications

Here “SSD” refers to solid-state disks, so this is about organizing a graphics database to allow for efficient out-of-core rendering using SSDs instead of traditional hard disks with spinning platters. Since SSDs don’t need data ordering, locally (as opposed to globally) optimized layouts work well with them. The presentation seemed to be lacking in detail, but the demo was fairly impressive: a very large scene on disk being displayed and edited very smoothly with very little RAM. For any developers working with large graphics databases, I would certainly recommend reading this paper to get some ideas.

Coherent Image-Based Rendering of Real-World Objects

This paper attempts to generate a depth map from images captured from a few cameras. The goal is to build a virtual dressing room with a “mirror”: the mirror is a display that shows you with different virtual clothes on. The entire system runs with CUDA on a single machine with a single GPU, at interactive rates even with full body movement. It exploits frame coherence, i.e., reusing parts of previous frames, to reduce latency. This was surprisingly the only paper on image-based rendering, despite the first keynote (below) being entirely about IBR.

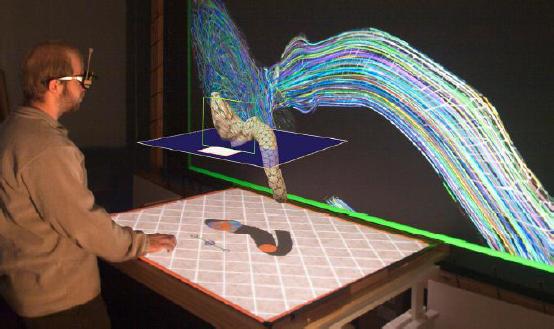

Slice WIM: A Multi-Surface, Multi-Touch Interface for Overview+Detail Exploration of Volume Datasets in Virtual Reality

Here WIM is “world in miniature,” a technique that presents a small version of a virtual environment to help the user navigate the environment. This paper extends the technique to aid in the visualization of complex volume data generated from slices, especially for medical imaging. The resulting system consists of a large main display, a smaller horizontal touch display through which the user can manipulate the view and slices, and a head-tracked VR display for the WIM. Better to just show you! [This paper was a “Best Paper – Honorable Mention”.]

TVCG Papers

A few papers from IEEE Transactions on Visualization and Computer Graphics were also presented as “guests” of the conference. These didn’t seem as relevant, but I list them here:

- Interactive Visualization of Rotational Symmetry Fields on Surfaces

- Real-Time Ray-Tracing of Implicit Surfaces on the GPU

- Simulating Multiple Character Interactions with Collaborative and Adversarial Goals

- Directing Crowd Simulations Using Navigation Fields

Posters

I didn’t spend much time looking at the posters this year, but I did make note of a few that I would like to investigate further; see below. The full poster list is here [ACM Digital Library subscribers and SIGGRAPH members can download from here].

- gHull: A Three-dimensional Convex Hull Algorithm for Graphics Hardware

- Interactive Indirect Illumination Using Voxel Cone Tracing [This was “Best Poster – Winner”.]

- Level-of-Detail and Streaming Optimized Irradiance Normal Mapping

- Poisson Disk Ray-Marched Ambient Occlusion

Talks

Image-Based Rendering: A 15-Year Retrospective

This talk was given by Rick Szeliski from Microsoft Research. As the title implies, it was an overview of image-based rendering research, covering topics such as panoramas, image-based modeling, photo tourism, and (in particular) light fields. The fundamental problem of such research is to determine how to make a scene from an existing one. At one point he mentioned that Autodesk “probably” has something for image-based modeling, and indeed we do; I sent that link to him after the conference. Naty Hoffman of Activision has a longer discussion of this talk in an earlier blog posting.

From Papers to Pixels: How research finds its way into Games

This talk by Dan Baker of Firaxis was a light “rant” about what researchers should do to get their research into games. For example, they need to consider quality and ease of integration, and realize that hardware advances will make certain techniques obsolete quickly. Most of it was reasonable, but it included a number of controversial points, in my opinion. For example, “nobody uses OpenGL” and there is too much research into GPGPU.

He also started out by stating that the vast majority of the research for I3D is for games, and that everything else is “boring”… calling out “3D CAD” in particular as boring! By the end, I was quite tempted to provide an on-the-spot rebuttal from the Autodesk perspective.

A Game Developer’s Wishlist for Researchers

Chris Hecker, formerly of Maxis, presented his opinion on almost the same topic, i.e. what game developers want from researchers; I liked it better. His priorities are robustness, simplicity, and performance, in that order, but researchers often make the mistake of putting performance first. The nature of interactivity means that robustness is absolutely critical. For example, you can’t afford errors even every few hundred frames when you are rendering at 30 fps. He also stated that papers frequently exclude negative results and worst-case scenarios, which would be helpful for assessing robustness.

I could say more, but Chris has the full talk available here. See for yourself why he says, “We are always about to fail.”

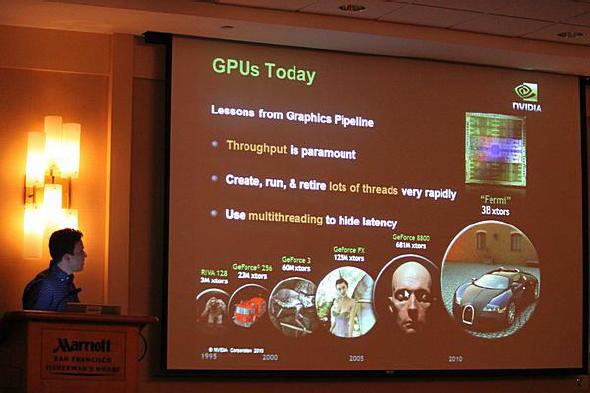

GPU Computing: Past, Present, and Future

This was the banquet talk, given by David Luebke of NVIDIA. It was a fairly light look at the state of GPU computing, where it has come from and where it is going. A lot of it went against Dan Baker’s earlier comments about GPGPU, and David made sure to point that out (and I agree).

Some of this talk had the feel of a marketing presentation for NVIDIA’s CUDA and GPU computing products like Tesla, but it is hard to deny that this area is important. He cited several cases where GPU computing is saving lives, e.g. assisting in heart surgery, malaria treatment, and cancer detection. Of course, he also mentioned graphics, scientific computing, data mining, speech processing, etc. At one point he (amusingly) pointed out that all of this innovation and technology has its roots in humble VGA controller hardware.

Mobile Computational Photography

As someone who recently started going beyond point-and-shoot photography with a DSLR camera, this talk was quite interesting. Kari Pulli of Nokia described an API for controlling digital cameras called FCam… and it’s pretty cool what skilled hands are able to do with this. Note that this isn’t really about high-end cameras: the idea is to allow even cell phone cameras to take great photos.

FCam basically makes a supporting camera programmable, allowing you to do things that the camera normally can’t do. Most of it revolves around taking multiple images and combining them to produce a better result. For example, from his talk and the site:

… take two photos with different settings back-to-back. The first frame has a high ISO and short exposure time, and the second has a low ISO and longer exposure time. The first frame tends to be noisy, while the second instead exhibits blur due to camera shake. This application combines the two frames to create an output that’s better than either.

This is absolutely something I wish I could do with my current camera. Another example he showed was combining a few images with narrow depths of field into a single image with a wide depth of field (i.e. fully sharp). Another automatically took photos until one was captured with minimal camera shake, keeping only the “good” one. All of this is done right on the camera, which is a great improvement over the typical post-processing workflow, and it can leverage metadata that only the camera has.
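To give a feel for the "keep only the good one" example, here is a small sketch that picks the sharpest frame from a burst. It does not use the FCam API at all, and the report does not say how the real application judges camera shake. The gradient-energy sharpness measure and the function names are my assumptions, and frames are plain 2D lists of grayscale values.

```python
def sharpness(image):
    """Sum of squared differences between adjacent pixels; a shaken,
    blurry frame has weaker edges and therefore scores lower."""
    rows, cols = len(image), len(image[0])
    total = 0
    for y in range(rows):
        for x in range(cols):
            if x + 1 < cols:
                total += (image[y][x + 1] - image[y][x]) ** 2
            if y + 1 < rows:
                total += (image[y + 1][x] - image[y][x]) ** 2
    return total


def pick_sharpest(frames):
    """Return the index of the frame with the highest sharpness score."""
    return max(range(len(frames)), key=lambda i: sharpness(frames[i]))


# Tiny made-up burst: two flat (blurred) frames and one with strong edges.
blurred = [[5, 5], [5, 5]]
crisp = [[0, 10], [10, 0]]
print(pick_sharpest([blurred, crisp, blurred]))
```

On a programmable camera, a loop like this could run between shots and stop capturing once a frame scores above some threshold, rather than sorting through the burst afterwards on a PC.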