I’ve had a goal this week (it should be clear by now) of clearing my queue of stored-up RTR links by my birthday, which is today! (Hint: I want a pony.) So excuse the excessively long list o’ links. Next task on my list: update the main RTR page itself.

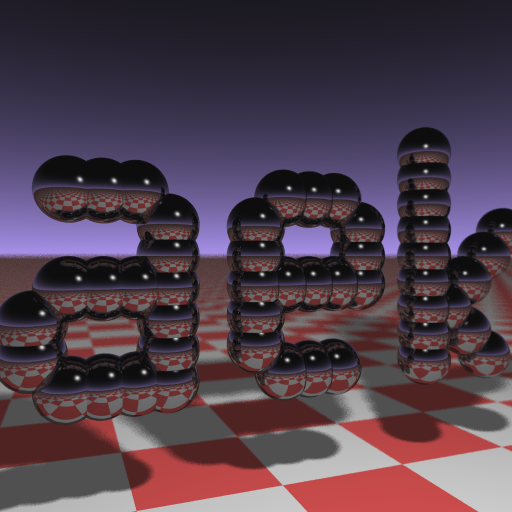

- StructureSynth. This looks pretty cool, and I love procedural models (my ancient SPD package was all about this, back in the days when downloading models was oppressively slow). I do wish they just provided an executable – building looks like a pain.

- That previous link was on Meshula.net, which also blogs about Pixel Bender Fractals. Great stuff, sort of steampunk computer graphics: you must click this link, if no other on this page, and look on in awe.

- Shapeways has a blog, and it’s not just dull company announcements. I’m glad they find people as pixels as interesting as I do. They also cover exporting Spore characters to Collada files (which is a great addition to Spore) and creating physical models from these.

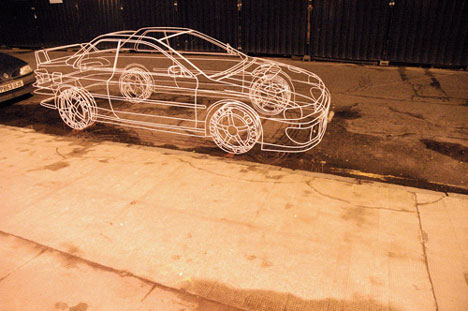

- In related news, The Economist has a reasonable summary of some trends in 3D printing. Their Technology Quarterly also has articles on Augmented Reality, 3D displays, and CAPTCHAs, among other topics.

- This is one more reason the Internet is great: an in-depth article on normal compression techniques, weighing the pros and cons of each. This sort of article would probably not see the light of day in traditional publications, even Game Developer – too long for them, but all the info presented here is worthwhile for a developer making this decision. Aras’ blog has other nice bits such as packing a float into RGBA and SSAO blurring.

- I need to add a link to the article itself to the object intersection page, but Morgan McGuire recently verified that he found this ray/box algorithm super-fast in SIMD. Code’s downloadable from that page, and a free version of the article is downloadable here. Morgan uses this test in the ray tracer for his cool photon mapping paper at HPG 2009; if nothing else, you should at least see the video.

- In related news, I am happy to see that AK Peters is beginning to put past Journal of Graphics Tools articles online. At $15 each, the price of an article is quite high for individuals (or at least this individual), but current Journal of Graphics (GPU, & Game) Tools subscribers have full access to this archive for free. The mechanism to get access is a little clunky right now: if you’re a subscriber, you need to register with Metapress, then tell AK Peters your userid and they’ll provide you access.

- Related to this, I hope Google Books conquers the world (or anyone else doing similar work, as long as it isn’t Apple or Amazon or other overcharging closed-box “we’re just protecting the authors, who get 10% or less for a purely digital sale with nil physical cost to us per unit” retailers – rant over, and I do understand there are fixed start-up costs for the retailer/publisher/etc., but really…). Google Books is so darn handy to look for short articles in books at Google’s repository, such as this one giving a clean way to build an orthonormal basis given a vector, from Graphics Tools: The JGT Editors’ Choice.

- Humus provides a whole slew of new cubemaps he captured, if you’re getting tired of Grace Cathedral.

- CUDA itself (vs. others) may or may not be a critical technology, but what it shows about the underlying GPU architecture is fascinating.

- It should be mentioned: August 2009 DirectX SDK is available. Includes the first official release of DirectX 11.

- This is hilarious, and possibly even useful!

- I love seeing things like this: build your own multitouch display. Not that I’ll ever do it, but I hope others will.

- You might be sick of Larrabee news (ship one, already!), but I found Phil Taylor’s article pleasantly hype-free and informative.

- ATI’s Eyefinity (cute marketing name, I must admit – now I want to use the word everywhere) seems to me to solve a problem that rarely occurs: too much GPU for too few screens. Still, it’s nice to have the option. Eyefinity allows up to six monitors to be driven by a single GPU. I guess Eyefinity is useful when running older flight simulator programs on newer GPUs; otherwise, Eyefinity is pretty irrelevant. Eyefinity, eyefinity, eyefinity. At work I find two displays is plenty, one to run, one to debug. Anyway, the sweet spot for the monitor:GPU ratio is 13:1, as can be seen here:

- There’s an article on instancing animated grass using DX10 on Gamasutra.

- Humus’ post on z interpolation is a good summary of the topic. He gives some of the key tricks, e.g., if you’re using a floating-point depth buffer, reverse the range so that near=1.0 and far=0.0 to help preserve precision.

- Here’s a basic tutorial on different projection methods used in videogames, with lots of visual examples (add “Zaxxon” and it’s complete, for me). The one new tidbit I learnt from it was about reverse perspective, an effect I’ve produced myself every now and then when I screw up a projection matrix.

- While I’ve been on break (one of the reasons I’ve been posting so much – Autodesk gives wonderful 6 week “sabbaticals”, aka “long vacations”, to U.S. employees every four years you’re there; it’s like being French or Swedish every fourth year), the rest of the company’s been busy: this new sketch application for the iPhone looks pretty cool, at the usual $2.99 “cup of coffee” type price.

- Caustics can be dangerous. I can attest to this myself; a goofy award Andrew Glassner gave me long ago sat on my windowsill for years (I moved once, as you should discern from the picture), until I noticed what was happening to the base:

- I usually don’t have time to keep up with Slashdot, but SeenOnSlash, the funny bits of Slashdot, is sometimes entertaining. Graphics-related example: AMD’s latest chip.
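
A footnote on the z interpolation item above: here is a rough sketch of why the near=1.0, far=0.0 trick helps with floating point. It is not taken from Humus’ post. It assumes the usual perspective depth mapping, and the near/far distances, the sample range, and all function names are made up for illustration. It rounds each depth value to 32-bit float and counts how many distinct values survive for a run of distant surfaces.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (a double) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def standard_depth(z: float, near: float, far: float) -> float:
    # Conventional mapping (assumed): near plane -> 0.0, far plane -> 1.0.
    return far / (far - near) * (1.0 - near / z)


def reversed_depth(z: float, near: float, far: float) -> float:
    # Reversed mapping: near plane -> 1.0, far plane -> 0.0.
    return near / (far - near) * (far / z - 1.0)


def count_distinct(depth_fn, zs, near: float, far: float) -> int:
    """Count distinct float32 depth values produced for the given distances."""
    return len({to_float32(depth_fn(z, near, far)) for z in zs})


# Illustrative values only: a small near distance and a large far distance.
NEAR, FAR = 0.1, 10000.0

# 100 surfaces spaced one unit apart, far from the eye.
distant_zs = [5000.0 + i for i in range(100)]

std_count = count_distinct(standard_depth, distant_zs, NEAR, FAR)
rev_count = count_distinct(reversed_depth, distant_zs, NEAR, FAR)

print(f"standard: {std_count} distinct depths out of {len(distant_zs)}")
print(f"reversed: {rev_count} distinct depths out of {len(distant_zs)}")
```

With the conventional mapping, distant surfaces crowd up against 1.0, where floats are comparatively coarse, so many of them collapse to the same stored value. Reversing the range puts them near 0.0, where floats are densest, so they stay distinguishable.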